Google Cloud leveraging Nvidia could bring $2 billion sales bump

This analysis is by Bloomberg Intelligence Senior Industry Analyst Mandeep Singh and Associate Analyst Nishant Chintala. It appeared first on the Bloomberg Terminal.

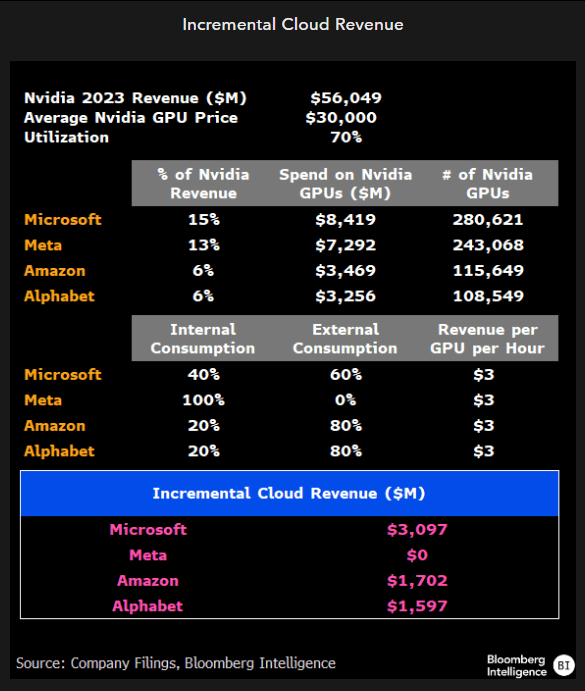

Google Cloud could see a sales boost of at least $2 billion from training and inferencing generative-AI workloads in 2025, helping drive a faster-than-expected acceleration in profitability. The company appears well positioned to leverage a higher portion of its Nvidia processing-unit allocation for its enterprise customers vs. other hyperscalers such as Meta and Amazon.com.

Google Cloud could get sales boost of 400-500 bps

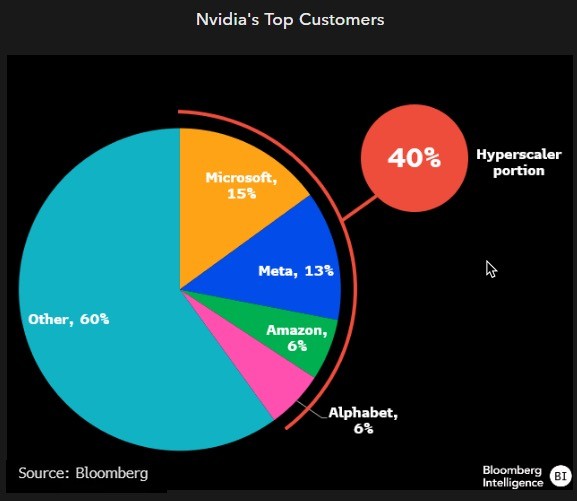

Though Alphabet has half the allocation of Nvidia’s graphics processing units (GPUs) vs. hyperscalers including Meta and Microsoft, its internal use of GPU compute for training and inferencing workloads is far lower, based on our calculation. Google Cloud is already at an annual sales run rate of $35 billion, with AI workloads poised to contribute at least 400-500 bps in growth for the segment in 2025, excluding potential revenue contribution from Duet AI copilot and Gemini licensing and subscriptions.
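As a rough illustration of the arithmetic behind these figures, the sketch below converts a basis-point growth contribution into dollars. The note does not say which revenue base the 400-500 bps applies to, so using the $35 billion run rate as the base is an assumption made here, and the function names are hypothetical. The second function inverts the calculation to show which base would make a $2 billion boost equal to 400-500 bps.

```python
# Illustrative only: the choice of revenue base is an assumption, not from the note.
BPS_PER_UNIT = 10_000  # 1 basis point = 0.01% = 1/10,000


def bps_to_dollars(base_revenue: float, bps: float) -> float:
    """Dollar value of a growth contribution given in basis points of a base."""
    return base_revenue * bps / BPS_PER_UNIT


def implied_base(dollar_boost: float, bps: float) -> float:
    """Revenue base at which a dollar boost equals the given basis points."""
    return dollar_boost * BPS_PER_UNIT / bps


RUN_RATE = 35e9  # annual sales run rate cited in the note
BOOST = 2e9      # minimum sales boost cited in the note

for bps in (400, 500):
    contribution = bps_to_dollars(RUN_RATE, bps)
    base = implied_base(BOOST, bps)
    print(f"{bps} bps of $35B = ${contribution / 1e9:.2f}B; "
          f"$2B equals {bps} bps of a ${base / 1e9:.0f}B base")
```

On the current $35 billion run rate, 400-500 bps works out to $1.4 billion-$1.75 billion, while a $2 billion boost corresponds to 400-500 bps of a $40 billion-$50 billion base. The two figures in the note are therefore consistent only if the segment's base is larger than today's run rate, which is one possible reading rather than something the note states.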

Microsoft has seen a similar boost to its Azure segment from AI-inferencing workloads. Given Microsoft’s close alliance with OpenAI, we believe other foundational-model companies aren’t training their large language models (LLMs) on its cloud.

Incremental margin for AI workloads

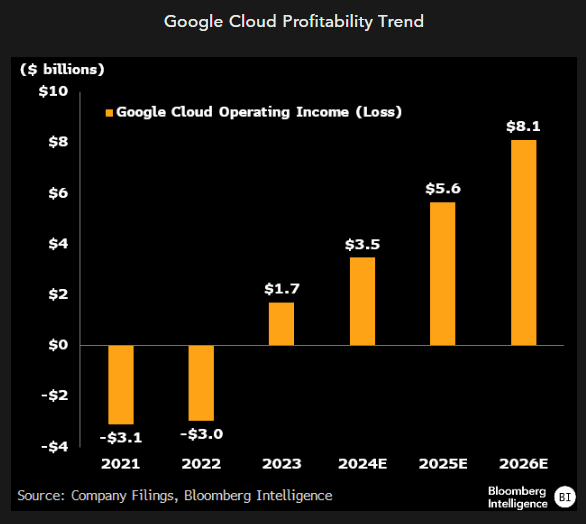

Google’s operating-profit growth appears likely to accelerate, driven by the higher incremental margin of generative-AI workloads. Google Cloud is already at an operating-profit run rate of $3.2 billion for 2024, compared with $3 billion annual losses in 2021 and 2022. The company may have an advantage in capturing share in gen-AI workloads that require different types of compute vs. those for traditional servers. We believe Google is in a strong position to narrow the gap with Amazon AWS and Microsoft Azure by leveraging its own TPU-chip architecture, which could quicken the ramp-up of Google Cloud profitability through 2024-25.

Nvidia GPU compute availability

Alphabet appears well positioned to monetize a larger portion of its Nvidia GPU capacity for its Cloud-segment customers compared with other hyperscalers that use the GPU compute capacity for training their own foundational models. Most of Google’s internal gen-AI workloads are optimized for performance on its own TPU accelerator chips. Google Cloud is likely to offer a full suite of products for training large language models, which can cost up to $100 million and may get more expensive amid growing complexity and increasing parameters within these models.

Security could be a differentiator

Security could be another offering that sets Google Cloud apart from other hyperscalers as companies seek to consolidate suppliers. Similar to Microsoft’s traction with bundling its security offerings with Azure cloud infrastructure, we believe Google Cloud may see a pickup in adoption driven by the native security offered on its cloud. The company’s recent Cloud Next event featured a number of security capabilities, which we believe could become a differentiating factor as enterprises seek to deploy generative AI.

This is not an investment recommendation. Investors are advised to consult a licensed investment professional before making an investment decision. Past investment performance is no guarantee of future price appreciation or performance.