Corporate AI capex ready to rocket with focus on the big payoff

Bloomberg Intelligence

This analysis is by Bloomberg Intelligence Industry Analyst Woo Jin Ho. It appeared first on the Bloomberg Terminal.

The ramp-up in corporate AI spending in 2025 will include a greater focus on deploying applications and components that help run AI systems faster and more efficiently, a process known as inference, as companies look to monetize or drive productivity from their investments. While Nvidia and AMD remain the leading beneficiaries, our biggest takeaways from the SC24 supercomputing conference are that the rise of inference workloads should create opportunities for vertically integrated AI vendors Groq, SambaNova and Cerebras, while Vast Data and NetApp should gain as the data foundation for AI deployments.

Enterprises ready to take an even bigger AI leap in 2025

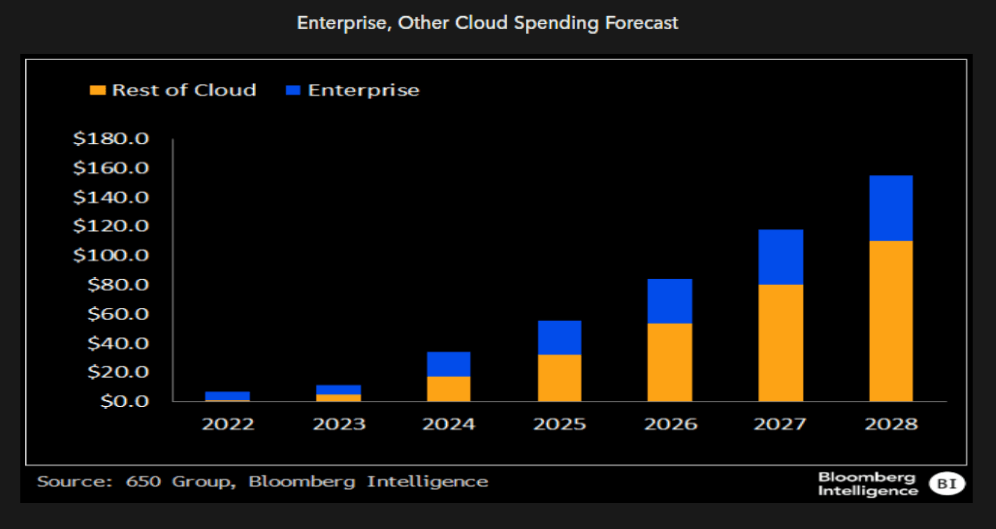

Enterprise customers may start to make bigger AI investments in 2025 following the development and proof-of-concept work in 2024, based on our conversations at the weeklong SC24 conference in Atlanta. Unlike hyperscale cloud customers, which have been focused on investing in the infrastructure to train larger language foundation models, enterprises are likely to place greater focus on fine-tuning models and building inference models as customers aim to monetize or drive the productivity gains from AI spending.

The bulk of AI equipment spending will continue to favor AI training due to the relative size and scale of these deals. But the number of model fine-tuning and output-based AI inference deployments should ramp up in earnest in 2025, with scope to inflect in 2026.

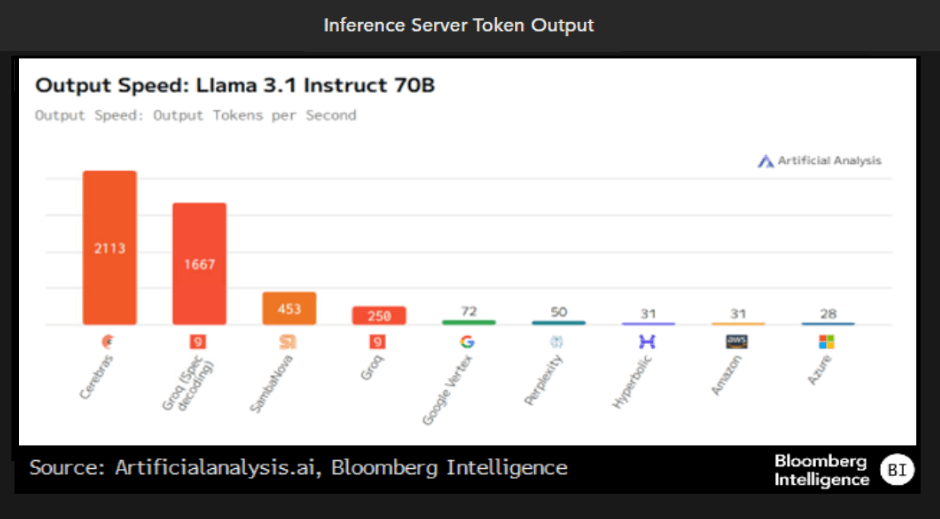

Inference offers Cerebras, Groq, SambaNova opportunities

The growing focus on inference should provide opportunities for vertically integrated AI vendors Cerebras, Groq and SambaNova. These companies will remain smaller niche players behind Nvidia and AMD due to their relative size and the risk-averse nature of some enterprises. While each of them has a differentiated approach to processing tokens (the units of data an AI model reads and generates), they have several advantages over a distributed GPU-based system from Nvidia or AMD.

These AI systems tick the three key boxes — power, performance and price — that should appeal to enterprises. The price-to-performance advantages are straightforward. These systems draw 10-40 kW of power vs. 100-140 kW for a fully loaded Nvidia Blackwell rack, allowing these systems to be deployed in traditional data centers that lack liquid cooling.

Vast Data, NetApp, Pure Storage tame AI data tsunami

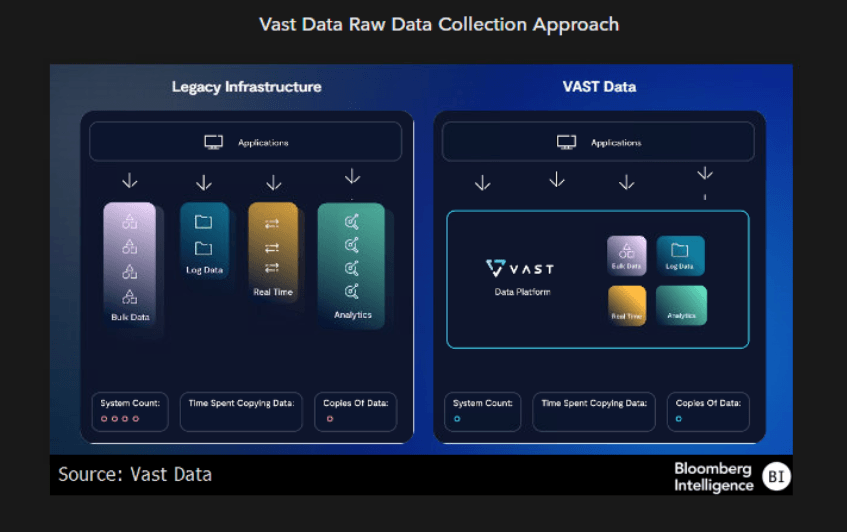

Data access, quality and management should play a bigger role in AI inference workloads, which should benefit leading storage vendors NetApp, Pure Storage and privately held Vast Data. AI systems require an independent storage infrastructure to support workloads for data quality reasons and to improve GPU utilization rates. The rise of retrieval-augmented generation, or RAG, aids large language model accuracy and should drive broader AI storage adoption in 2025.

Vast has emerged as one of the more intriguing storage vendors since its file system is built for machine learning and AI, and it aims to unify disparate pools of storage onto a single platform. This approach aims to streamline the data pipeline, and Vast has become the preferred storage partner for leading GPU clouds such as Lambda Labs, CoreWeave and Core42.

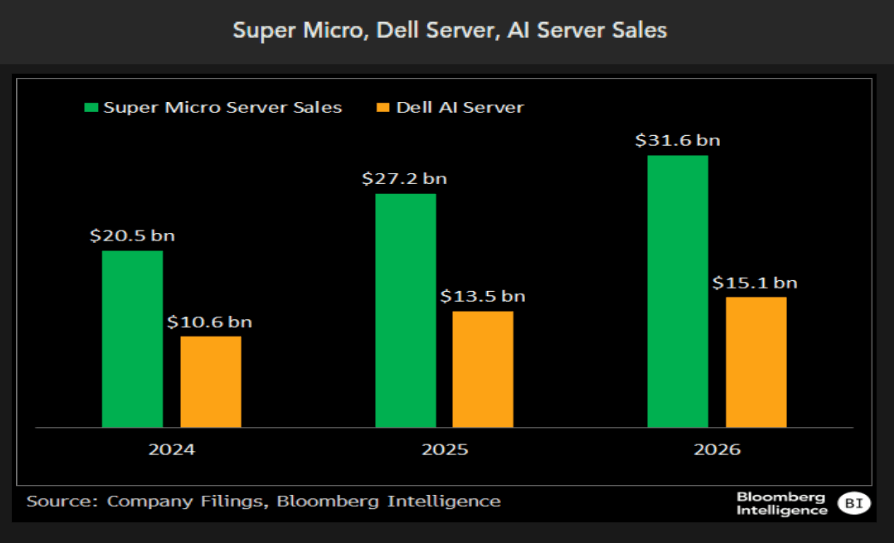

Super Micro fallout rumblings; Dell, Penguin possible gainers

Our discussions at SC24 suggest growing customer concerns from the scrutiny surrounding Super Micro’s 10-K and 10-Q filing delays, and some may be starting to explore alternative vendors. The AI server competitive landscape has intensified over the past few quarters, with electronics manufacturing services providers such as Ingrasys and Wistron able to build AI systems for Dell or other server vendors. It also potentially opens opportunities for AI system designer Penguin Solutions, which can design, build and ship at scale and is largely US-based.

Any exodus from Super Micro due to the filing overhang won’t be immediate, and any impact on sales may be two to four quarters away. But given its exposure to deals in the eight- to ten-figure dollar range, competitive shifts could have a sizable impact on results and outlook.

© 2024 Bloomberg.