Bloomberg Intelligence

This analysis is by Bloomberg Intelligence Senior Industry Analyst Mandeep Singh. It appeared first on the Bloomberg Terminal.

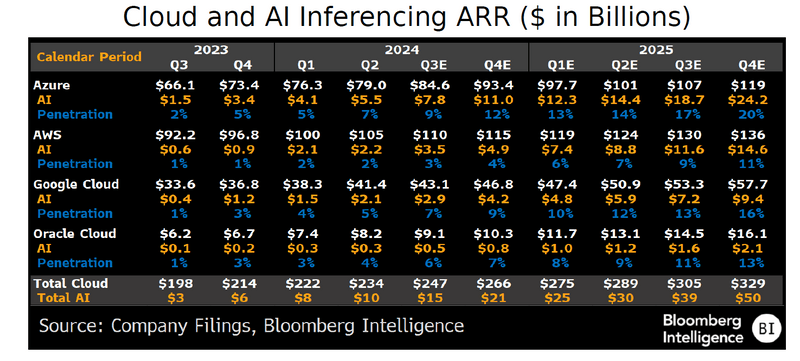

The boost that generative-AI workloads are giving to $235 billion in hyperscale-cloud sales, coupled with increased reasoning capabilities for pre-trained models, could drive faster growth in inferencing relative to the larger training market. We believe techniques such as quantization and distillation may gain momentum to shrink the size of trained models for more privacy-centric use cases, including personal assistants and AI agents.

Cloud vs. on-device inferencing

Microsoft has already quantified about 7 percentage points of gen-AI workload contribution, primarily from inferencing, to its Azure revenue growth. This equates to about a $5 billion revenue run rate. Other hyperscalers, including Amazon.com and Google, also generate multiple billions of dollars in gen-AI inferencing workload revenue. Apple Intelligence aims to boost the use of on-device inferencing, which doesn’t require application programming interface (API) calls to large language model (LLM) providers.

Hyperscale cloud’s $235 billion revenue could get a lift from inferencing workloads that run in the cloud rather than natively at the edge on PCs and smartphones. In the case of Apple Intelligence, the on-device LLM may offload certain complex tasks to more powerful models hosted in Apple’s data centers (Private Cloud Compute).

Reasoning vs. smaller models

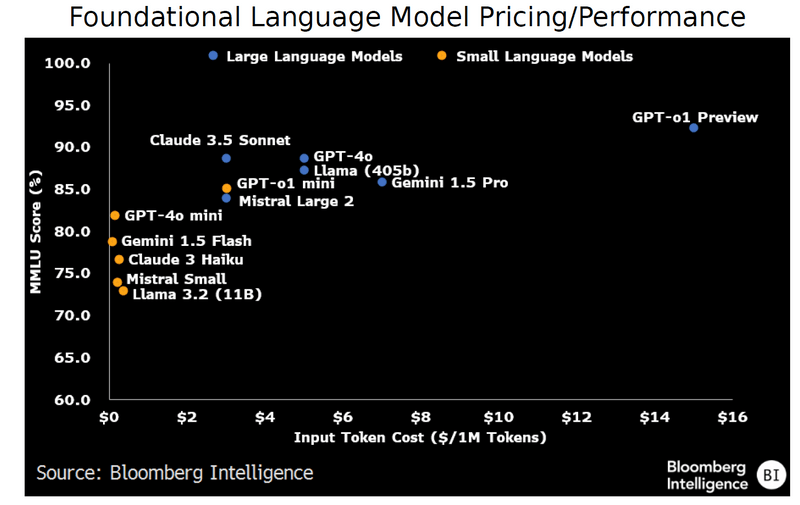

A pivot to smaller models with fewer parameters, which can be used for specific tasks vs. LLMs, will likely fuel a secular shift in applications to agent functionality. OpenAI released its “chain-of-reasoning” o1 model, along with an o1-mini version, to showcase improvements in reasoning capabilities. Given the difference in pricing across various versions of LLMs, we believe companies may mix LLMs from different providers, depending on the query.
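The idea of mixing models by query can be illustrated with a minimal routing sketch. Everything here is an assumption made for illustration: the model labels, the per-token prices and the keyword heuristic are hypothetical and do not reflect any provider's actual products or pricing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str                       # hypothetical label, not a real product
    usd_per_million_tokens: float   # illustrative price, not a quoted rate
    handles_reasoning: bool         # whether we trust it with multi-step tasks


# Hypothetical catalog spanning a small task model, a general LLM
# and a pricier reasoning model.
CATALOG = [
    ModelOption("small-task-model", 0.20, False),
    ModelOption("general-llm", 3.00, False),
    ModelOption("reasoning-llm", 15.00, True),
]

# Crude stand-in for a real query classifier.
REASONING_HINTS = ("prove", "step by step", "plan", "debug")


def needs_reasoning(query: str) -> bool:
    """Return True if the query looks like a multi-step reasoning task."""
    q = query.lower()
    return any(hint in q for hint in REASONING_HINTS)


def pick_model(query: str, catalog=CATALOG) -> ModelOption:
    """Choose the cheapest model that is capable of handling the query."""
    require = needs_reasoning(query)
    candidates = [m for m in catalog if m.handles_reasoning or not require]
    return min(candidates, key=lambda m: m.usd_per_million_tokens)


def estimated_cost(model: ModelOption, tokens: int) -> float:
    """Estimated spend in dollars for a given token volume."""
    return model.usd_per_million_tokens * tokens / 1_000_000
```

Under these assumptions, a summarization request is routed to the cheapest model, while a query flagged as a reasoning task goes to the more expensive one. A production router would likely replace the keyword check with a trained classifier.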

A small, low-latency AI model (5-10B parameters) will be included in iOS 18 as part of the Apple Intelligence framework. It will be able to understand user commands and the current screen, and take actions in apps. It can handle tasks like summarization, as well as power the “AI agent” features of Siri, including user commands that require utilizing multiple apps.

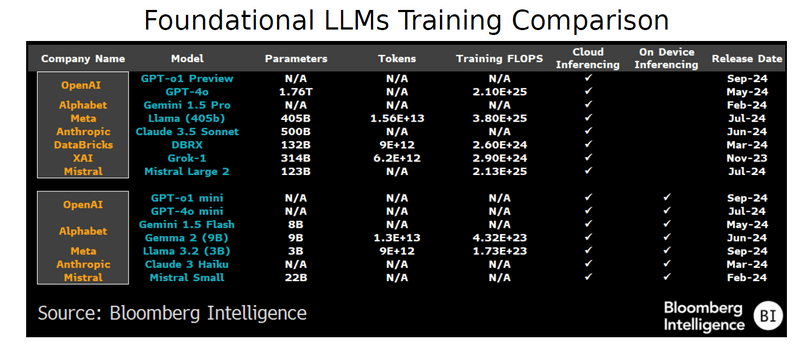

Shrinking LLM parameters for on-device inferencing

As LLMs grow exponentially larger, the number of floating-point operations (FLOPs) needed to run them expands with parameter counts, demanding significantly greater computational resources. To offset rising LLM costs, foundational model companies will seek to shrink trained models to lower inferencing costs for broader use cases across enterprise and consumer applications.
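A back-of-the-envelope sketch shows why parameter count and numeric precision matter for inferencing. It relies on two common rules of thumb that are assumptions here, not figures from this analysis: a dense transformer needs roughly 2 FLOPs per parameter per generated token, and weight memory is parameters times bits per weight. The 7-billion-parameter figure is purely illustrative.

```python
def weight_memory_gb(params: float, bits_per_weight: int) -> float:
    """Approximate memory needed to hold the weights, in gigabytes."""
    return params * bits_per_weight / 8 / 1e9


def flops_per_token(params: float) -> float:
    """Rule-of-thumb forward-pass compute per generated token."""
    return 2.0 * params


if __name__ == "__main__":
    params = 7e9  # illustrative model size
    for bits in (16, 8, 4):
        print(f"{bits}-bit weights: {weight_memory_gb(params, bits):.1f} GB")
    print(f"FLOPs per token: {flops_per_token(params):.2e}")
```

On these assumptions, moving from 16-bit to 4-bit weights cuts the memory footprint of the same model to a quarter, which is the kind of reduction that makes running on a PC or smartphone plausible.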

Most foundational model providers, including OpenAI GPT, Anthropic Claude, Google Gemini, Meta Llama and Mistral, have released smaller versions that use quantization and distillation for edge use cases, so that LLMs can run natively on PCs and smartphones. We believe shrinking the parameter size of models will become more important amid continuous scaling of datasets and tokens used in the transformer architecture that underpins most foundational LLMs.
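For readers unfamiliar with quantization, the following is a minimal sketch of one simple variant, symmetric per-tensor 8-bit quantization, written in plain Python. It is an illustration under stated assumptions, not the method any of the providers named above actually uses, and the function names are hypothetical. Distillation, which trains a smaller model to imitate a larger one, is not shown.

```python
def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    # Guard against an all-zero tensor, where any scale works.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integers and the scale."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    original = [0.5, -1.27, 0.0, 1.0]
    q, scale = quantize_int8(original)
    restored = dequantize(q, scale)
    worst = max(abs(a - b) for a, b in zip(original, restored))
    print("integers:", q)
    print("worst-case rounding error:", worst)
```

Each weight is stored in 8 bits instead of 16 or 32, at the cost of a rounding error bounded by half the scale. Practical schemes typically use finer-grained scales (per channel or per block) to limit the accuracy loss.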

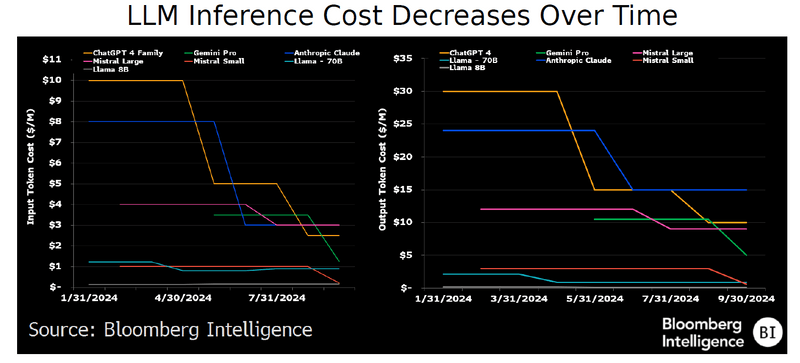

Inferencing efficiency aids margin

Inference efficiency will likely become a big focus given the high training costs and reliance on the latest GPU chips for performance and smarter power use. We believe most companies will seek ways to increase the utilization of their existing compute infrastructure — using both custom chips and smaller models — while lowering their total inferencing costs.

Even amid the elevated spending expectations of hyperscale-cloud providers, we believe a corresponding increase in cloud sales and the use of the lowest-cost models should support margins for cloud providers, as the inferencing market continues to expand beyond coding copilots and customer-service chatbots into other types of applications and use cases.

© 2024 Bloomberg. All rights reserved.