
AI data center workload pivot favors databases over applications

Bloomberg Intelligence

This article was written by Bloomberg Intelligence senior industry analyst Mandeep Singh and associate analyst Robert Biggar. It appeared first on the Bloomberg Terminal.

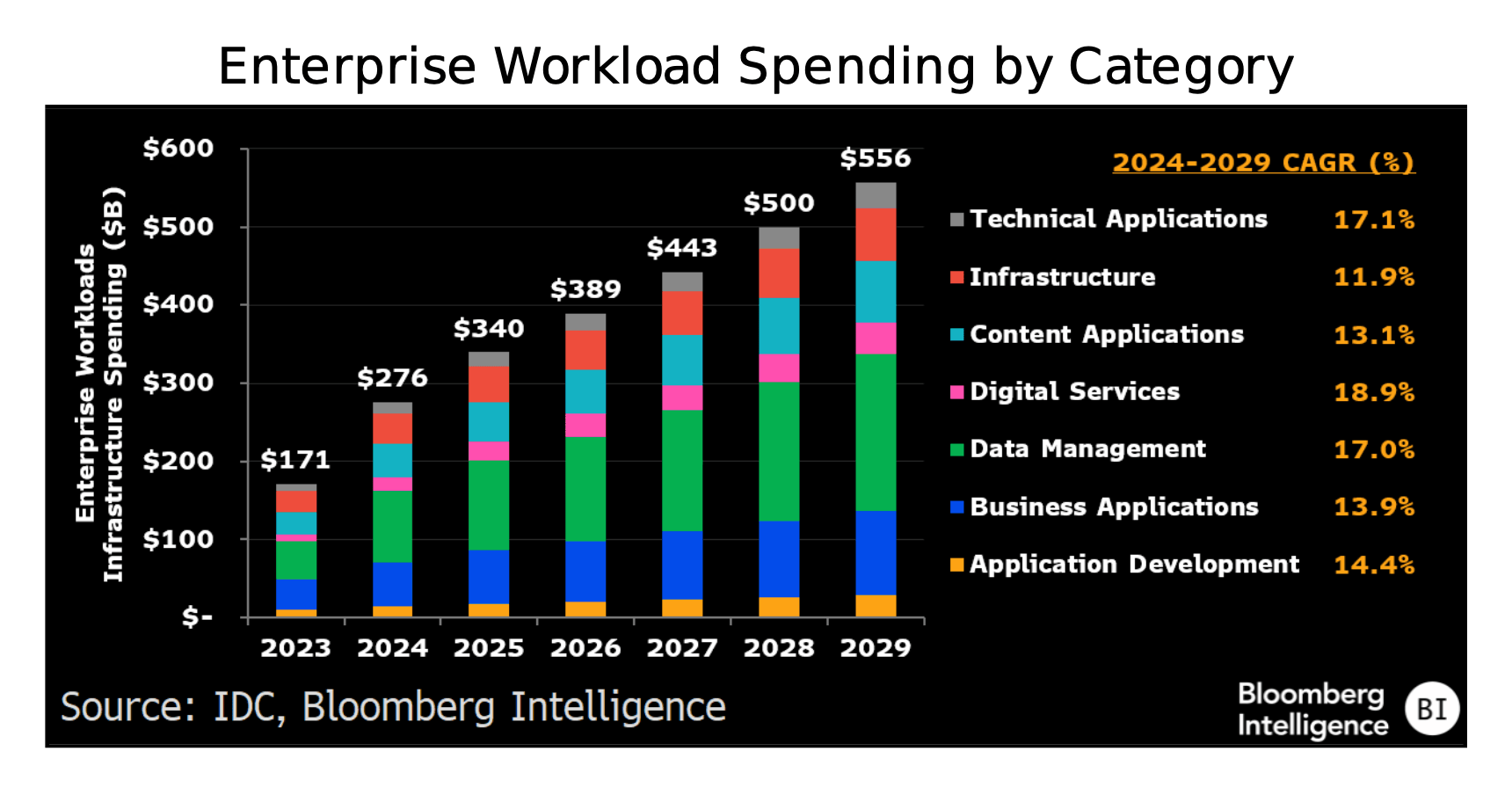

AI’s shift from model development to inference at scale is tilting data-center demand toward databases, especially those used by chatbots, coding agents and other AI agents, and away from application workloads like customer relationship management. That benefits software providers such as Oracle and MongoDB. Content delivery and cybersecurity workloads should also grow faster in AI data centers given their role in agent deployment.

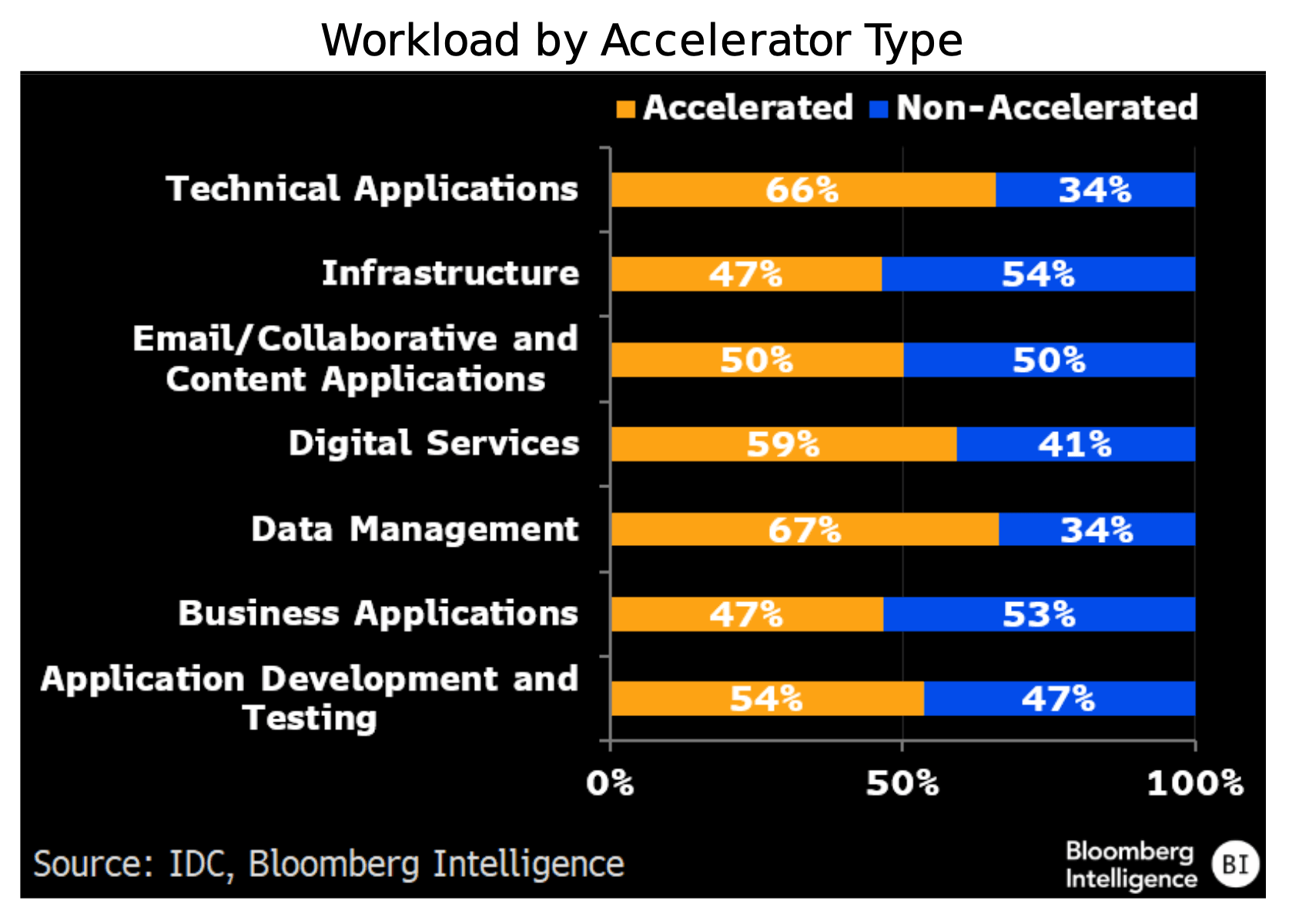

Tokenizing enterprise data to feed chatbots, coding agents and other AI agents should drive above-trend growth for vector databases (stores that index unstructured company data as numerical embeddings). As business functions integrate AI models with proprietary corporate data, software providers like Oracle and MongoDB may see accelerated demand. Beyond the growth in training data, we expect an inflection in database creation and data consumption as agents proliferate across use cases.

Given the intensive iteration behind advanced capabilities, enterprises will rely on accelerator-rich data centers for high workload performance at the database layer. With matrix multiplication at the core of large-language-model inferencing, vector databases are poised to be among the largest workload categories, enabling scale and faster responses.
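To illustrate why vector-database lookups resemble the matrix math behind model inference, here is a minimal sketch in Python. It assumes toy three-dimensional embeddings and a brute-force scan. The function names (`normalize`, `top_k`) and the sample vectors are hypothetical and do not come from any vendor’s product. Production systems use far larger vectors and approximate indexes.

```python
import math

def normalize(vec):
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]

def top_k(query, matrix, k=2):
    """Score every stored vector against the query and return the k best matches.

    Scoring all rows at once is a matrix-vector product: one dot product per row.
    """
    q = normalize(query)
    scores = [sum(a * b for a, b in zip(normalize(row), q)) for row in matrix]
    ranked = sorted(range(len(matrix)), key=lambda i: scores[i], reverse=True)
    return [(i, scores[i]) for i in ranked[:k]]

# Hypothetical embeddings for three stored documents (illustrative values only).
docs = [
    [1.0, 0.0, 0.0],
    [0.9, 0.1, 0.0],
    [0.0, 1.0, 0.0],
]
query = [1.0, 0.0, 0.0]

results = top_k(query, docs, k=2)
for index, score in results:
    print(f"document {index}: similarity {score:.3f}")
```

Each query is scored against every stored row, so the work grows with both the number of documents and the embedding size. That is the kind of dense arithmetic accelerators are built to run in parallel.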

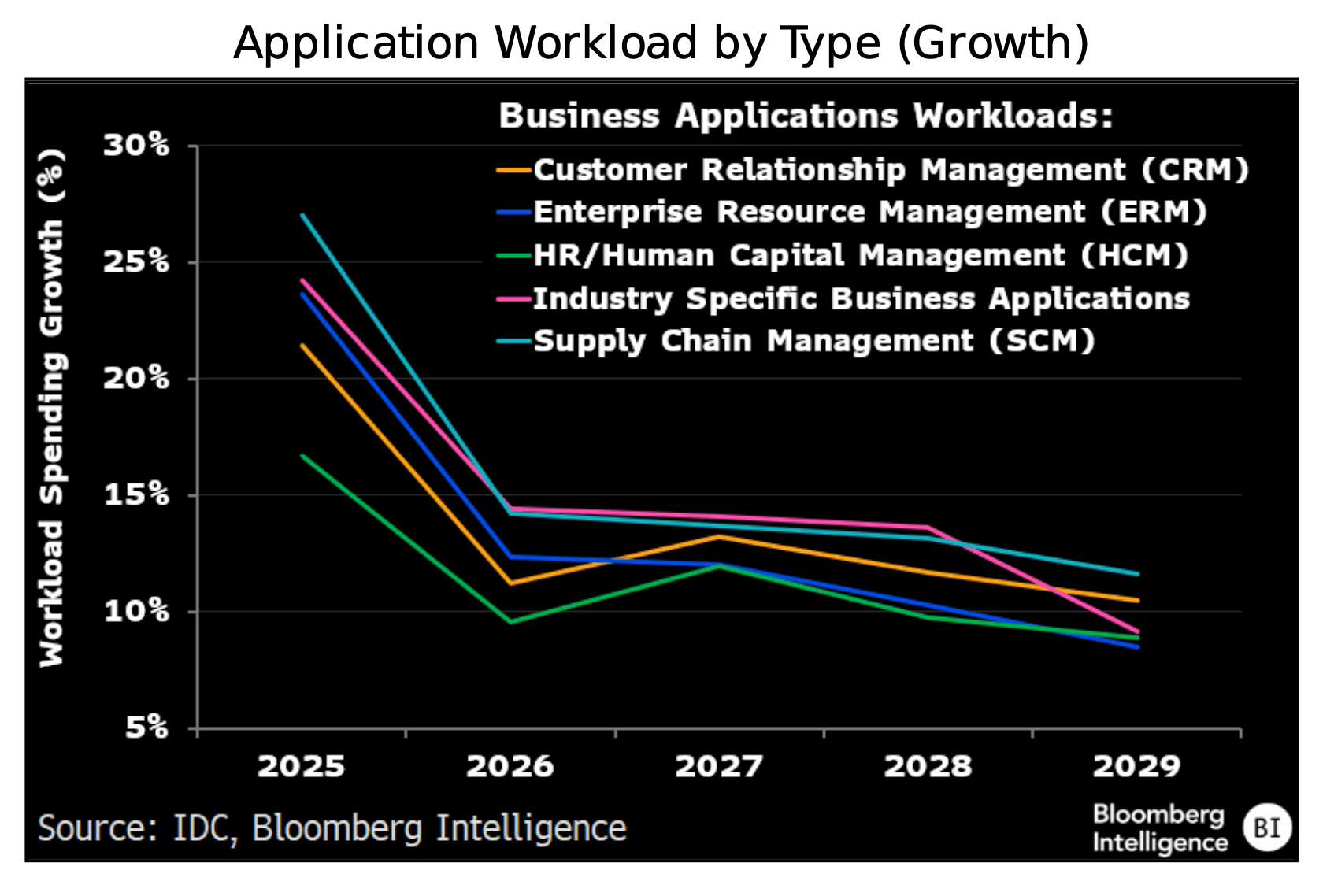

Slowdown in application workloads ahead

As AI agents automate more steps in everyday workflows, less of that work needs to run inside large application suites. That points to slower growth in data-center demand for enterprise resource planning, customer relationship management, human capital management and supply-chain management software. Reasoning-model agents and deep research tools can now browse the web, pull sources and run analyses on their own, tasks that previously lived in those apps’ user interfaces.

Engineering software — computer-aided design and computer-aided manufacturing — may skirt these headwinds, as simulation and synthetic-data creation keep workloads anchored in specialized tools.

Coding agents supercharge testing workloads

AI coding agents (assistants inside developer tools that suggest, write and fix code) should give a big boost to application development and testing workloads. Agents such as Cursor, Anthropic’s Claude Code, GitHub Copilot, OpenAI’s Codex and Google’s Gemini Code Assist handle tasks like debugging and appending to existing code. Companies report 30-40% productivity gains on new code written with these agents, which should channel more development and testing to AI data centers. Prompt-based code generation is quickly becoming one of the most-used generative-AI features in existing business applications.

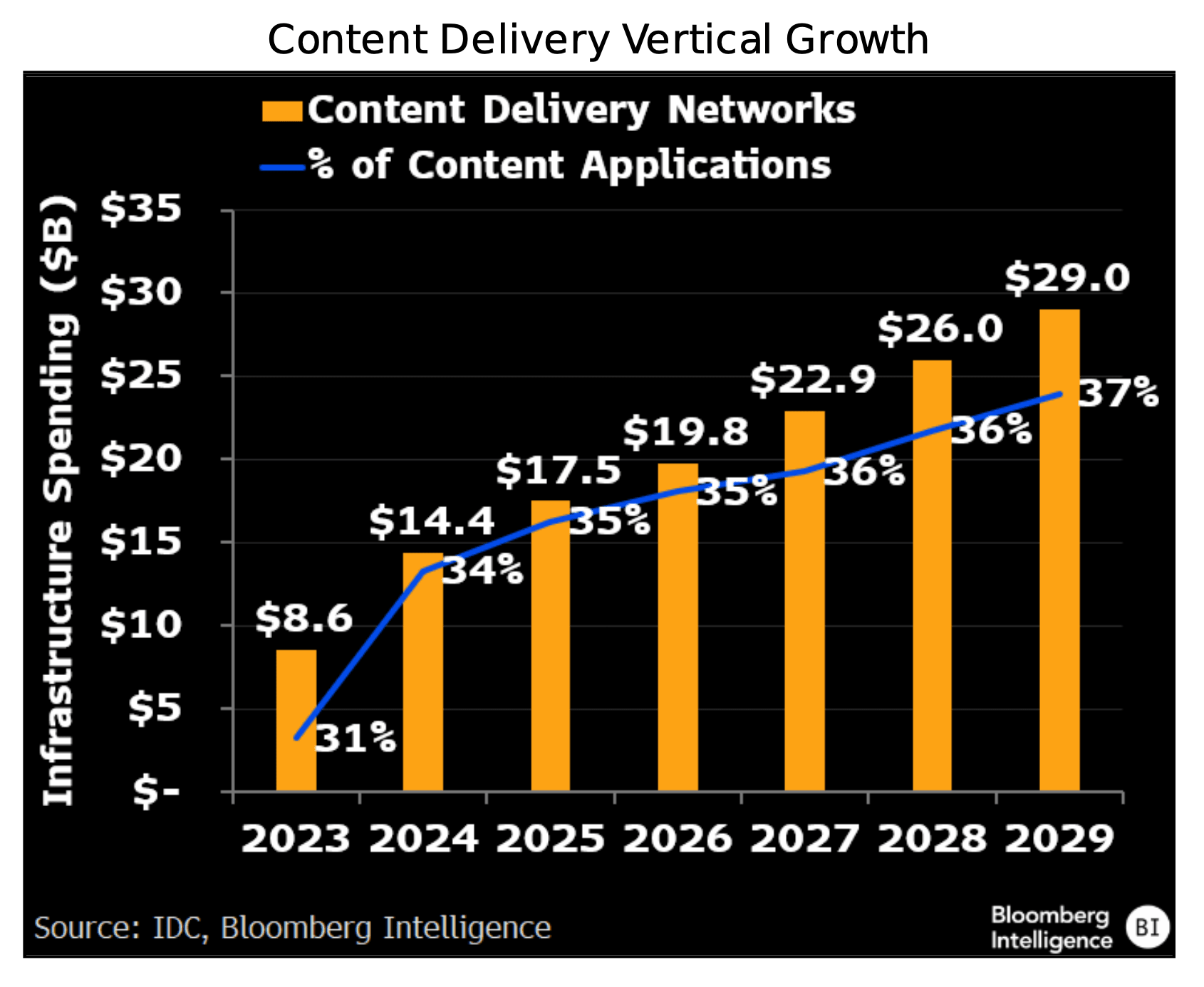

Content delivery, cybersecurity also benefit

As autonomous AI agents plug into business workflows, more mission-critical tasks will run in AI data centers. The rise of reasoning models like OpenAI’s o3 shifts the focus from simply having a model to ensuring that infrastructure is fast, efficient and reliable. That’s a tailwind for content delivery networks (CDNs) from companies like Cloudflare and cybersecurity providers such as Zscaler. Most companies seek to integrate internal knowledge databases and documentation with LLMs while relying on CDN and cybersecurity vendors to manage token consumption for LLM fine-tuning and inferencing.

© 2025 Bloomberg. All rights reserved.