Bloomberg’s AI & Quant Researchers Publish 2 Papers at NeurIPS 2025

December 02, 2025

At the Thirty-Ninth Annual Conference on Neural Information Processing Systems (NeurIPS 2025) in San Diego this week (December 2-7, 2025), researchers from Bloomberg’s AI Engineering group and the firm’s Quant Research team in the Office of the CTO are showcasing their expertise in artificial intelligence and machine learning by publishing one paper in the main conference and one paper in the Generative AI in Finance Workshop. Through these papers, the authors and their research collaborators highlight a variety of novel approaches to financial modeling, time-series forecasting, and pricing tasks.

In addition, AI engineer Sachith Sri Ram Kothur is delivering a sponsored talk about code generation and semantic parsing, during which he will discuss the potential of these technologies to democratize access and empower users to perform complex financial analyses and analytics.

We asked some of our authors and speakers to summarize their research and explain why their results were notable:

Semantic Parsing at Bloomberg

Sachith Sri Ram Kothur

Exhibitor Spot Talks Session 1 – Mezzanine Room 15AB (Tuesday, December 2 @ 1:30–1:42 PM PST)

Why did you choose to talk about Semantic Parsing as your topic?

Semantic parsing powers a range of AI applications at Bloomberg. The field has evolved over the years with the advent of LLMs, and so have the products we are building at Bloomberg to understand our clients’ needs more deeply and serve them more effectively.

What are you hoping attendees take away from your talk?

I’d like the audience to be aware of the many improvements we are making to the Bloomberg Terminal using AI, especially in the field of semantic parsing. I’d also like them to understand that Bloomberg is a very invested partner in, as well as a contributor to, open source developments in the field of semantic parsing. I’ll be discussing our recent research work and publications on the STARQA dataset, as well as post-hoc calibration of text-to-SQL LLMs.

Learning from Interval Targets

Rattana Pukdee* (Carnegie Mellon University), Ziqi Ke (Bloomberg), Chirag Gupta (Bloomberg)

San Diego Poster Session 4 – Exhibit Hall C,D,E #3014 (Thursday, December 4 @ 4:30-7:30 PM PST)

Please summarize your research. Why are your results notable?

Chirag: The problem we’re looking at in this paper is regression, which comes up when forecasting real-valued targets such as the prices of stocks or bonds. Traditionally, regression uses data in the form of features and target values. Our setup is harder: only intervals around the targets are available. This setup could arise when the target label is expensive to obtain or when there are inherent uncertainties related to the target. One example might occur when only bid and ask quotes are available for a financial instrument that has not recently traded at a concrete price.

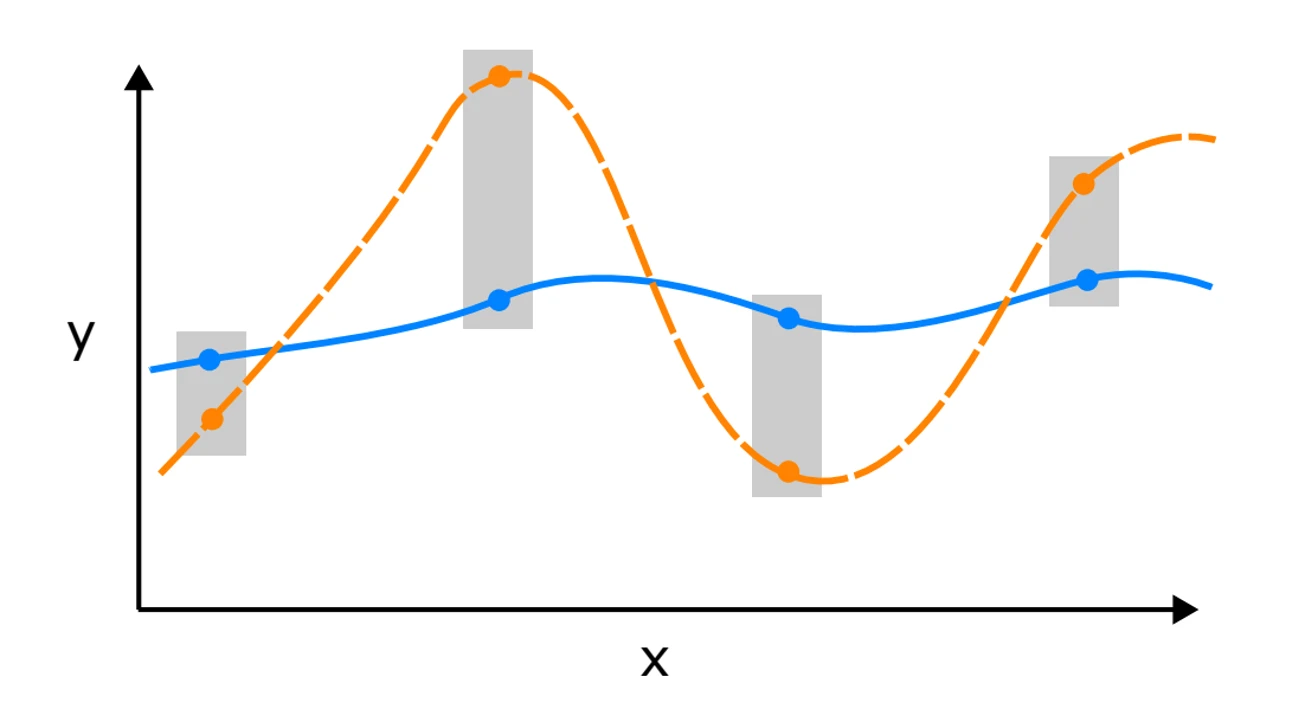

Here’s an example of such a setup. The X-axis is a feature, and the vertical gray bars are intervals that contain the true Y-axis target values at different X-values. The key challenge for learning such a curve is that there are multiple fits inside the gray bars. If one applies an “Occam’s Razor” principle in this setting, the solid blue line might appear to be a “simpler explanation” than the dashed orange line. We formulate this as a Lipschitz smoothness constraint on the curve and design two strategies for learning the curve.
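To make the setup concrete, here is a minimal Python sketch of one natural way to score a curve against interval targets. It is an illustration under stated assumptions, not the authors' implementation and not necessarily either of their two strategies. The loss is zero whenever a prediction falls inside its interval and grows with the squared distance to the nearest endpoint otherwise. The function names (`interval_projection_loss`, `mean_interval_loss`, `lipschitz_estimate`) and the bid/ask numbers are hypothetical and chosen only for illustration.

```python
from typing import Sequence


def interval_projection_loss(pred: float, low: float, high: float) -> float:
    """Squared distance from pred to the interval [low, high]; zero inside it."""
    if low > high:
        raise ValueError("interval lower bound exceeds upper bound")
    below = max(low - pred, 0.0)   # positive only when pred is under the interval
    above = max(pred - high, 0.0)  # positive only when pred is over the interval
    return (below + above) ** 2


def mean_interval_loss(
    preds: Sequence[float], lows: Sequence[float], highs: Sequence[float]
) -> float:
    """Average interval loss over a dataset."""
    total = sum(
        interval_projection_loss(p, lo, hi) for p, lo, hi in zip(preds, lows, highs)
    )
    return total / len(preds)


def lipschitz_estimate(xs: Sequence[float], preds: Sequence[float]) -> float:
    """Largest absolute slope between neighboring points, after sorting by x."""
    pairs = sorted(zip(xs, preds))
    slopes = [
        abs(y2 - y1) / (x2 - x1)
        for (x1, y1), (x2, y2) in zip(pairs, pairs[1:])
        if x2 != x1
    ]
    return max(slopes, default=0.0)


# Hypothetical bid/ask quotes acting as interval targets at four feature values.
xs = [0.0, 1.0, 2.0, 3.0]
bids = [99.0, 99.5, 100.0, 100.5]
asks = [101.0, 101.5, 102.0, 102.5]

# Two candidate curves that both stay inside every interval.
smooth = [100.0, 100.5, 101.0, 101.5]
wiggly = [99.0, 101.5, 100.0, 102.5]

smooth_loss = mean_interval_loss(smooth, bids, asks)
wiggly_loss = mean_interval_loss(wiggly, bids, asks)
smooth_slope = lipschitz_estimate(xs, smooth)
wiggly_slope = lipschitz_estimate(xs, wiggly)
```

Both candidate curves incur zero interval loss, so the intervals alone cannot choose between them. The slope estimate separates them, which is the intuition behind adding a smoothness constraint: among curves that fit the intervals, prefer the one with the smaller Lipschitz constant.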

The ability to learn from interval targets enables us to broaden the set of signals we can use for financial modeling, leading to more accurate pricing solutions that react faster to market movements.

How does your research advance the state-of-the-art in the field?

We validate our approach both theoretically and empirically. On the theoretical front, we relax some strong assumptions in prior works. Specifically, we do not need to assume that 1) the optimal regression function is inside the function class, so our analysis also covers the “unrealizable” setting, or that 2) the intervals uniquely define a single target value in the limit, so our analysis also permits a large “ambiguity degree.” Empirically, we perform extensive experiments on real-world datasets and show that our methods achieve state-of-the-art performance.

*work done during the author’s Bloomberg Data Science Ph.D. Fellowship


DELPHYNE: A Pre-Trained Model for General and Financial Time Series

Xueying Ding** (Carnegie Mellon University), Aakriti Mittal (Bloomberg) and Achintya Gopal

Generative AI in Finance Workshop – Upper Level Room 3 | Best Paper Award Session (Saturday, December 6 @ 11:35 AM – 12:35 PM PST)

Please summarize your research. Why are your results notable?

Aakriti: The era of transformers has changed how we forecast time series using data. However, financial analysis has seen limited success from these advancements due to the unique complexity of market data. In this work, we aim to address this gap. We first identify two core reasons for it: insufficient financial data in existing pre-training corpora and negative transfer across domains, where mixing data from different time-series domains actually degrades performance on downstream tasks.

To overcome these issues, we introduce Delphyne, a pre-trained model specifically designed for financial time-series tasks. We use Bloomberg’s financial data, along with general time-series datasets for training, and incorporate several architectural modifications to handle the continuous, noisy, multivariate and multifrequency nature of financial data.

Delphyne achieves superior performance on various financial tasks, including volatility modeling and nowcasting, and also shows competitive performance on a range of time-series tasks on publicly available datasets.

How does your research advance the state-of-the-art in the field of time-series forecasting?

Our research demonstrates the presence of negative transfer in time-series data, especially when models are pre-trained on data from different domains, which contrasts with what is seen in LLMs used for language tasks. It emphasizes that the strength of a pre-trained time-series model lies in its capability to quickly adapt (fine-tune) to new downstream tasks by efficiently “unlearning” pre-training biases and “adapting” to the specific characteristics of the downstream task based on the diverse domains encountered during pre-training. Therefore, adding financial time series to Delphyne’s pre-training corpora significantly boosts its performance on various downstream financial tasks, especially after fine-tuning.