Bloomberg’s AI Group Publishes 3 Research Papers at NAACL 2024

June 17, 2024

At the 2024 Annual Conference of the North American Chapter of the Association for Computational Linguistics (NAACL 2024) in Mexico City, researchers from Bloomberg’s AI Engineering group are showcasing their expertise in natural language processing (NLP) and computational linguistics by publishing three papers across the conference’s Industry Track and in Findings of the Association for Computational Linguistics: NAACL 2024. Through these papers, the authors and their collaborators highlight a variety of NLP applications, novel approaches and improved models used in key tasks, and other advances to the state of the art in computational linguistics.

We asked some of our authors to summarize their research and explain why their results were notable:

Modeling and Detecting Company Risks from News

Jiaxin Pei (University of Michigan, Ann Arbor), Soumya Vadlamannati (Bloomberg), Liang-Kang Huang (Bloomberg), Daniel Preoţiuc-Pietro (Bloomberg), Xinyu Hua (Bloomberg)

Oral Presentations (Tuesday, June 18 @ 9:00 AM–10:30 AM CST)

Please summarize your research. Why are your results notable?

Soumya: In his book “Risk Assessment: Theory, Methods, and Applications,” Marvin Rausand, a Professor Emeritus at Norwegian University of Science and Technology (NTNU) and an expert on reliability and risk analysis, explained that, in finance and corporate operations, “risks” refer to the factors that may harm a company or cause it to fail. Identifying risks associated with a specific company or industry is important to financial professionals. Publicly listed companies are required to disclose risks through their financial filings (e.g., 10-K, 10-Q), annual reports, and shareholder meetings. But these formal disclosures occur only periodically. Computational modeling of risk factors could inform analysts, investors, and policymakers in a more timely manner.

Our work introduces a computational framework to detect such risks from news articles. We first propose a new taxonomy of seven distinct risk factors: (1) Supply Chain and Product, (2) People and Management, (3) Finance, (4) Legal and Regulations, (5) Macro, (6) Markets and Consumers, and (7) Competition. Using this schema, we sample and annotate 744 news articles from Bloomberg News. Given this dataset, we benchmark common machine learning models, including state-of-the-art LLMs (e.g., Llama 2). Our experiments show that fine-tuning transformers on domain-specific data yields significantly better performance.

Finally, we apply the best model to analyze more than 277,000 Bloomberg News articles published between 2018 and 2022. Our results show that modeling company risks can not only reveal important signals about companies’ operations, but also indicate macro-level risks in society.

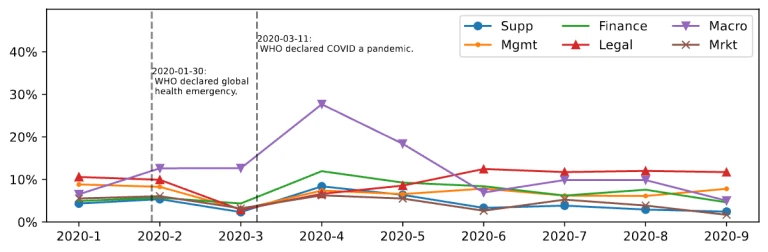

For example, in the figure below, we see that COVID introduced nearly all types of risk factors for companies.

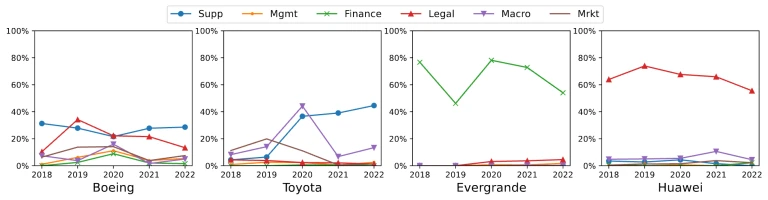

We also observe different risks for companies in different sectors and categories (private vs. publicly traded).
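As a rough illustration of the bookkeeping behind this kind of analysis, the sketch below encodes the seven-category taxonomy and computes the share of each risk factor per year. It is not the authors' pipeline: the `risk_share_by_year` helper and the toy predictions are hypothetical, and it assumes one predicted risk factor per article, which may not match the actual labeling scheme.

```python
from collections import Counter
from enum import Enum


class RiskFactor(Enum):
    """The seven risk categories named in the taxonomy."""
    SUPPLY_CHAIN_AND_PRODUCT = "Supply Chain and Product"
    PEOPLE_AND_MANAGEMENT = "People and Management"
    FINANCE = "Finance"
    LEGAL_AND_REGULATIONS = "Legal and Regulations"
    MACRO = "Macro"
    MARKETS_AND_CONSUMERS = "Markets and Consumers"
    COMPETITION = "Competition"


def risk_share_by_year(predictions):
    """Return {year: {RiskFactor: share}} from (year, RiskFactor) pairs.

    `predictions` is a hypothetical stand-in for model output: one
    (publication year, predicted risk factor) pair per article.
    """
    counts = {}
    for year, factor in predictions:
        counts.setdefault(year, Counter())[factor] += 1
    return {
        year: {factor: n / sum(c.values()) for factor, n in c.items()}
        for year, c in counts.items()
    }


# Toy, made-up predictions purely for illustration (not real data).
toy_predictions = [
    (2019, RiskFactor.FINANCE),
    (2019, RiskFactor.COMPETITION),
    (2020, RiskFactor.MACRO),
    (2020, RiskFactor.MACRO),
    (2020, RiskFactor.SUPPLY_CHAIN_AND_PRODUCT),
    (2020, RiskFactor.FINANCE),
]
shares = risk_share_by_year(toy_predictions)
for year in sorted(shares):
    for factor, share in shares[year].items():
        print(year, factor.value, round(share, 2))
```

Grouping by sector or by public versus private status would follow the same pattern, with a different key in place of the year.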

How does your research advance the state-of-the-art in the field of natural language processing?

Existing research on risk prediction focuses on company filings, which are limited to public companies and are limited in frequency (e.g., quarterly). Our framework targets news articles, which have neither of these limitations and provide more diverse perspectives. Following a manual inspection of hundreds of news articles, we propose a new taxonomy of risk categories by combining existing literature with Bloomberg’s collective domain knowledge about the financial markets. We also differentiate our task from sentiment analysis by showing that positive and neutral news can also mention companies’ risk factors.

We benchmark models of varying sizes and complexities, ranging from simple classifiers with linguistic features to state-of-the-art LLMs with prompt engineering. We show that fine-tuning a smaller Transformer model pre-trained on Bloomberg News far outperforms general LLMs that lack in-domain fine-tuning. The gain in performance through fine-tuning also shows that the task requires knowledge not obtained in typical instruction-tuning and pre-training datasets and can benefit from further exploration. Finally, as illustrated in the charts above, the extensive analysis we performed on Bloomberg News articles uncovered some interesting patterns in the distribution of risk factors across companies and industries.

Leveraging Contextual Information for Effective Entity Salience Detection

Rajarshi Bhowmik, Marco Ponza, Atharva Tendle, Anant Gupta, Rebecca Jiang, Xingyu Lu, Qian Zhao, Daniel Preoţiuc-Pietro

Poster Session 6 (Wednesday, June 19 @ 11:00 AM–12:30 PM CST)

Please summarize your research. Why are your results notable?

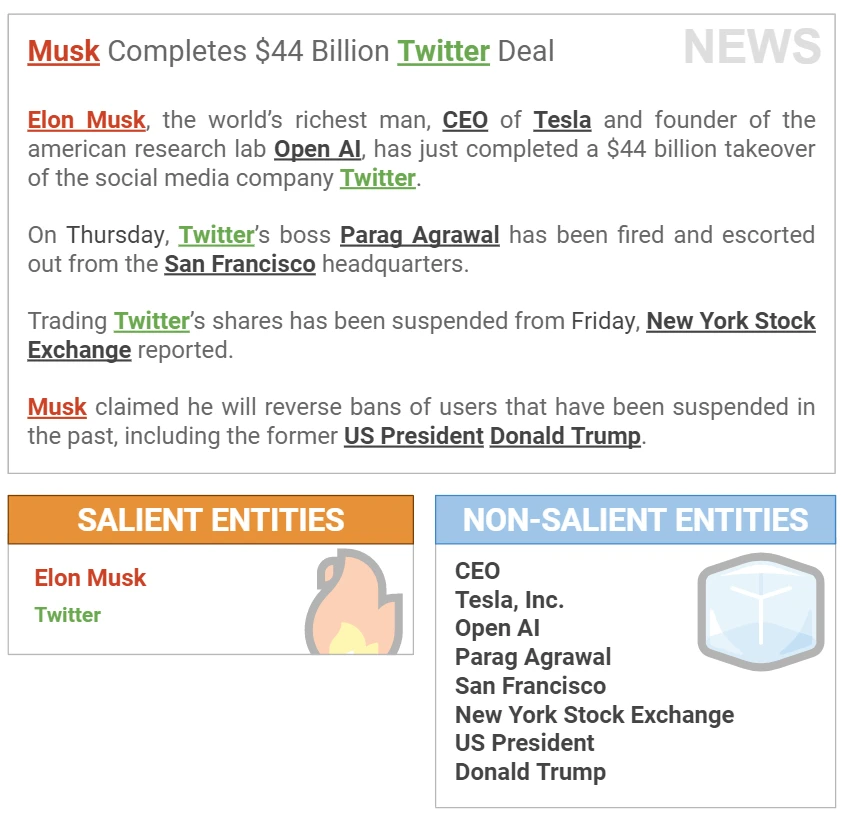

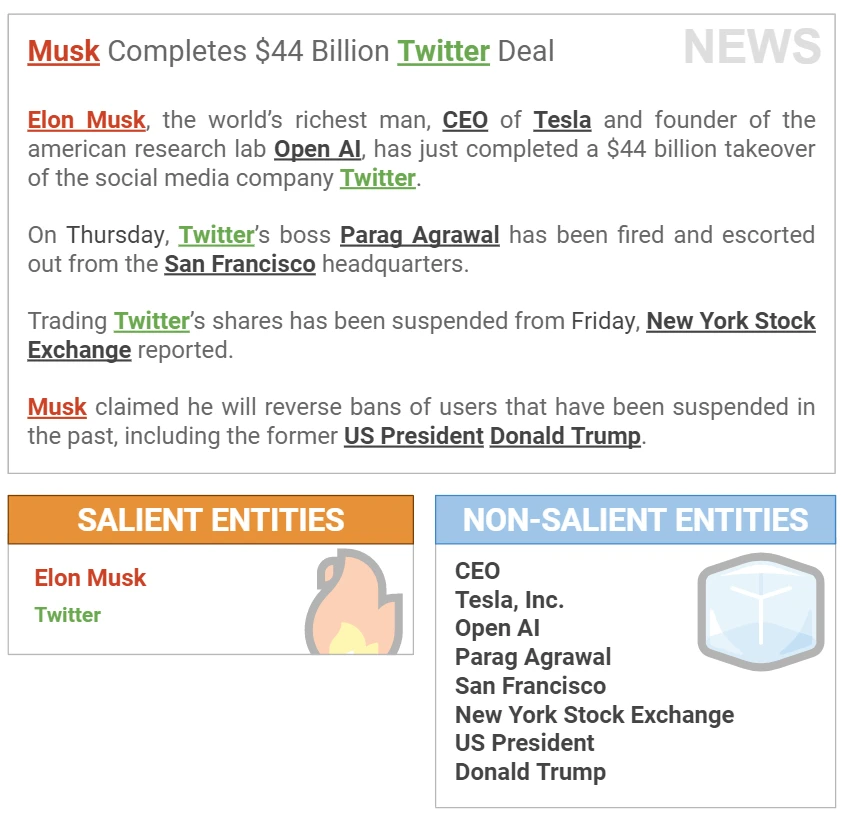

Rajarshi: Entities are ubiquitous in text documents such as news articles. However, the content of each document often revolves around a handful of entities, while the other entities mentioned play only peripheral roles. Often called salient entities, these central entities are crucial for understanding the content from a reader’s perspective. Precise automatic detection of salient entities can potentially improve many downstream applications, including filtering out documents in which a searched entity is non-salient to improve the entity search experience, summarizing a document with respect to its salient entities, and analyzing trends among salient entities.

Our work aims to extract these salient entities from a document using Transformer-based language models. We conducted a comprehensive benchmarking on four publicly available datasets and showed the superior performance of our model compared to existing models in the published literature. Interestingly, we show that zero-shot prompting of instruction-tuned LLMs yields significantly inferior results. Our observation highlights the unique and complex nature of the entity salience detection task, which differs from the tasks on which these large language models were instruction-tuned.


How does your research advance the state-of-the-art in the field of natural language processing?

Prior work on salient entity detection mainly focused on machine learning models that require heavy feature engineering. We show that fine-tuning medium-sized language models with a cross-encoder style architecture yields substantial performance gains over feature engineering approaches.
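In general terms, a cross-encoder feeds both items being compared through the model together as a single sequence, so the model can attend across them. The sketch below shows one plausible way to pair a target entity with its document before tokenization. The `SalienceExample` type, the `build_cross_encoder_input` helper, the special tokens, and the word-level truncation are all assumptions for illustration; the input format used in the paper may differ.

```python
from dataclasses import dataclass


@dataclass
class SalienceExample:
    entity: str    # name of the target entity
    document: str  # text of the document mentioning the entity


def build_cross_encoder_input(example, cls="[CLS]", sep="[SEP]", max_words=50):
    """Join the entity and the document into one paired sequence.

    A fine-tuned encoder would read this whole sequence at once and
    output a salient / non-salient score for the entity. Truncating by
    whitespace-separated words is a simplification of real tokenization.
    """
    words = example.document.split()[:max_words]
    return f"{cls} {example.entity} {sep} {' '.join(words)} {sep}"


example = SalienceExample(
    entity="Bloomberg",
    document="Bloomberg researchers presented three papers at NAACL 2024 in Mexico City.",
)
paired_text = build_cross_encoder_input(example, max_words=5)
print(paired_text)
```

Scoring every candidate entity this way requires one forward pass per entity and document pair, which is the usual cost of a cross-encoder compared with encoding each side separately.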

Another key contribution of our work is establishing a new comprehensive benchmark of four publicly available datasets. By establishing this benchmark, we pave the way for future research to evaluate models against a consistent baseline. This will facilitate progress in this core natural language processing task.

We also perform stratified analysis of our model’s performance across several critical features. This in-depth analysis provides insights into the model’s behavior and is crucial for future research directions.


Non-contrastive sentence representations via self-supervision

Duccio Pappadopulo and Marco Farina

Poster Session 6 (Wednesday, June 19 @ 11:00 AM–12:30 PM CST)

Please summarize your research. Why are your results notable?

Marco: Encoding sentences into fixed-length numerical vectors is a fundamental technique in NLP. Text embeddings can be used to measure the semantic similarity between sentences and as features in a variety of algorithms aimed at solving tasks like retrieval, clustering, and classification.

Self-supervised representation learning is widely used to learn effective sentence embeddings. One of the problems that such algorithms need to solve is to avoid the collapse of the learned representation to a single constant vector. Methods that avoid collapse have been classified as:

- Sample contrastive (like SimCSE), in which collapse is avoided by penalizing the similarity of pairs corresponding to different data points.

- Dimension contrastive (like Barlow Twins and VICReg), in which collapse is avoided by decorrelating the embeddings across their dimensions or by requiring a minimal value for their variance.
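To make the distinction concrete, here is a minimal pure-Python sketch of one objective from each family: an InfoNCE-style loss of the kind SimCSE uses, and a Barlow Twins-style loss. It is a simplified illustration, not the paper's training code. The toy embeddings, the function names, and the default values for `temperature` and `off_diag_weight` are assumptions, and a real implementation would use an array library with automatic differentiation.

```python
import math


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def info_nce_loss(view_a, view_b, temperature=0.05):
    """Sample contrastive: row i of view_a should match row i of view_b
    and be dissimilar to every other row (the other data points)."""
    total = 0.0
    for i, anchor in enumerate(view_a):
        logits = [cosine(anchor, other) / temperature for other in view_b]
        total += math.log(sum(math.exp(x) for x in logits)) - logits[i]
    return total / len(view_a)


def standardize_columns(batch, eps=1e-8):
    """Scale each embedding dimension to zero mean, unit variance over the batch."""
    n, d = len(batch), len(batch[0])
    out = [[0.0] * d for _ in range(n)]
    for j in range(d):
        col = [row[j] for row in batch]
        mean = sum(col) / n
        std = max(math.sqrt(sum((v - mean) ** 2 for v in col) / n), eps)
        for i in range(n):
            out[i][j] = (col[i] - mean) / std
    return out


def barlow_twins_loss(view_a, view_b, off_diag_weight=0.005):
    """Dimension contrastive: push the cross-correlation matrix between
    the two views toward the identity (diagonal 1, off-diagonal 0)."""
    za, zb = standardize_columns(view_a), standardize_columns(view_b)
    n, d = len(za), len(za[0])
    loss = 0.0
    for i in range(d):
        for j in range(d):
            c_ij = sum(za[b][i] * zb[b][j] for b in range(n)) / n
            loss += (1.0 - c_ij) ** 2 if i == j else off_diag_weight * c_ij ** 2
    return loss


# Two slightly different "views" of four toy sentences, plus a collapsed
# encoder that maps every sentence to the same vector.
view_a = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
view_b = [[0.9, 0.1], [0.1, 0.9], [-0.9, -0.1], [-0.1, -0.9]]
collapsed = [[1.0, 1.0]] * 4

print(info_nce_loss(view_a, view_b), info_nce_loss(collapsed, collapsed))
print(barlow_twins_loss(view_a, view_b), barlow_twins_loss(collapsed, collapsed))
```

Both losses are lower for the well-spread embeddings than for the collapsed ones, but for different reasons: the first compares data points within the batch, while the second only looks at statistics of the embedding dimensions.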

Our paper conducts a thorough comparison of these two classes of methods for learning unsupervised text embeddings. We explore different augmentation strategies and evaluate the performance of these methods on downstream tasks. Our findings reveal that dimension contrastive techniques, particularly Barlow Twins, perform competitively with or better than SimCSE, highlighting the importance of dimension contrastive methods in NLP tasks. In addition, we provide insights into two metrics (alignment and uniformity) that are commonly considered when analyzing sample contrastive embedding techniques.
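For readers unfamiliar with the alignment and uniformity metrics, the sketch below computes them using the commonly cited definitions from Wang and Isola (2020): alignment is the mean squared distance between normalized embeddings of positive pairs, and uniformity is the log of the mean Gaussian potential over all pairs of normalized embeddings. The toy vectors and the choice `t=2.0` are assumptions for illustration, and the paper's exact evaluation setup may differ.

```python
import math
from itertools import combinations


def l2_normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def sq_dist(u, v):
    return sum((a - b) ** 2 for a, b in zip(u, v))


def alignment(positive_pairs):
    """Mean squared distance between positive pairs (lower is better)."""
    dists = [sq_dist(l2_normalize(u), l2_normalize(v)) for u, v in positive_pairs]
    return sum(dists) / len(dists)


def uniformity(embeddings, t=2.0):
    """Log mean Gaussian potential over distinct pairs (lower is more spread out)."""
    unit = [l2_normalize(e) for e in embeddings]
    potentials = [math.exp(-t * sq_dist(u, v)) for u, v in combinations(unit, 2)]
    return math.log(sum(potentials) / len(potentials))


# Toy embeddings: four well-spread points versus a fully collapsed encoder.
spread = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
collapsed = [[1.0, 1.0]] * 4

print(uniformity(spread), uniformity(collapsed))
print(alignment([([1.0, 0.0], [0.9, 0.1])]))
```

A collapsed encoder scores perfectly on alignment, since every positive pair maps to the same point, but poorly on uniformity, which is why the two metrics are usually read together.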

How does your research advance the state-of-the-art in the field of natural language processing?

Our paper advances the state-of-the-art in the field of NLP by examining the efficacy of dimension contrastive methods to learn text embeddings. While these techniques have been used in computer vision, they are almost unexplored in NLP.

We show empirically that dimension contrastive techniques, such as Barlow Twins, lead to competitive results when compared with sample contrastive ones like SimCSE. In this way, our paper expands the toolbox of self-supervised learning objectives available to NLP researchers and practitioners. Furthermore, our exploration of different augmentation strategies contributes to a deeper understanding of how to effectively leverage unsupervised textual representation learning.