Bloomberg’s AI Engineering Group Publishes 4 NLP Research Papers at AACL-IJCNLP 2022

November 20, 2022

During the 2nd Conference of the Asia-Pacific Chapter of the Association for Computational Linguistics and the 12th International Joint Conference on Natural Language Processing (AACL-IJCNLP 2022) online this week, researchers from Bloomberg’s AI Engineering Group are showcasing their expertise in natural language processing (NLP) and computational linguistics by publishing 4 papers across the main conference and the 1st Workshop on Scaling Up Multilingual Evaluation (SUMEval 2022). Through these papers, the authors and their collaborators highlight a variety of NLP applications, novel approaches and improved models used in key tasks, and other advances to the state-of-the-art in the field of computational linguistics.

We asked some of the authors to summarize their research and explain why the results were notable:

Extractive Entity-Centric Summarization as Sentence Selection using Bi-Encoders

Ella Hofmann-Coyle (Bloomberg), Mayank Kulkarni (work done while at Bloomberg), Lingjue (Jane) Xie (Bloomberg), Mounica Maddela (Georgia Institute of Technology) and Daniel Preoţiuc-Pietro (Bloomberg)

Poster Session 1 (Tuesday, November 22, 2022 @ 12:20-3:00 PM CST (Taipei))

Please summarize your research. Why are your results notable?

Ella: Summarization involves producing a concise, coherent and factual synopsis of a text document. Summarization can be controlled for different characteristics or to have a specific focus. One such focus is to produce a summary with respect to a particular entity.

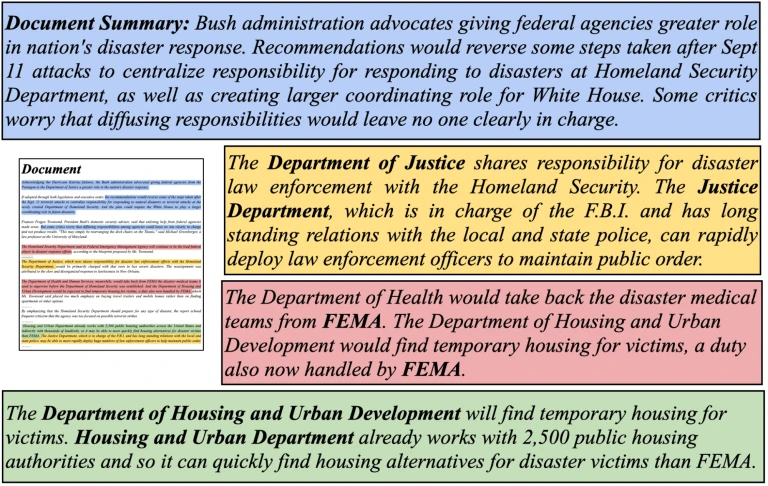

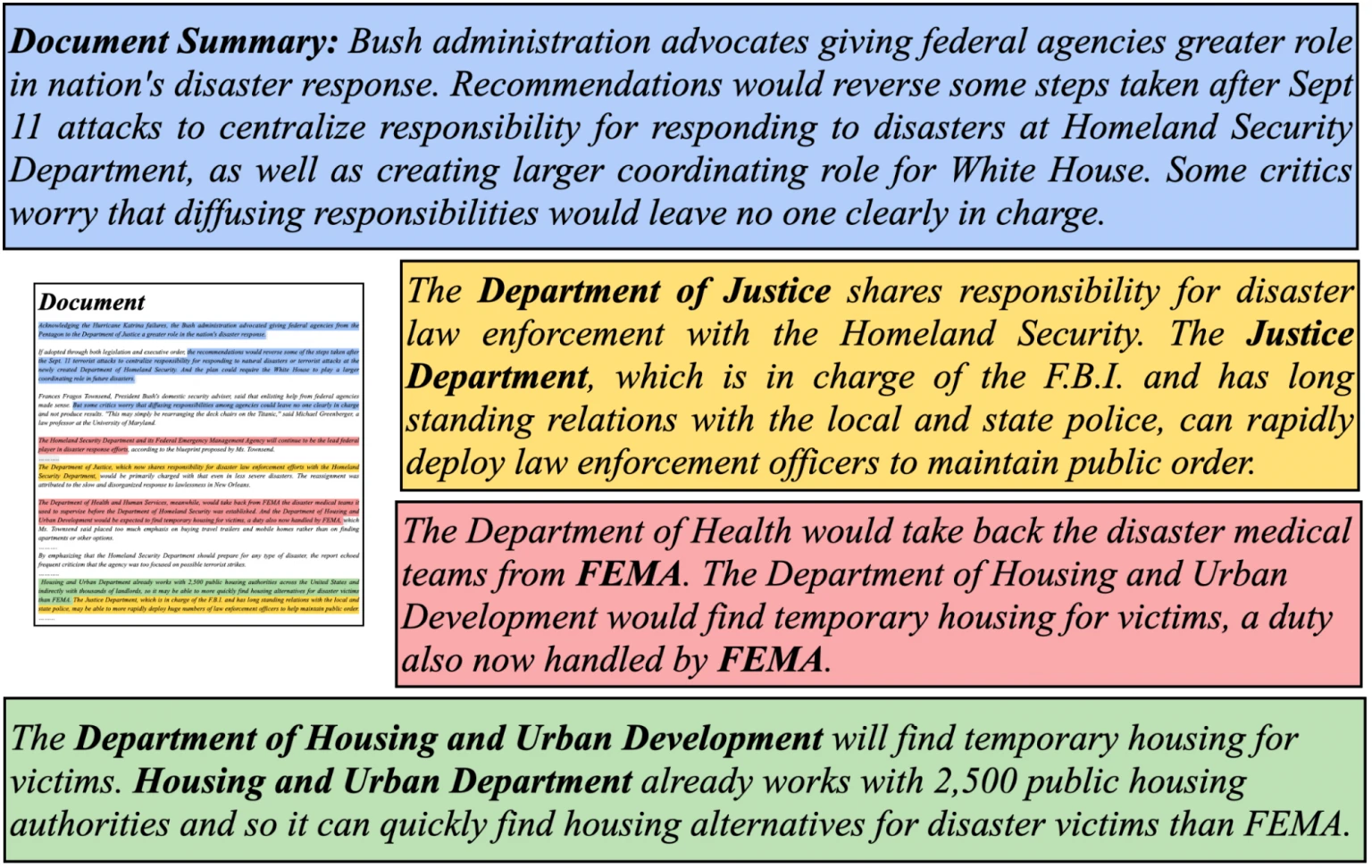

We focus on extractive summarization, which builds the summary from content taken directly from the source document. This is in contrast to abstractive summarization, which can generate a summary from scratch.

Previously, extractive entity-centric summarization was a relatively unexplored task due to the lack of entity-centric sentence-level annotations. We addressed this gap in our past work at ACL 2022, where we introduced the EntSUM dataset, the first dataset to provide entity-level sentence salience and summary sentence information. Building upon EntSUM, we frame entity-centric summarization as a sentence selection problem. We propose a new state-of-the-art method for this task that uses a bi-encoder architecture for sentence selection: the model takes as input the target entity and each sentence from the document, one at a time.
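To make the bi-encoder idea concrete, here is a minimal sketch of the scoring loop: the entity and each sentence are encoded independently, each pair is scored by cosine similarity, and the top-scoring sentences are kept in document order. The `encode` function is a hypothetical bag-of-words stand-in for the pretrained Transformer encoders used in the paper, and `select_sentences` and its `k` parameter are illustrative names, not the authors' code.

```python
import math
from collections import Counter


def encode(text: str) -> Counter:
    """Hypothetical stand-in for a Transformer encoder: bag-of-words counts."""
    cleaned = text.lower().replace(".", " ").replace(",", " ")
    return Counter(cleaned.split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def select_sentences(entity: str, sentences: list[str], k: int = 3) -> list[str]:
    """Score each sentence against the entity independently; keep the top k."""
    # Encode the target entity once; its encoding never sees the sentences.
    entity_vec = encode(entity)
    # Encode each sentence on its own (one at a time), then score the pair.
    scored = [(cosine(entity_vec, encode(s)), i) for i, s in enumerate(sentences)]
    # Highest score first; ties broken by earlier position in the document.
    top = sorted(scored, key=lambda pair: (-pair[0], pair[1]))[:k]
    # Return the selected sentences in their original document order.
    return [sentences[i] for _, i in sorted(top, key=lambda pair: pair[1])]
```

Because the entity and the sentences are encoded separately, sentence encodings could in principle be computed once and reused for every entity in a document, which is a common reason to prefer a bi-encoder over a cross-encoder.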

How does your research advance the state-of-the-art in the field of natural language processing?

Jane: Our paper presents the first in-depth study of extractive entity-centric summarization methods and establishes a new benchmark for this task. Our proposed methods, which use the bi-encoder framework with pretrained Transformer-based models, substantially outperform previous controllable extractive summarization methods and competitive heuristics, such as selecting the first three sentences about the entity in a document as its summary. Our analysis of model behavior points to directions for future work: how best to build entity representations, custom loss functions for this task, and joint sentence selection across the entire document.
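The "first three sentences about the entity" heuristic can be sketched in a few lines. This assumes a sentence is about the entity when it contains the entity name as a case-insensitive substring; the paper's mention detection may be more sophisticated, and `entity_lead3` is a hypothetical name.

```python
def entity_lead3(entity: str, sentences: list[str], n: int = 3) -> list[str]:
    """Return the first n sentences that mention the entity.

    Assumption: a mention is a case-insensitive substring match on the
    entity name, which is cruder than real entity-mention detection.
    """
    needle = entity.lower()
    # Keep document order; only sentences mentioning the entity qualify.
    mentions = [s for s in sentences if needle in s.lower()]
    return mentions[:n]
```

Simple as it is, a positional baseline like this tends to be strong for news, since key facts about an entity often appear early.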

We hope our work will inspire more research in entity-centric summarization that can facilitate applications such as faceted news retrieval or downstream tasks like entity-centric sentiment prediction. Extractive summarization may be preferred for certain applications, as it is less likely to produce non-factual summaries. Its evaluation also suffers less from the issues that complicate the evaluation of abstractive summarization methods.


Cross-lingual Few-Shot Learning on Unseen Languages

Genta Indra Winata (Bloomberg), Shijie Wu (Bloomberg), Mayank Kulkarni (work done while at Bloomberg), Thamar Solorio (Bloomberg) and Daniel Preoţiuc-Pietro (Bloomberg)

Poster Session 1 (Tuesday, November 22, 2022 @ 12:20-3:00 PM CST (Taipei))

Please summarize your research. Why are your results notable?

Genta: Large pre-trained language models (LMs) have demonstrated good performance on downstream tasks. Recent research on few-shot learning has proposed methods that explicitly aim to improve performance when only a few annotated data points are available to perform a task in low-resource languages. However, these methods have mostly been studied on relatively resource-rich languages, for which at least enough unlabeled data is available to include them when pre-training a multilingual language model.

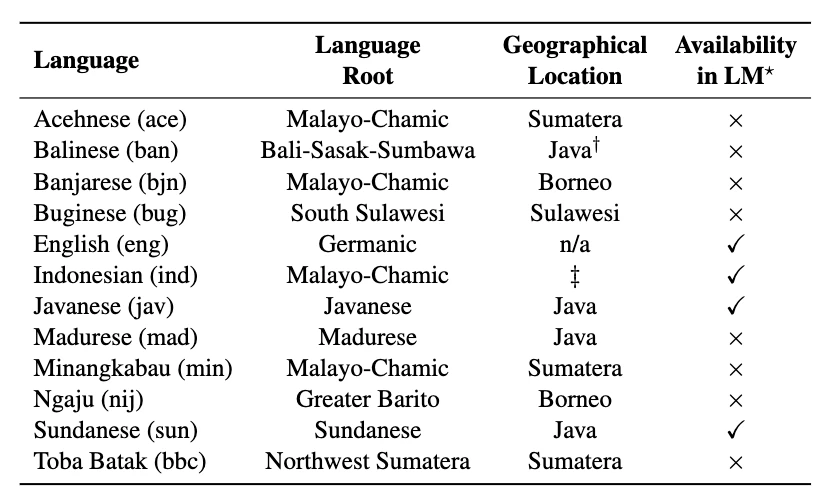

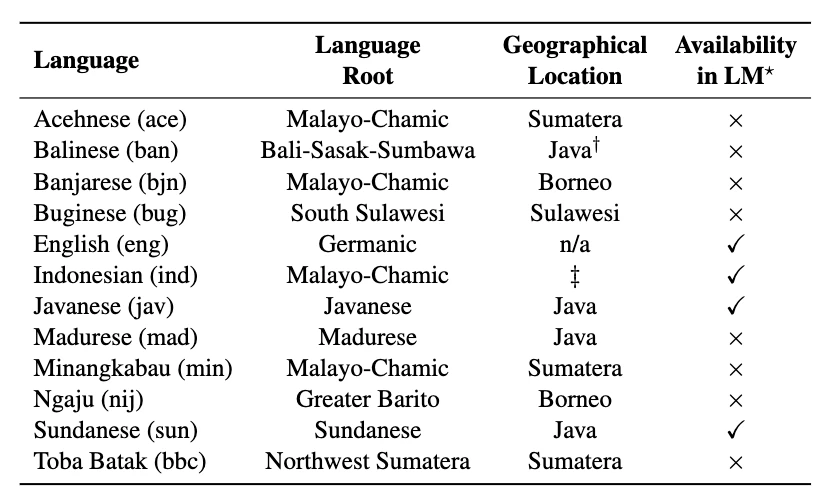

In this paper, we explore the problem of cross-lingual transfer in unseen languages, where no unlabeled data is available for pre-training a model and different languages are used in training and evaluation. As shown in Table 1, we use a downstream sentiment analysis task across 12 diverse languages, including eight unseen languages from the NusaX dataset, to analyze the effectiveness of several few-shot learning strategies across the three major types of model architectures and their learning dynamics. Our findings contribute to the body of knowledge on cross-lingual models for low-resource settings that is paramount to increasing coverage, diversity, and equity in access to NLP technology.

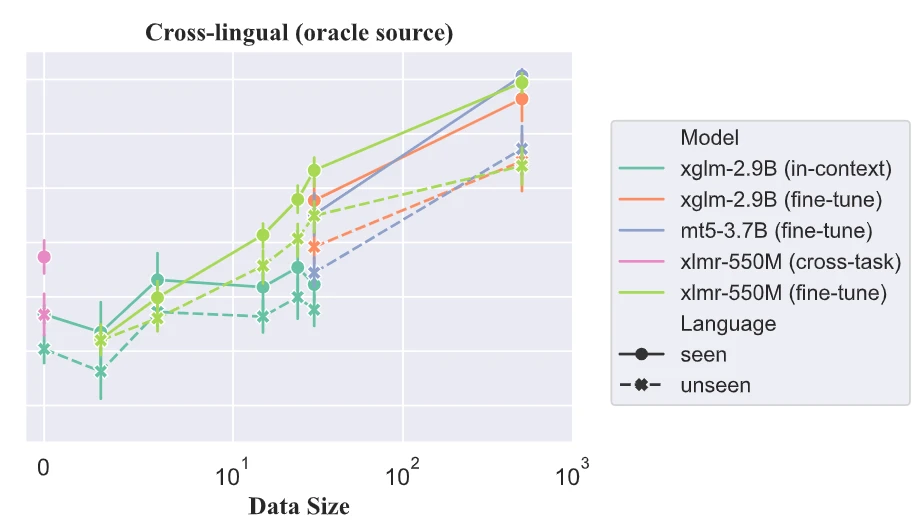

We compare different model architectures and find that the encoder-only model, XLM-R, gives the best downstream task performance, as shown in Figure 1.

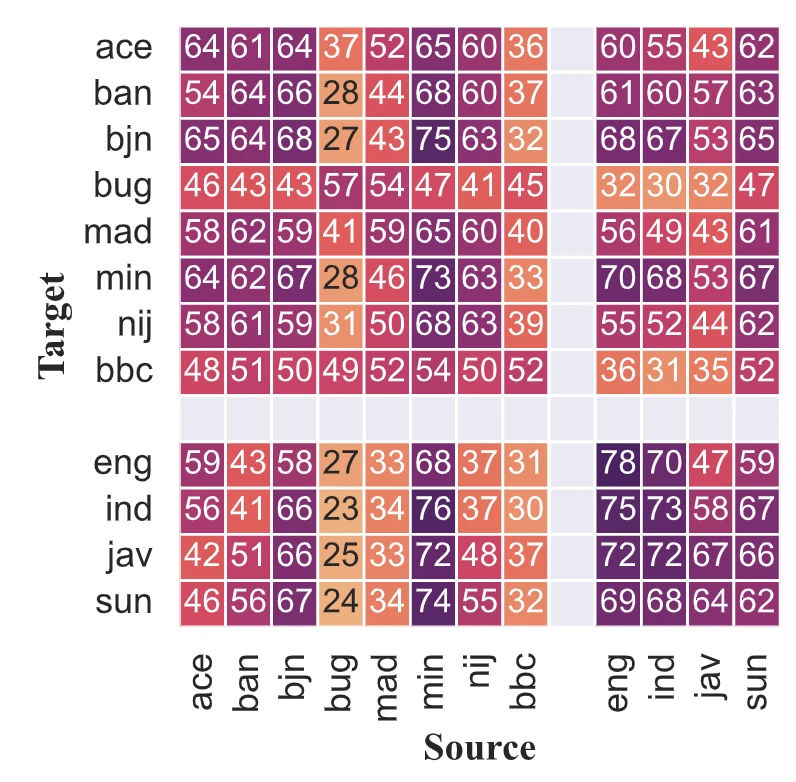

We also compare strategies for selecting languages for transfer, and contrast findings for languages seen in pre-training with those for unseen languages. Figure 2 provides a window into the performance metrics in the 30-shot setting on XLM-R large. In general, XLM-R can still adapt to unseen Indonesian languages because they share some subwords with the languages seen in pre-training.

How does your research advance the state-of-the-art in the field of natural language processing?

We present the first comprehensive study to measure the effectiveness of few-shot in-context learning and fine-tuning approaches with multilingual LMs on languages that were never seen during pre-training, a previously unexplored setting. We find that fine-tuning the multilingual encoder model (i.e., XLM-R) is generally the most effective method when more than 15 samples are available; otherwise, zero-shot cross-task is preferable. We also observe that in-context learning has relatively higher variance than fine-tuning, and that mixing multiple source languages is a promising approach when the number of training examples in each language is limited.
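For readers unfamiliar with few-shot in-context learning, the sketch below shows one way a k-shot sentiment prompt could draw its demonstrations from several source languages. The prompt template, the round-robin sampling, and the names `build_icl_prompt` and `pools` are assumptions for illustration, not the setup used in the paper.

```python
import random


def build_icl_prompt(pools: dict[str, list[tuple[str, str]]], query: str,
                     k: int, seed: int = 0) -> str:
    """Build a k-shot sentiment prompt whose demonstrations mix source languages.

    `pools` maps a source-language code to (text, label) pairs. The
    "Text: ... / Sentiment: ..." template is a hypothetical choice.
    """
    rng = random.Random(seed)
    # Shuffle a copy of each language's pool so demonstrations are sampled randomly.
    shuffled = {lang: rng.sample(ex, len(ex)) for lang, ex in pools.items()}
    langs = sorted(shuffled)
    demos = []
    i = 0
    # Draw round-robin across languages until k demonstrations are collected
    # or every pool is exhausted.
    while len(demos) < k and any(shuffled.values()):
        lang = langs[i % len(langs)]
        if shuffled[lang]:
            demos.append(shuffled[lang].pop())
        i += 1
    blocks = [f"Text: {text}\nSentiment: {label}" for text, label in demos]
    # The query comes last, with the label left for the model to complete.
    blocks.append(f"Text: {query}\nSentiment:")
    return "\n\n".join(blocks)
```

No weights are updated here: the model only conditions on the prompt, which is one reason in-context results can vary more with the choice and order of demonstrations than fine-tuning does.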

We hope this research will benefit practitioners and researchers who apply pre-trained multilingual LMs to new languages that large LMs did not originally support, which is paramount to increasing coverage, diversity, and equity in access to NLP technology.

Enhancing Tabular Reasoning with Pattern Exploiting Training

Abhilash Reddy Shankarampeta (IIT Guwahati), Vivek Gupta (University of Utah) and Shuo Zhang (Bloomberg)

Poster Session 1 (Tuesday, November 22, 2022 @ 12:20-3:00 PM CST (Taipei))

Please summarize your research. Why are your results notable?

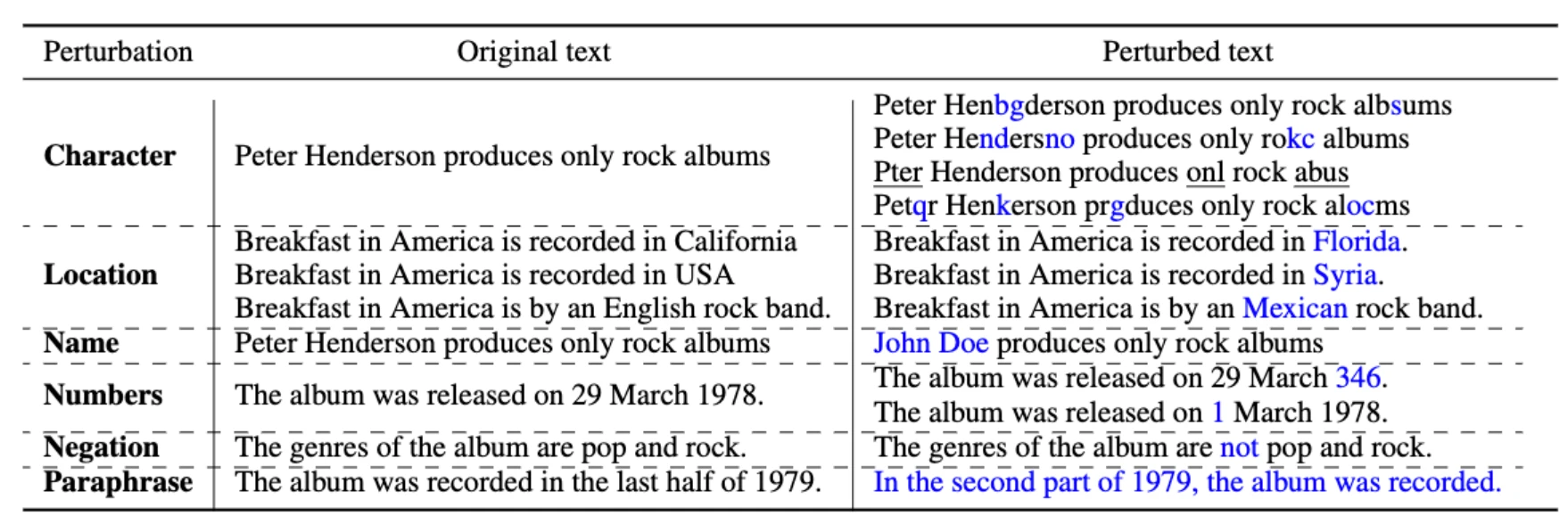

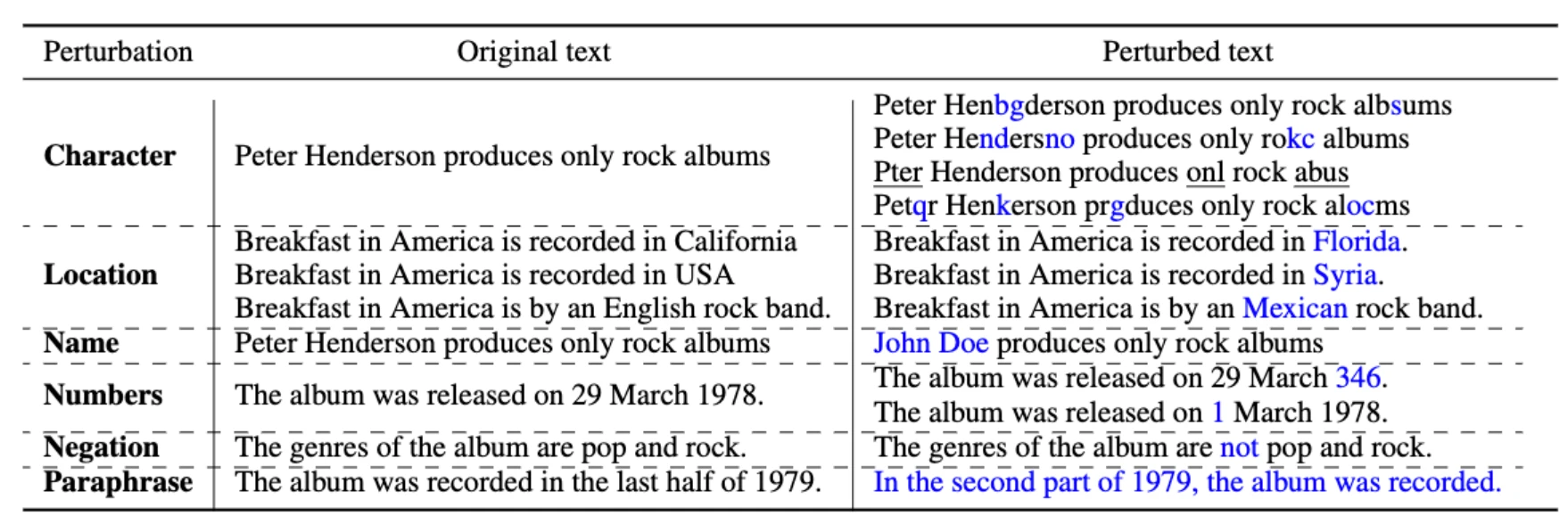

Shuo: Recent approaches based on pre-trained language models have achieved higher performance on tabular tasks (such as tabular reasoning), but they exhibit inherent issues, including not using the proper evidence and making inconsistent predictions across inputs when reasoning over tabular data. Through this study, we improve the pre-existing knowledge and reasoning capabilities of these tabular reasoning models by applying Pattern-Exploiting Training (PET), i.e., strategic masked language modeling (MLM), to pre-trained language models. Compared to current baselines, our improved model demonstrates better comprehension of knowledge facts and tabular reasoning. We also show that such models are superior on the downstream tabular inference tasks of INFOTABS, and we demonstrate our model's robustness to adversarial sets produced by various character- and word-level perturbations. Example perturbations are illustrated in Table 1.
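As an illustration of what character- and word-level perturbations look like in code, the sketch below applies either adjacent-character swaps or word deletions at a given rate. These two operations, and the names `char_swap` and `perturb`, are hypothetical stand-ins; the perturbation types actually used in the paper are the ones listed in Table 1.

```python
import random


def char_swap(word: str, rng: random.Random) -> str:
    """Character-level perturbation: swap two adjacent characters."""
    if len(word) < 2:
        return word
    i = rng.randrange(len(word) - 1)
    return word[:i] + word[i + 1] + word[i] + word[i + 2:]


def perturb(sentence: str, level: str, rate: float = 0.3, seed: int = 0) -> str:
    """Perturb each word with probability `rate` at the chosen level."""
    if level not in ("char", "word"):
        raise ValueError(f"unknown level: {level}")
    rng = random.Random(seed)  # seeded so adversarial sets are reproducible
    out = []
    for word in sentence.split():
        if rng.random() >= rate:
            out.append(word)  # leave this word untouched
        elif level == "char":
            out.append(char_swap(word, rng))
        # level == "word": the word is dropped entirely (word deletion)
    return " ".join(out)
```

A robust model should give the same entailment label for a hypothesis and its lightly perturbed variant; comparing predictions on such pairs is one way to measure robustness beyond plain accuracy.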

How does your research advance the state-of-the-art in the field of natural language processing?

The task of classifying a hypothesis as entailment, contradiction, or neutral given a premise is known as Natural Language Inference (NLI). Large language models, such as BERT and RoBERTa, have demonstrated performance on par with that of humans on this task. However, existing methods based on language models are ineffective at reasoning over semi-structured data, such as tables. These models often ignore relevant rows and rely on spurious correlations in hypotheses or on pre-training knowledge when making inferences. Models trained on human-curated datasets frequently lack generalizability and robustness because of biases in those datasets, such as annotation artifacts. Furthermore, thorough model evaluation is hampered by the lack of test sets. As a result, assessing models based solely on accuracy does not adequately capture their reliability and robustness.

This paper explores a solution via prompt learning, namely Pattern-Exploiting Training, which pairs cloze-question patterns with verbalizers so that downstream tasks can exploit the knowledge of pre-trained models. Our extensive experiments show that this strategy can preserve knowledge and improve tabular NLI performance. PET is an efficient training method for enabling large language models to address reasoning tasks on semi-structured data, since it does not require model modifications such as adding extra layers or parameters during pre-training.
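The pattern and verbalizer pairing at the heart of PET can be sketched as follows. The cloze template and the Yes/No/Maybe verbalizer are assumptions for illustration, the table premise is assumed to be linearized into text, and `mask_scores` stands in for a masked language model's scores at the mask position.

```python
import math

# Verbalizer (assumed): each NLI label is tied to one vocabulary word.
VERBALIZER = {"entailment": "Yes", "contradiction": "No", "neutral": "Maybe"}


def pattern(premise: str, hypothesis: str, mask: str = "<mask>") -> str:
    """Cloze pattern (assumed): the MLM must fill the mask with a verbalizer word."""
    return f"{premise}? {mask}, {hypothesis}"


def label_probs(mask_scores: dict[str, float]) -> dict[str, float]:
    """Softmax over the MLM's scores for the verbalizer words only."""
    logits = {label: mask_scores[word] for label, word in VERBALIZER.items()}
    top = max(logits.values())  # subtract the max for numerical stability
    exps = {label: math.exp(v - top) for label, v in logits.items()}
    total = sum(exps.values())
    return {label: e / total for label, e in exps.items()}


def predict(mask_scores: dict[str, float]) -> str:
    """The predicted label is the one whose verbalizer word is most probable."""
    probs = label_probs(mask_scores)
    return max(probs, key=probs.get)
```

Because the label is read off the model's existing MLM head, no new classification layer is needed; in the PET formulation as we understand it, training minimizes cross-entropy between this restricted distribution and the gold label.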

IndoRobusta: Towards Robustness Against Diverse Code-Mixed Indonesian Local Languages

Muhammad Farid Adilazuarda (Institut Teknologi Bandung), Samuel Cahyawijaya (The Hong Kong University of Science and Technology), Genta Indra Winata (Bloomberg), Pascale Fung (The Hong Kong University of Science and Technology) and Ayu Purwarianti (Institut Teknologi Bandung)

SUMEval Workshop (Sunday, November 20, 2022 @ 2:55-3:10 AM CST (Taipei))

Please summarize your research. Why are your results notable?

Genta: In bilingual and multilingual communities, it is very common for people to alternate between languages during a conversation, a behavior known as code-switching. This phenomenon poses a challenge for modeling, since language models' performance tends to drop when the input mixes languages.

Significant progress has been made in NLP for low-resource languages, but code-mixing remains underexplored, even though many languages are frequently mixed in daily conversation, especially in Indonesia, where more than 700 languages and dialects are spoken. In this paper, we study code-mixing phenomena in Indonesian, which is commonly mixed with other languages such as English, Sundanese, Javanese, and Malay.

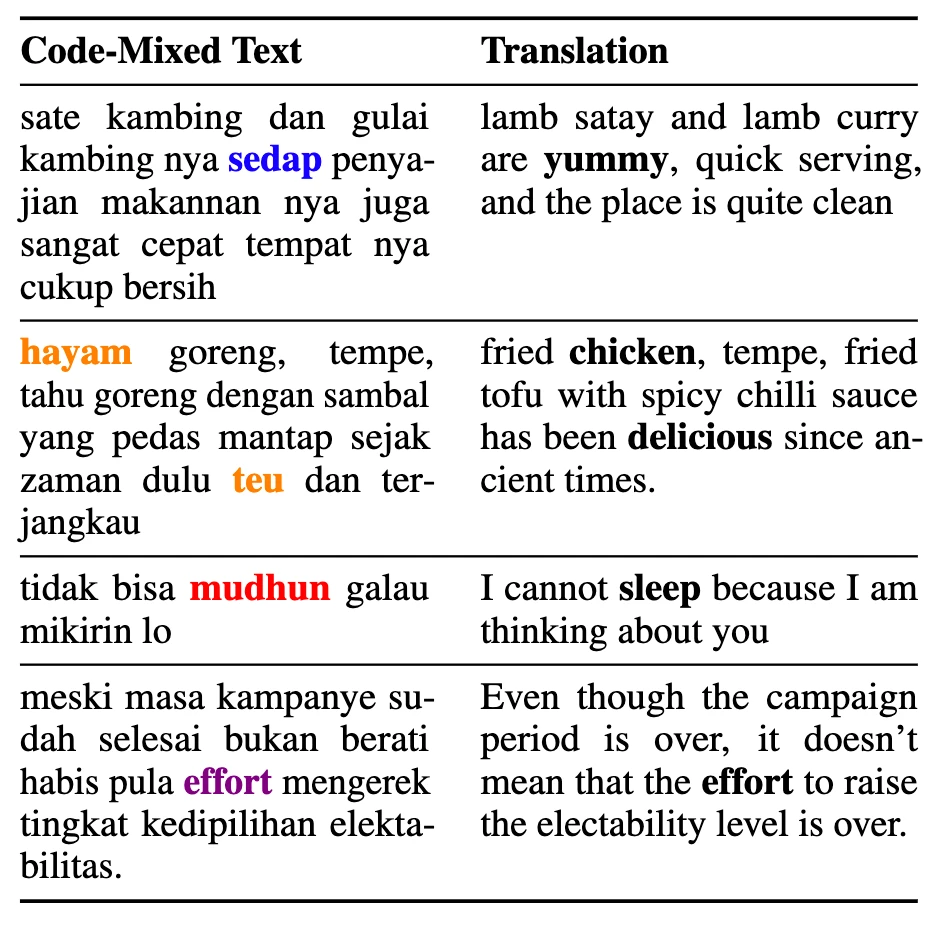

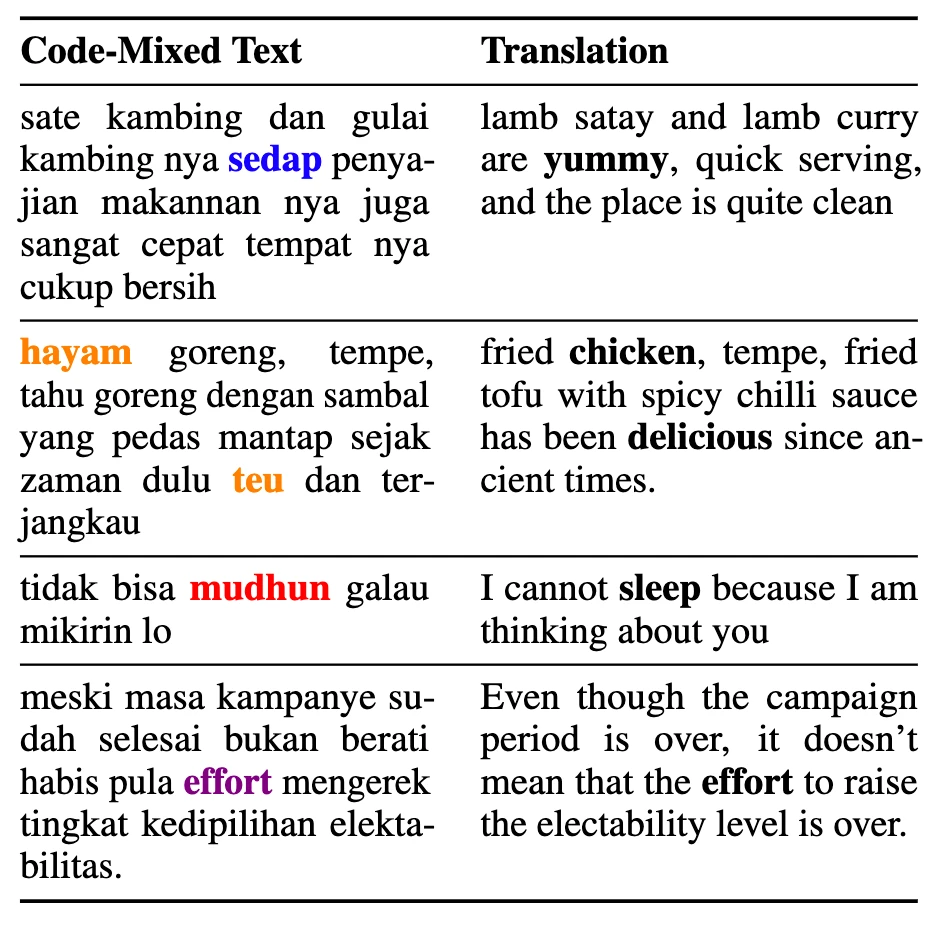

We introduce IndoRobusta, a framework for evaluating and improving code-mixing robustness by simulating code-mixed text, and within it we propose two methods: IndoRobusta-Blend and IndoRobusta-Shot. IndoRobusta-Blend is a code-mixing robustness evaluation method that involves two steps: 1) code-mixed dataset generation and 2) model evaluation on the code-mixed dataset. The first step synthetically generates code-mixed examples by translating the important words in a sentence, as shown in Table 1.
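A minimal sketch of the generation step, assuming word importance is measured by leave-one-out: remove each word, re-score the sentence, and treat the largest score drops as the most important words. The scorer `score`, the bilingual `lexicon`, and the function names are hypothetical inputs for illustration; the paper's importance measure and translation method may differ.

```python
from typing import Callable

Scorer = Callable[[list[str]], float]


def word_importance(words: list[str], score: Scorer) -> list[float]:
    """Leave-one-out importance: the score drop when each word is removed."""
    base = score(words)
    return [base - score(words[:i] + words[i + 1:]) for i in range(len(words))]


def code_mix(sentence: str, score: Scorer, lexicon: dict[str, str], k: int = 1) -> str:
    """Translate up to k of the most important words using a bilingual lexicon."""
    words = sentence.split()
    importance = word_importance(words, score)
    # Visit positions from most to least important (ties keep sentence order).
    ranked = sorted(range(len(words)), key=lambda i: -importance[i])
    replaced = 0
    for i in ranked:
        if replaced == k:
            break
        translation = lexicon.get(words[i].lower())
        if translation:  # skip words the lexicon cannot translate
            words[i] = translation
            replaced += 1
    return " ".join(words)
```

Targeting the most important words, rather than random ones, makes the generated examples more adversarial: the words the model relies on most are exactly the ones moved into the embedded language.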

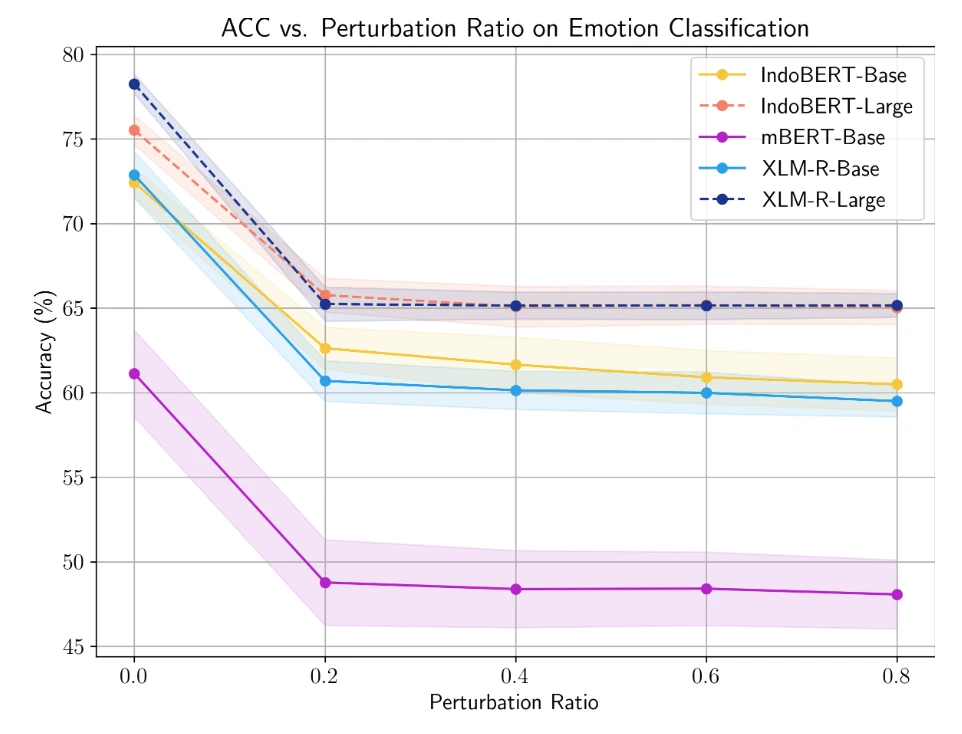

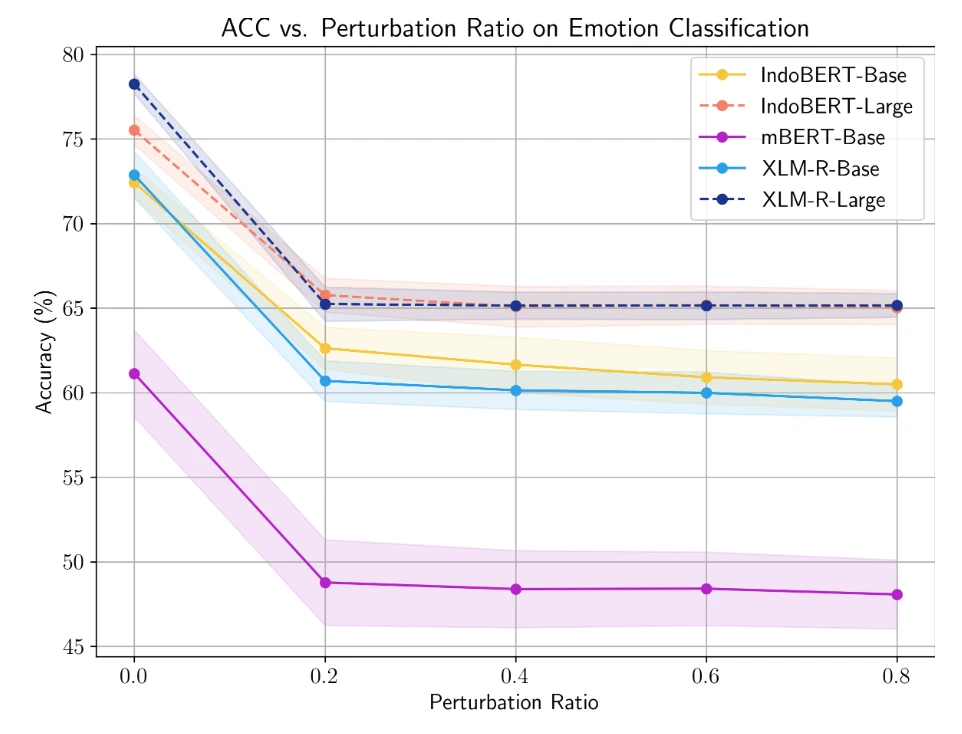

Then, we evaluate models on the code-mixed examples for downstream NLP tasks. We found that the performance of pre-trained language models (LMs) dropped significantly after we inserted words from an embedded language into Indonesian text, as shown in Table 2.

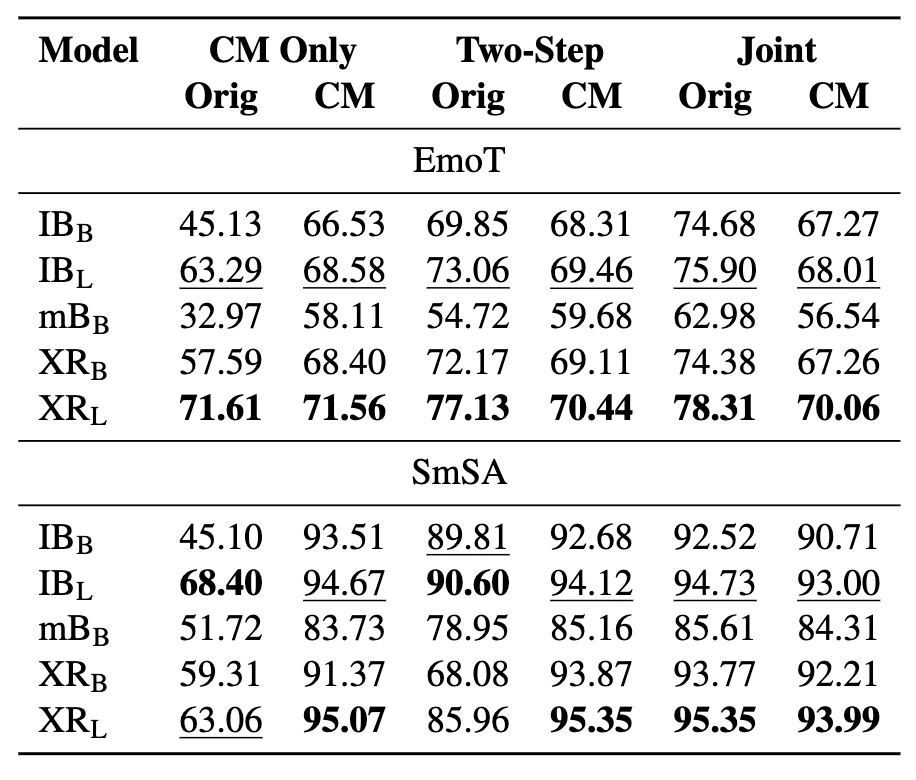

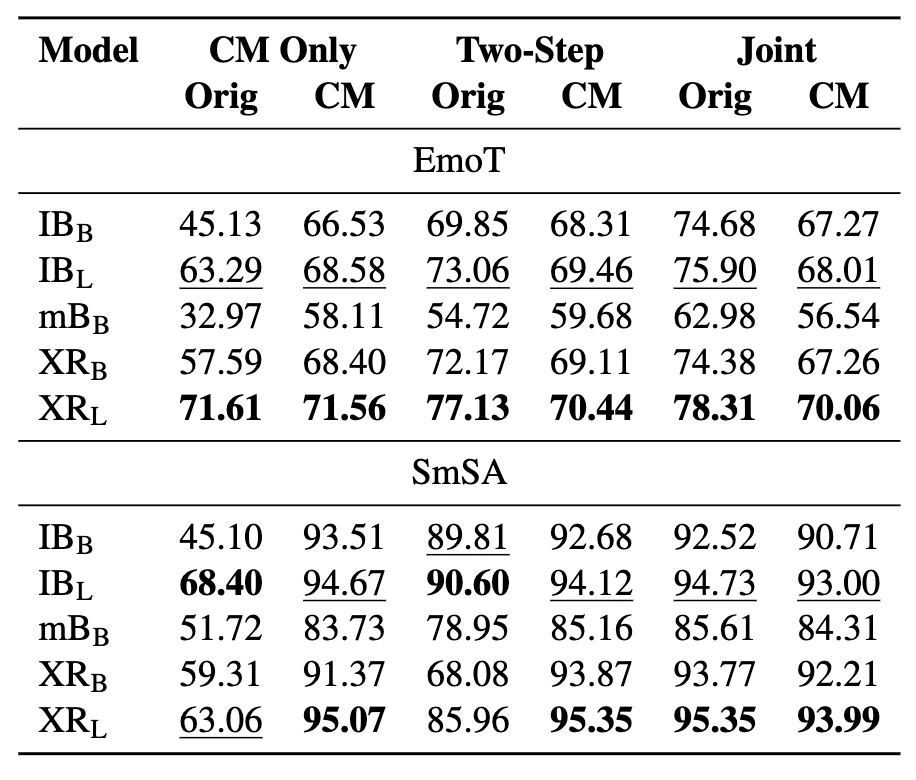

To alleviate this performance drop on code-mixed text, we propose IndoRobusta-Shot, a code-mixing adversarial defense method that aims to improve model robustness. IndoRobusta-Shot does so by fine-tuning the model on the generated code-mixed dataset, using either two-step or joint training. Table 3 shows that this defense yields significant improvements.
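The difference between the two training variants can be sketched as a data schedule. This reflects one reading of "two-step" training (original data first, then code-mixed data) and "joint" training (both at once); `training_schedule`, `run`, and the `train_epoch` callback are hypothetical names, not the authors' code.

```python
from typing import Callable, TypeVar

State = TypeVar("State")


def training_schedule(original: list, code_mixed: list, mode: str) -> list[list]:
    """Return training stages: one dataset per stage, run in order."""
    if mode == "two-step":
        # Stage 1: original data; stage 2: generated code-mixed data.
        return [list(original), list(code_mixed)]
    if mode == "joint":
        # A single stage over the union of both datasets.
        return [list(original) + list(code_mixed)]
    raise ValueError(f"unknown mode: {mode}")


def run(state: State, stages: list[list], train_epoch: Callable[[State, list], State]) -> State:
    """Fine-tune stage by stage; `train_epoch` is a hypothetical training callback."""
    for data in stages:
        state = train_epoch(state, data)
    return state
```

Keeping the original examples in the joint setting is one plausible way to gain code-mixing robustness without sacrificing monolingual performance.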

How does your research advance the state-of-the-art in the field of natural language processing?

We introduce IndoRobusta, a framework to effectively evaluate and improve model robustness on code-mixed text. Our results suggest that adversarial training can significantly improve the code-mixing robustness of LMs while also improving performance on monolingual data. Our analysis shows that bias in the pre-training corpus makes models better at handling Indonesian-English code-mixing than code-mixing with other local languages, despite the higher language diversity of Indonesian-English code-mixing. We hope this study will be useful for applications that predominantly process bilingual and multilingual text, including code-mixed text.