Bloomberg’s AI Engineering Group & CTO Office Publish 4 NLP Research Papers at EACL & ICLR 2023

May 01, 2023

During the 17th Conference of the European Chapter of the Association for Computational Linguistics (EACL 2023) in Dubrovnik, Croatia this week, researchers from Bloomberg’s AI Engineering Group and CTO Office are showcasing their expertise in natural language processing (NLP) by publishing three papers, one of which will appear in Findings of EACL 2023. A fourth paper is being published at the 11th International Conference on Learning Representations (ICLR 2023) in Kigali, Rwanda.

Through these papers, the authors and their collaborators highlight a variety of NLP applications, novel approaches and improved models used in key tasks, and other advances to the state-of-the-art in the field of computational linguistics.

We asked some of the authors to summarize their research and explain why the results were notable:

Towards a Unified Multi-Domain Multilingual Named Entity Recognition Model

Mayank Kulkarni (Amazon Alexa AI), Daniel Preoţiuc-Pietro (Bloomberg), Karthik Radhakrishnan (Bloomberg), Genta Winata (Bloomberg), Shijie Wu (Bloomberg), Jane Xie (Bloomberg), Shaohua Yang (work done while at Bloomberg)

EACL 2023

- Poster Session 3 (May 3, 2023 @ 9:00 AM CEST)

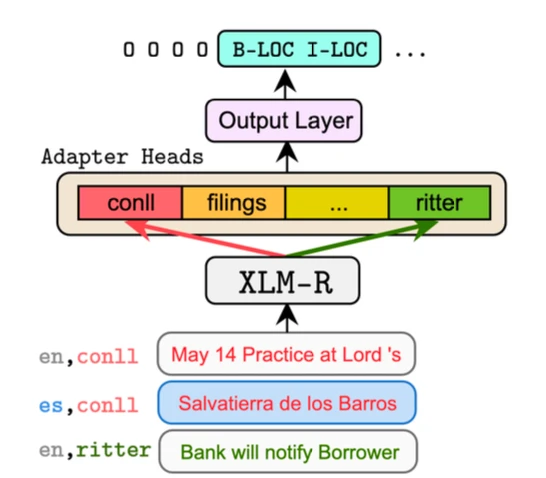

Karthik: Named Entity Recognition (NER) is a key Natural Language Processing (NLP) task: finding mentions of entities, such as companies or people, in written text. The performance of models trained for this task is sensitive to the genre (e.g., news articles or company filings) and language of the text. We explore several knowledge-sharing techniques on top of Transformer-based encoders, such as data pooling, Mixture-of-Experts methods, and language- or domain-specific adapter heads.
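To make the Mixture-of-Experts idea more concrete, here is a minimal, generic sketch of a softmax-gated mixture over expert outputs for a single token representation. It is not the paper's architecture: the toy experts, the gate scores, and the names `softmax` and `mixture_of_experts` are illustrative assumptions. In a real model, the experts and the gate would be learned layers on top of the shared Transformer encoder, and the gate scores would be computed from the input.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]


def softmax(scores: Sequence[float]) -> Vector:
    """Numerically stable softmax over a list of gate scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def mixture_of_experts(
    h: Vector,
    experts: Sequence[Callable[[Vector], Vector]],
    gate_scores: Sequence[float],
) -> Vector:
    """Combine expert outputs for one token, weighted by the softmax of the gate scores."""
    weights = softmax(gate_scores)
    outputs = [expert(h) for expert in experts]
    return [
        sum(w * out[i] for w, out in zip(weights, outputs))
        for i in range(len(outputs[0]))
    ]


# Hypothetical toy experts, e.g. one specialized for news and one for company filings.
def news_expert(h: Vector) -> Vector:
    return [2.0 * x for x in h]


def filings_expert(h: Vector) -> Vector:
    return [x + 1.0 for x in h]


token = [0.5, -1.0]
mixed = mixture_of_experts(token, [news_expert, filings_expert], gate_scores=[0.0, 0.0])
print(mixed)  # equal gate scores average the two experts: [1.25, -1.0]
```

With equal gate scores the experts are simply averaged; a learned gate would instead shift weight toward the expert best suited to the input's domain or language.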

Our experiments highlight multiple nuances to consider when building a unified model: naive data pooling fails to obtain good performance; domain-specific adaptations are more important than language-specific ones; and including domain-specific adaptations in a unified model can bring its performance close to that of multiple dedicated monolingual models, at a fraction of their parameter count.

Contrary to past research in cross-lingual NER, we show that Mixture-of-Experts offers limited gains over naive data pooling methods. We find that performing domain adaptation using adapter heads achieves a good trade-off between performance and parameter count and could represent the optimal solution for deploying a unified model. The figure above shows the architecture of our proposed unified model.
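The parameter trade-off can be illustrated with back-of-the-envelope arithmetic. The sketch below assumes bottleneck adapters (a down-projection and an up-projection, each with a bias) in every encoder layer, with one adapter set per domain, and compares a single shared encoder plus adapters against one dedicated model per domain. The paper's exact adapter placement may differ, and the encoder size, hidden size, bottleneck size, and layer count are hypothetical values chosen for illustration; only the 13 domains come from the paper.

```python
def adapter_params(hidden: int, bottleneck: int) -> int:
    """Parameters of one bottleneck adapter: down- and up-projection, each with a bias."""
    down = hidden * bottleneck + bottleneck
    up = bottleneck * hidden + hidden
    return down + up


def unified_params(encoder: int, hidden: int, bottleneck: int, layers: int, domains: int) -> int:
    """One shared encoder plus a separate adapter in every layer for each domain."""
    return encoder + domains * layers * adapter_params(hidden, bottleneck)


def dedicated_params(encoder: int, domains: int) -> int:
    """One full, separately trained encoder per domain."""
    return encoder * domains


# Hypothetical sizes, roughly those of a base-sized Transformer encoder.
ENCODER, HIDDEN, BOTTLENECK, LAYERS, DOMAINS = 125_000_000, 768, 64, 12, 13

unified = unified_params(ENCODER, HIDDEN, BOTTLENECK, LAYERS, DOMAINS)
dedicated = dedicated_params(ENCODER, DOMAINS)
print(f"unified: {unified:,}  dedicated: {dedicated:,}  ratio: {unified / dedicated:.3f}")
# unified: 140,465,216  dedicated: 1,625,000,000  ratio: 0.086
```

Under these assumed sizes, the unified model needs roughly 8.6% of the parameters of thirteen dedicated models, because each additional domain only adds small adapters rather than a full encoder.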

Jane: Named Entity Recognition (NER) is a key task that is used as a first step in many NLP solutions. A unified NER model that performs well across multiple genres and languages is more practical and efficient, and it could also perform better, as it can leverage commonalities in how entities are mentioned across genres or languages. This paper is the first attempt to build a unified multi-domain multilingual NER model, with experiments across 13 domains and 4 languages. We hope the insights presented in our paper provide strong baselines for this new task and pave the way for further research into multi-domain multilingual NER.


Distillation of encoder-decoder transformers for sequence labeling

Marco Farina (Bloomberg), Duccio Pappadopulo (Bloomberg), Anant Gupta (Bloomberg), Leslie Huang (Bloomberg), Ozan İrsoy (Bloomberg), Thamar Solorio (Bloomberg/University of Houston)

Findings of EACL 2023

- Findings (May 2, 2023 @ 6:15 PM CEST)

Please summarize your research. Why are your results notable?

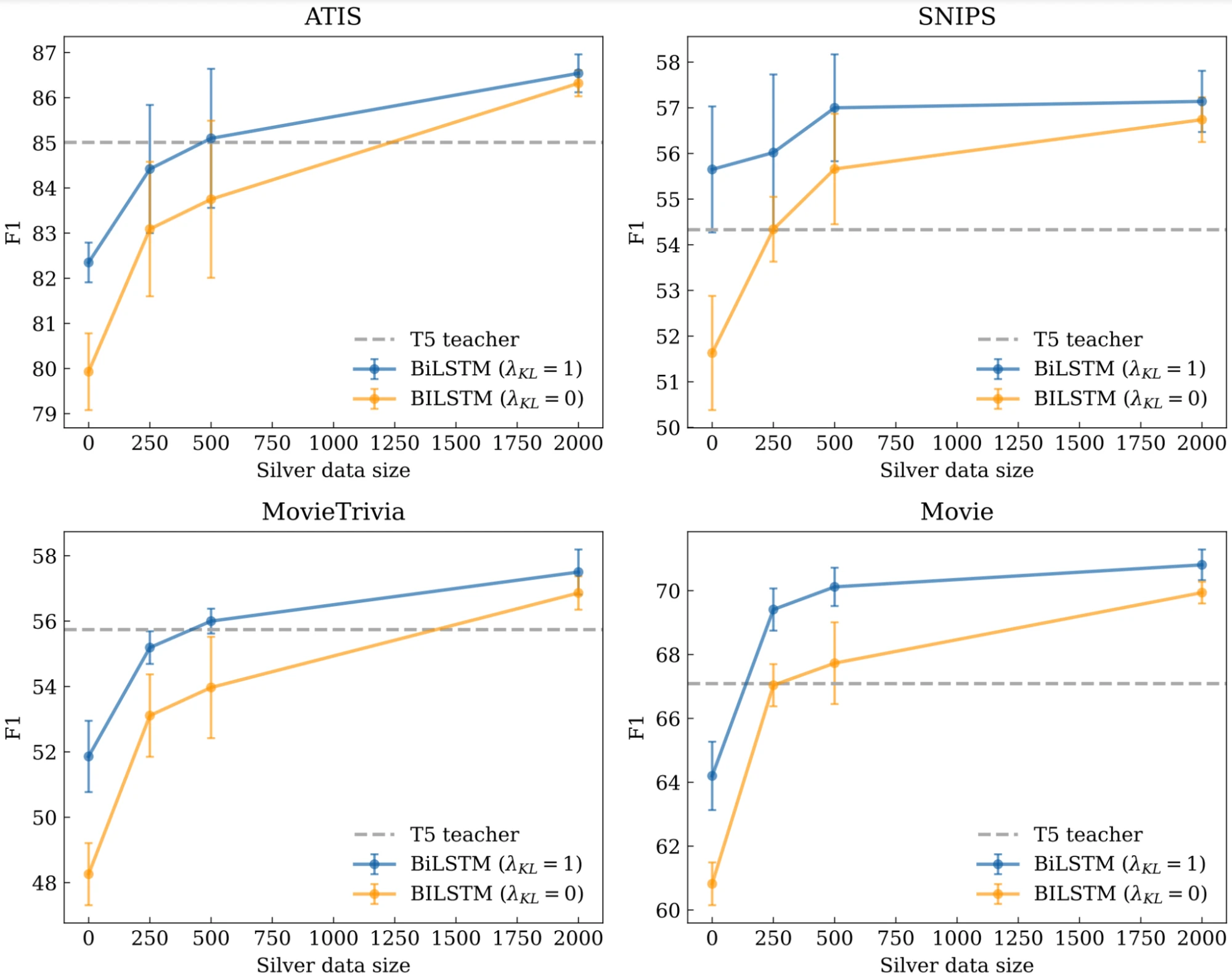

Leslie: The field of NLP is experiencing an accelerated race to develop larger and more powerful language models. However, training such models requires enormous amounts of labeled data. Another disadvantage is that these models are slow and require substantial computing resources at inference time. These tradeoffs underscore the need for practical distillation approaches that can leverage the knowledge acquired by large language models (LLMs) in a compute-efficient manner.

Keeping these constraints in mind, we build on recent work to propose a hallucination-free framework for sequence tagging that is especially suited for distillation. Our inference algorithm, SenTScore, combines a target sequence format and a scoring mechanism for decoding. Our paper shows empirical results of new state-of-the-art performance across multiple sequence labeling datasets and validates the usefulness of SenTScore for distilling a large model in a few-shot learning scenario.
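The idea of a hallucination-free output can be illustrated with a generic constrained decoder. Instead of letting the decoder generate free text, it scores every candidate tag for every input token and keeps the highest-scoring one, so the output is always aligned one-to-one with the input and can only contain labels from a fixed tag set. This is a simplified sketch, not SenTScore itself: the paper's target sequence format is not reproduced, and `toy_score` is a hypothetical stand-in for the encoder-decoder model's score for a tag at a given position.

```python
from typing import Callable, List, Sequence

TAGS = ["O", "B-PER", "I-PER", "B-ORG", "I-ORG"]


def constrained_tagging(
    tokens: Sequence[str],
    score: Callable[[Sequence[str], int, str], float],
    tags: Sequence[str] = TAGS,
) -> List[str]:
    """Pick the best-scoring tag for each input position.

    The output length always equals the input length and every label comes
    from `tags`, so the decoder cannot invent tokens or labels.
    """
    return [max(tags, key=lambda tag: score(tokens, i, tag)) for i in range(len(tokens))]


# Hypothetical toy scorer standing in for a seq2seq model's (log-)probability of a tag.
TOY_LEXICON = {"Bloomberg": "B-ORG", "Karthik": "B-PER"}


def toy_score(tokens: Sequence[str], i: int, tag: str) -> float:
    expected = TOY_LEXICON.get(tokens[i], "O")
    return 1.0 if tag == expected else 0.0


print(constrained_tagging(["Karthik", "works", "at", "Bloomberg"], toy_score))
# ['B-PER', 'O', 'O', 'B-ORG']
```

Because each position's score for every tag is available, the same scores could also be passed to a smaller student model as soft targets rather than only as a single predicted label.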

How does your research advance the state-of-the-art in the field of natural language processing?

Sequence labeling is a core NLP task that is embedded within many real-world NLP pipelines. Our algorithm, SenTScore, allows for the distillation of arbitrarily large encoder-decoder Transformer models into more compact model architectures. This is possible because our algorithm allows for knowledge distillation, not just distillation with pseudo-labels. Our results demonstrate that SenTScore outperforms the prior state of the art on a majority of seven benchmark datasets, even though the prior approach uses an alternative sequence format and a substantially larger model (580 million parameters, compared to our 220 million). Additionally, our results show that a small model distilled with SenTScore can achieve impressive results, even outperforming the teacher model.
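The difference between knowledge distillation and distillation with pseudo-labels can be shown on a single tagging decision. With pseudo-labels, the student only sees the teacher's top prediction as a one-hot target; with knowledge distillation, it is trained to match the teacher's full distribution over labels, which also tells it how plausible the alternatives are. The minimal sketch below computes both cross-entropy losses for one token; the probabilities are made-up illustrative values, the function names are hypothetical, and refinements such as temperature scaling are omitted.

```python
import math
from typing import Dict

Distribution = Dict[str, float]


def cross_entropy(target: Distribution, student: Distribution) -> float:
    """H(target, student) = -sum_y target(y) * log student(y)."""
    return -sum(p * math.log(student[y]) for y, p in target.items() if p > 0)


def pseudo_label(teacher: Distribution) -> Distribution:
    """One-hot target on the teacher's highest-probability label."""
    best = max(teacher, key=teacher.get)
    return {y: 1.0 if y == best else 0.0 for y in teacher}


teacher = {"B-ORG": 0.6, "B-PER": 0.3, "O": 0.1}
student = {"B-ORG": 0.5, "B-PER": 0.2, "O": 0.3}

soft_loss = cross_entropy(teacher, student)  # knowledge distillation
hard_loss = cross_entropy(pseudo_label(teacher), student)  # pseudo-label distillation
print(f"soft: {soft_loss:.4f}  hard: {hard_loss:.4f}")
```

The pseudo-label loss depends only on the student's probability for `B-ORG`, whereas the soft loss also penalizes the student for under-weighting `B-PER` relative to the teacher.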

NusaX: Multilingual Parallel Sentiment Dataset for 10 Indonesian Local Languages

Genta Winata (Bloomberg), Alham Fikri Aji, Samuel Cahyawijaya, Rahmad Mahendra, Fajri Koto, Ade Romadhony, Kemal Kurniawan, David Moeljadi, Radityo Eko Prasojo, Pascale Fung, Timothy Baldwin, Jey Han Lau, Rico Sennrich, Sebastian Ruder

EACL 2023

- Poster Session 3 (May 3, 2023 @ 9:00 AM CEST)

- Oral Session: Language Resources and Evaluation 2 (May 3, 2023 @ 12:15 PM CEST)

Please summarize your research. Why are your results notable?

Genta: Natural language processing (NLP) is having a significant impact on society via technologies such as machine translation and search engines. Despite its success, NLP technology is only widely available for high-resource languages – languages such as English and Mandarin Chinese for which many texts and supervised data exist – and remains inaccessible to many languages due to the unavailability of data resources and benchmarks.

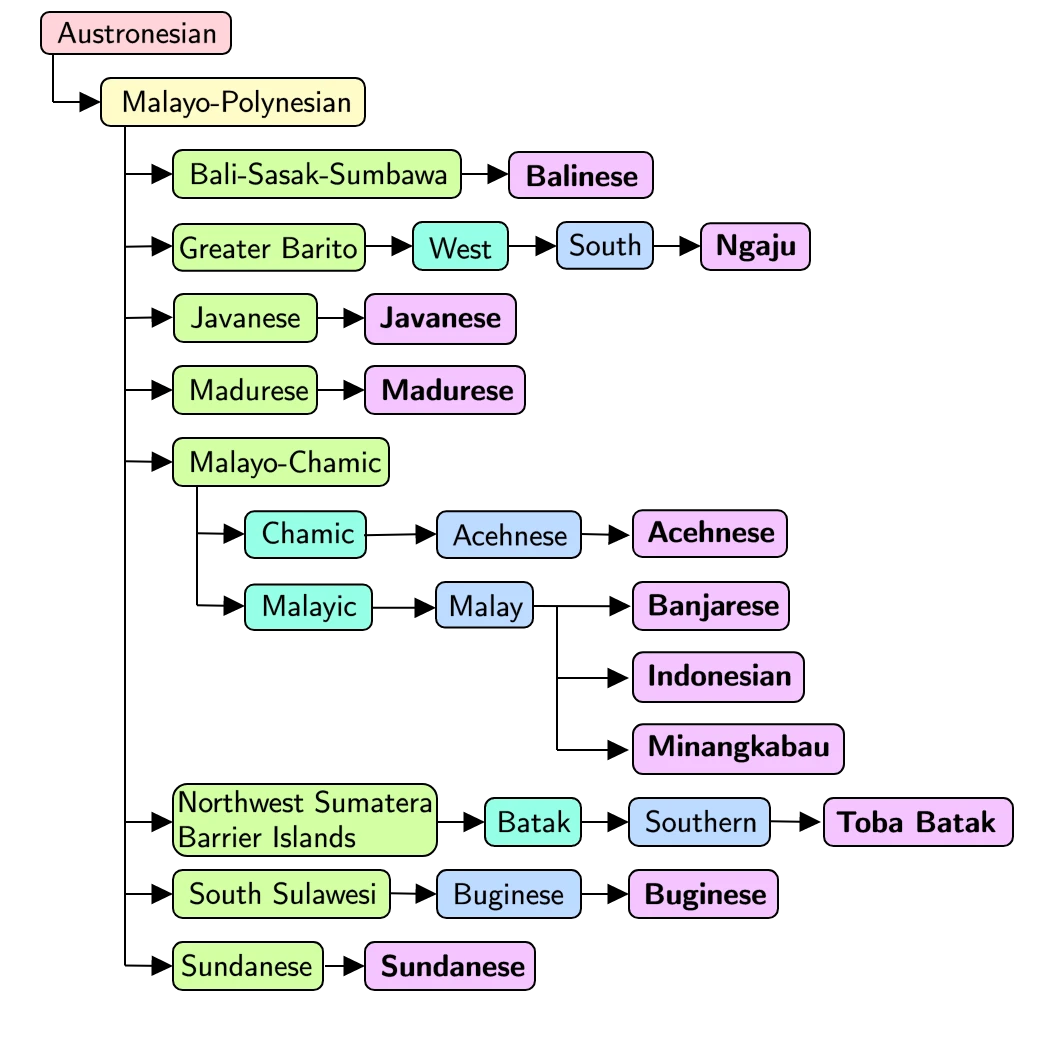

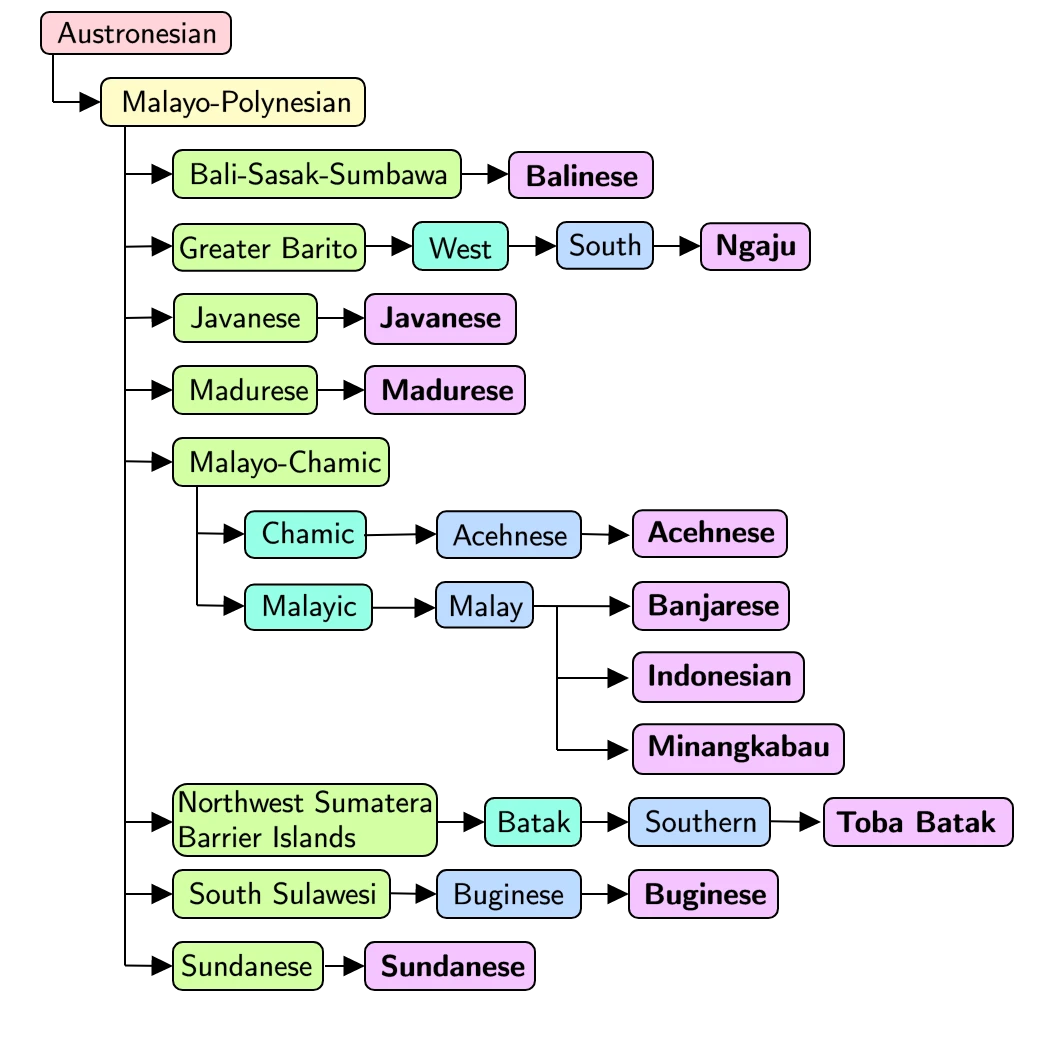

In this work, we focus on developing the first high-quality, human-annotated parallel corpus for 10 underrepresented languages used in Indonesia (see the figure below). This project is a year-long global open-source collaboration, and we teamed up with computational linguists to better understand the linguistic features of each language.

Some of our languages are considered zero-resource languages, since no publicly accessible resources are available. Our resource includes sentiment and machine translation datasets, as well as bilingual lexicons. We provide extensive analysis and describe the challenges in creating such resources.

Our data collection process consists of several steps. First, we take an existing dataset in a high-resource local language (Indonesian) as a base for expansion to the other ten languages, and ask human annotators who are native speakers of those languages to translate the text. Then, to ensure the quality of the final translations, we perform quality assurance with additional human annotators.
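These collection steps can be sketched as a small data model: each Indonesian source sentence keeps its sentiment label, native-speaker translations are attached per target language, and only translations approved by an additional quality-assurance annotator are exported. The record fields, the `approved` flag, the annotator names, and the placeholder sentences are hypothetical. The sketch assumes the sentiment label carries over unchanged to each translation, as in a parallel sentiment dataset, and it simply filters out unapproved translations; how the actual project handled rejected translations is not described here.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class Translation:
    text: str
    translator: str
    approved: bool = False  # set by a second annotator during quality assurance


@dataclass
class ParallelRecord:
    source_id: str
    indonesian: str
    label: str  # sentiment label of the Indonesian source sentence
    translations: Dict[str, Translation] = field(default_factory=dict)


def export_language(records: List[ParallelRecord], lang: str) -> List[Tuple[str, str, str]]:
    """Return (id, text, label) rows for one language, keeping only QA-approved translations."""
    rows = []
    for rec in records:
        t = rec.translations.get(lang)
        if t is not None and t.approved:
            rows.append((rec.source_id, t.text, rec.label))
    return rows


records = [
    ParallelRecord("s1", "<Indonesian sentence 1>", "positive", {
        "jav": Translation("<Javanese translation 1>", "annotator_a", approved=True),
        "sun": Translation("<Sundanese translation 1>", "annotator_b", approved=False),
    }),
    ParallelRecord("s2", "<Indonesian sentence 2>", "negative", {
        "jav": Translation("<Javanese translation 2>", "annotator_a", approved=True),
    }),
]

print(export_language(records, "jav"))  # both Javanese rows, each with its source label
print(export_language(records, "sun"))  # [] because the only Sundanese translation is unapproved
```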

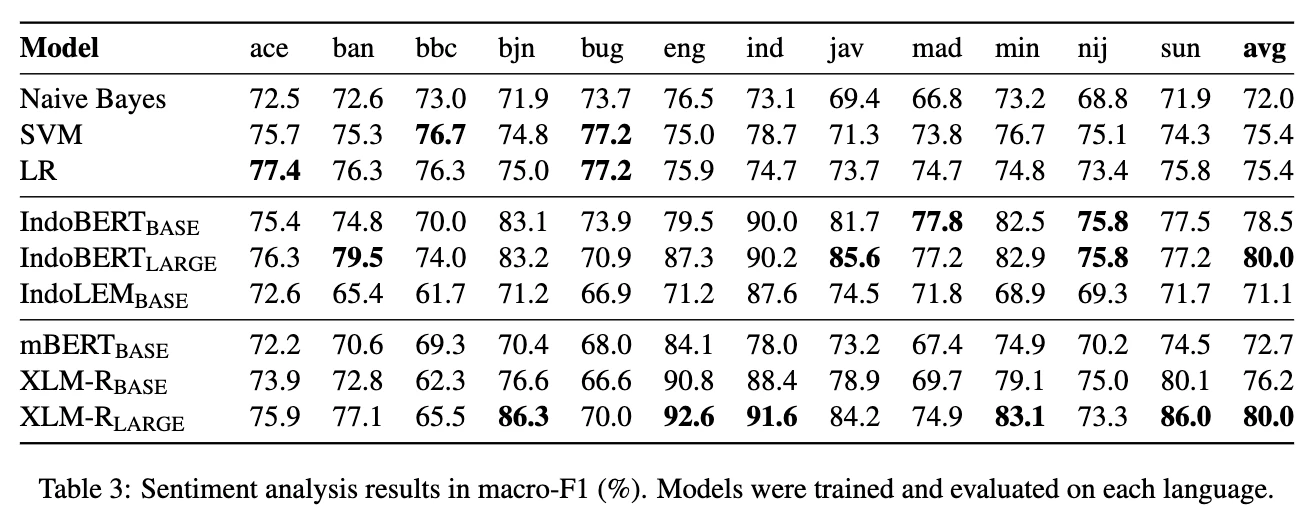

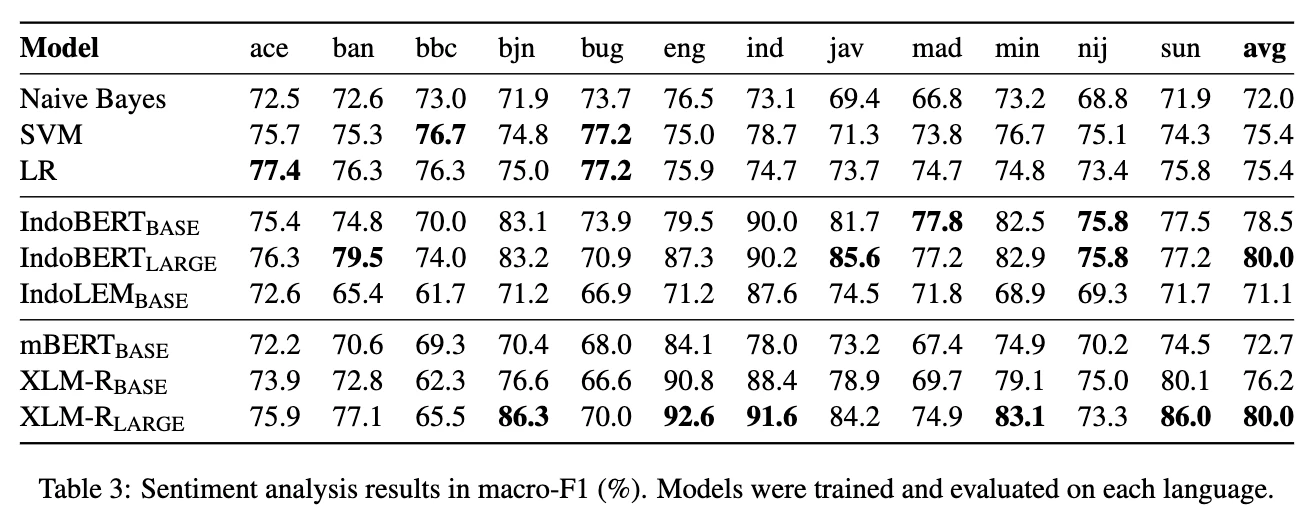

We provide an extensive evaluation of deep learning and classical NLP/machine learning methods on downstream tasks in few-shot and full-data settings. We also conduct a comprehensive analysis of the languages under study from both linguistic and empirical perspectives, of the cross-lingual transferability of existing monolingual and multilingual language models (LMs), and of the efficiency of various methods for NLP tasks in extremely low-resource languages.
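Few-shot settings are commonly built by sampling a fixed number of training examples per label with a fixed random seed, so that different methods are compared on the same subset. The following is a minimal sketch assuming `(id, text, label)` rows; the function name, the value of `k`, and the per-label sampling scheme are assumptions rather than the paper's exact protocol.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Row = Tuple[str, str, str]  # (id, text, label)


def k_shot_subset(rows: Sequence[Row], k: int, seed: int = 0) -> List[Row]:
    """Sample up to k examples per label, deterministically for a given seed."""
    by_label: Dict[str, List[Row]] = defaultdict(list)
    for row in rows:
        by_label[row[2]].append(row)
    rng = random.Random(seed)  # local generator, so the global random state is untouched
    subset: List[Row] = []
    for label in sorted(by_label):  # fixed label order keeps sampling reproducible
        pool = by_label[label]
        subset.extend(rng.sample(pool, min(k, len(pool))))
    return subset


# Hypothetical toy data: 30 rows, 10 per sentiment label.
rows = [(f"s{i}", f"<text {i}>", ["positive", "neutral", "negative"][i % 3]) for i in range(30)]
few = k_shot_subset(rows, k=5, seed=42)
print(len(few))  # 15
```

Using a dedicated `random.Random` instance makes the subset depend only on the seed and the data, which keeps few-shot comparisons between methods reproducible.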

How does your research advance the state-of-the-art in the field of natural language processing?

Creating new resources is very important for underrepresented languages of Indonesia, such as Ngaju and Banjarese, which are spoken by hundreds of thousands of people. Although Indonesia is the world's second-most linguistically diverse country, most of its languages are categorized as endangered, and some are already extinct. We hope this work will act as a catalyst for making these languages better known worldwide, which in turn can help preserve them.

This work opens up new possibilities for evaluating models on unseen languages, for which even unlabeled data is unavailable for pretraining. Through it, we hope to spark more NLP research on Indonesian and other underrepresented languages.

Dataless Knowledge Fusion by Merging Weights of Language Models

Xisen Jin (Bloomberg), Xiang Ren (University of Southern California), Daniel Preoţiuc-Pietro (Bloomberg), Pengxiang Cheng (Bloomberg)

ICLR 2023 Main Conference

Please summarize your research. Why are your results notable?

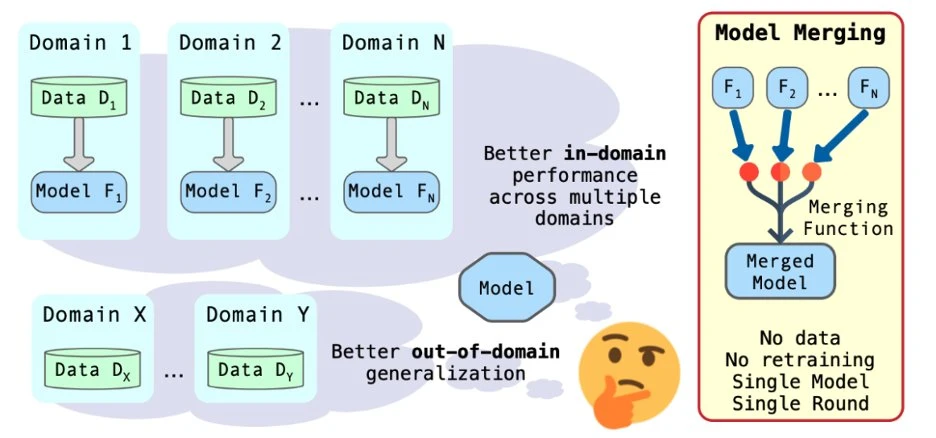

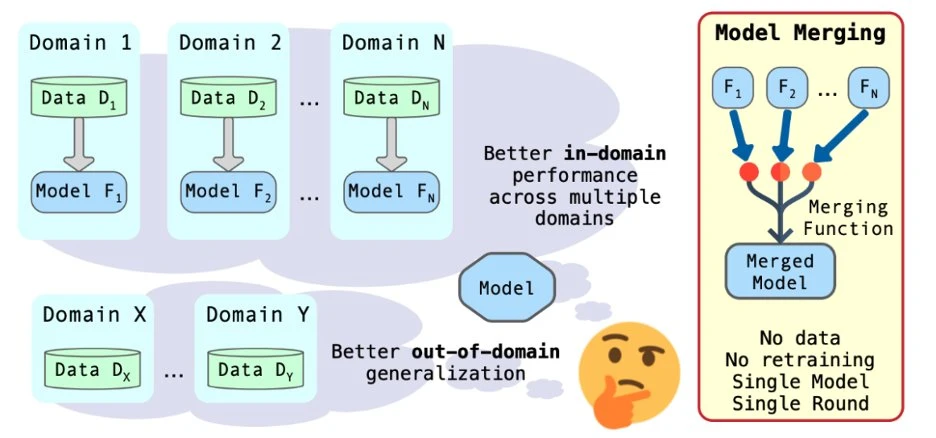

Pengxiang: Multi-task learning has shown that combining the knowledge of multiple datasets in a single model can lead to better overall performance on both in-domain and out-of-domain data. The common practice for building a multi-domain or multi-task model is to train a single model on all of the datasets combined. However, this can be expensive, and training must be repeated each time a new dataset is added. Moreover, fine-tuned models are often readily available, while their training data is not.

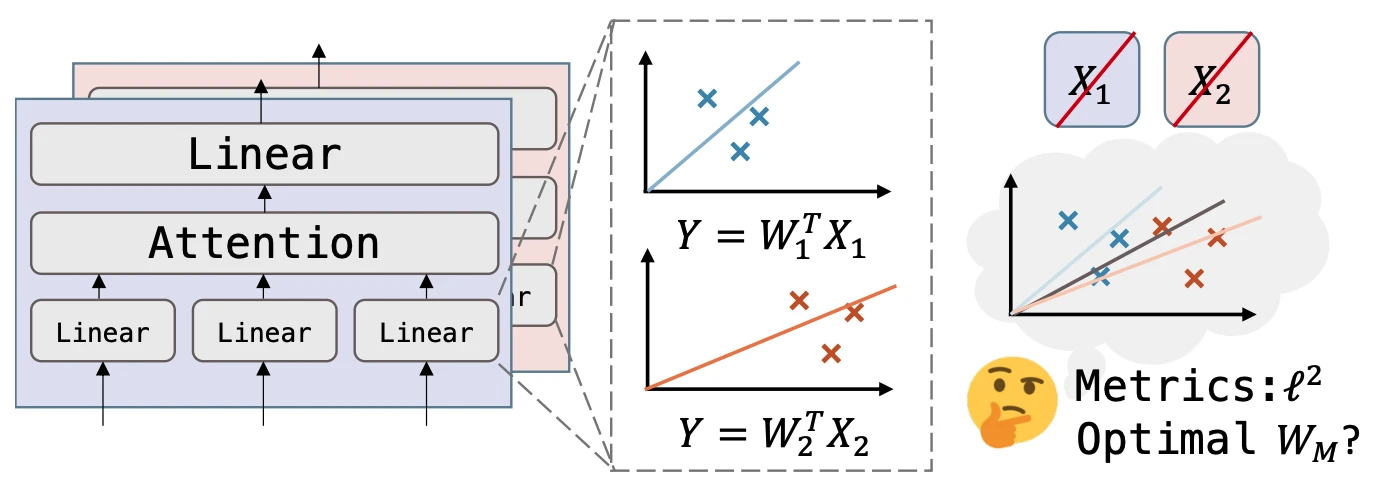

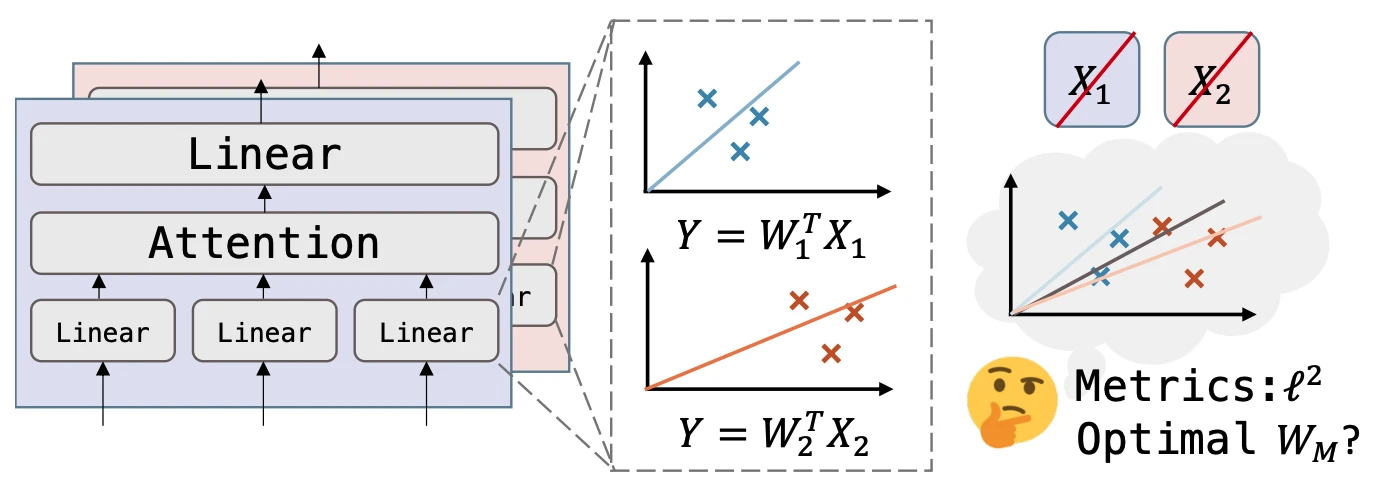

In this paper, we build a multi-domain/multi-task model in seconds by merging individual models without accessing their training data. A straightforward approach is to simply average the weights of the different models. However, we show that this solution is not optimal, even for linear models.
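Why simple averaging falls short, even for linear models, can be seen from ordinary least-squares regression. The derivation below assumes K linear models f_i(x) = W_iᵀx, each fit by least squares on its own inputs X_i and targets Y_i, with an invertible Gram matrix G_i = X_iᵀX_i; these assumptions are for illustration. W* denotes the model fit on all K datasets pooled together, and it uses X_iᵀY_i = G_i W_i.

```latex
\begin{aligned}
W_i &= G_i^{-1} X_i^\top Y_i, \qquad G_i = X_i^\top X_i \\
W^{*} &= \Big(\sum_{i=1}^{K} G_i\Big)^{-1} \sum_{i=1}^{K} X_i^\top Y_i
       = \Big(\sum_{i=1}^{K} G_i\Big)^{-1} \sum_{i=1}^{K} G_i W_i \\
W_{\mathrm{avg}} &= \frac{1}{K} \sum_{i=1}^{K} W_i
\end{aligned}
```

W_avg coincides with W* when all G_i are equal, but not in general: the pooled solution weights each model by the Gram matrix of its inputs, which simple averaging ignores. Note that W* depends on the training data only through the G_i and W_i, so it can be computed without access to the raw examples.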

Instead, we propose a closed-form solution that minimizes the L2 distance between the outputs of the merged model and those of the individual models. Given that most layers in Transformer LMs are also linear, our approach, RegMean, merges each of them independently with this closed-form solution.
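A minimal pure-Python sketch of this kind of closed-form merge for a single linear layer follows. It assumes each model's weights W_i (shape d_in × d_out) and the Gram matrix G_i = X_iᵀX_i of that layer's inputs are available, and computes W = (Σ G_i)⁻¹ Σ G_i W_i, which minimizes Σ_i ‖X_i W − X_i W_i‖². Any refinements the paper adds on top of this closed form are omitted, and the helper names and toy data are hypothetical. On this linear regression example, the merged weights match a model fit on the pooled data, while simple averaging does not.

```python
from typing import List

Matrix = List[List[float]]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    bt = transpose(b)
    return [[sum(x * y for x, y in zip(row, col)) for col in bt] for row in a]


def add(a: Matrix, b: Matrix) -> Matrix:
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def inverse(a: Matrix) -> Matrix:
    """Gauss-Jordan elimination with partial pivoting (assumes `a` is invertible)."""
    n = len(a)
    aug = [list(row) + [1.0 if i == j else 0.0 for j in range(n)] for i, row in enumerate(a)]
    for c in range(n):
        pivot = max(range(c, n), key=lambda r: abs(aug[r][c]))
        aug[c], aug[pivot] = aug[pivot], aug[c]
        p = aug[c][c]
        aug[c] = [v / p for v in aug[c]]
        for r in range(n):
            if r != c:
                f = aug[r][c]
                aug[r] = [v - f * w for v, w in zip(aug[r], aug[c])]
    return [row[n:] for row in aug]


def least_squares(x: Matrix, y: Matrix) -> Matrix:
    """Fit W minimizing ||XW - Y||^2 via W = (X^T X)^{-1} X^T Y."""
    xt = transpose(x)
    return matmul(inverse(matmul(xt, x)), matmul(xt, y))


def regmean_merge(weights: List[Matrix], grams: List[Matrix]) -> Matrix:
    """Merge linear layers: W = (sum_i G_i)^{-1} sum_i G_i W_i."""
    gram_sum = grams[0]
    weighted = matmul(grams[0], weights[0])
    for g, w in zip(grams[1:], weights[1:]):
        gram_sum = add(gram_sum, g)
        weighted = add(weighted, matmul(g, w))
    return matmul(inverse(gram_sum), weighted)


def simple_average(weights: List[Matrix]) -> Matrix:
    total = weights[0]
    for w in weights[1:]:
        total = add(total, w)
    return [[v / len(weights) for v in row] for row in total]


# Hypothetical toy data: two "datasets" for a 2-input, 1-output linear model.
x1, y1 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]], [[1.0], [2.0], [3.0]]
x2, y2 = [[2.0, 1.0], [1.0, 3.0], [0.0, 2.0]], [[1.0], [0.0], [2.0]]

w1, w2 = least_squares(x1, y1), least_squares(x2, y2)
g1, g2 = matmul(transpose(x1), x1), matmul(transpose(x2), x2)  # only these are shared

merged = regmean_merge([w1, w2], [g1, g2])
joint = least_squares(x1 + x2, y1 + y2)  # reference model trained on the pooled data
averaged = simple_average([w1, w2])
print(merged, joint, averaged)
```

In this demo the Gram matrices are computed from the toy inputs for convenience; in a data-free setting, each model owner would share only its weights and the Gram matrices of each linear layer's inputs, never the raw examples or labels.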

In our experiments, we merge models trained on different splits, domains, or tasks. We show that the proposed method produces a single model that can preserve or sometimes improve over the individual models without access to the training data. It also significantly outperforms baselines such as Fisher-weighted averaging or model ensembling.

How does your research advance the state-of-the-art in the field of natural language processing?

Fine-tuning pre-trained language models has become the prevalent paradigm for building downstream NLP models. We introduce a new model-merging method to combine the knowledge of multiple fine-tuned models without access to the training data. This method is particularly useful when the original training data must be kept private, whether to ensure data or annotation privacy or to protect intellectual property in the annotations.

Model merging can also be considered a more practical alternative to model ensembling, as the merged model has roughly the same number of parameters as any individual model. Model-merging algorithms can be applied to a wide range of problems, from efficient intermediate-task selection to improving the performance of models trained on private data in a federated learning setup.

We make our code for model-merging and examples publicly available to facilitate reproducibility and to serve as a starting point for future work on this topic: https://github.com/bloomberg/dataless-model-merging.