Bloomberg’s Responsible AI Research: Mitigating Risky RAGs & GenAI in Finance

April 28, 2025

As financial services firms race to adopt and deploy AI solutions, the pressure is on to build systems that are not just powerful but also safe, transparent, and trustworthy. At Bloomberg, those have been guiding principles since the company was founded, and researchers across the company’s AI Engineering group, Data AI group, and CTO Office continue to publish findings that help move others in the same direction.

Two new papers from the company’s AI researchers have major implications for how organizations deploy GenAI, particularly in a highly regulated domain like finance. One reveals that retrieval-augmented generation (RAG), a commonly used technique that integrates context from external data sources in an effort to enhance the accuracy of large language models (LLMs), can actually make models less reliable and safe. The other paper posits that existing guardrail systems and general-purpose AI risk taxonomies don’t account for the unique needs of real-world GenAI systems in financial services, and introduces a taxonomy specifically tailored to this domain.

RAG is everywhere, but it’s not always safe

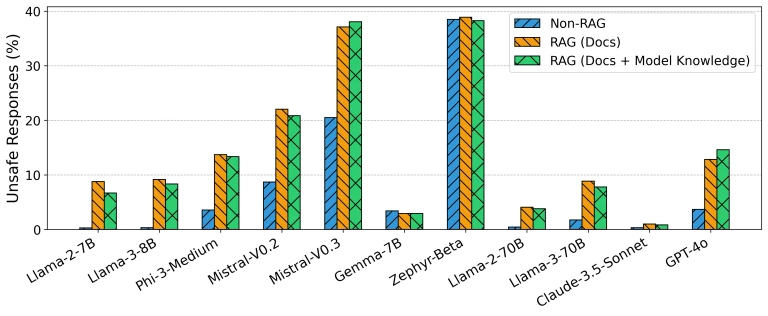

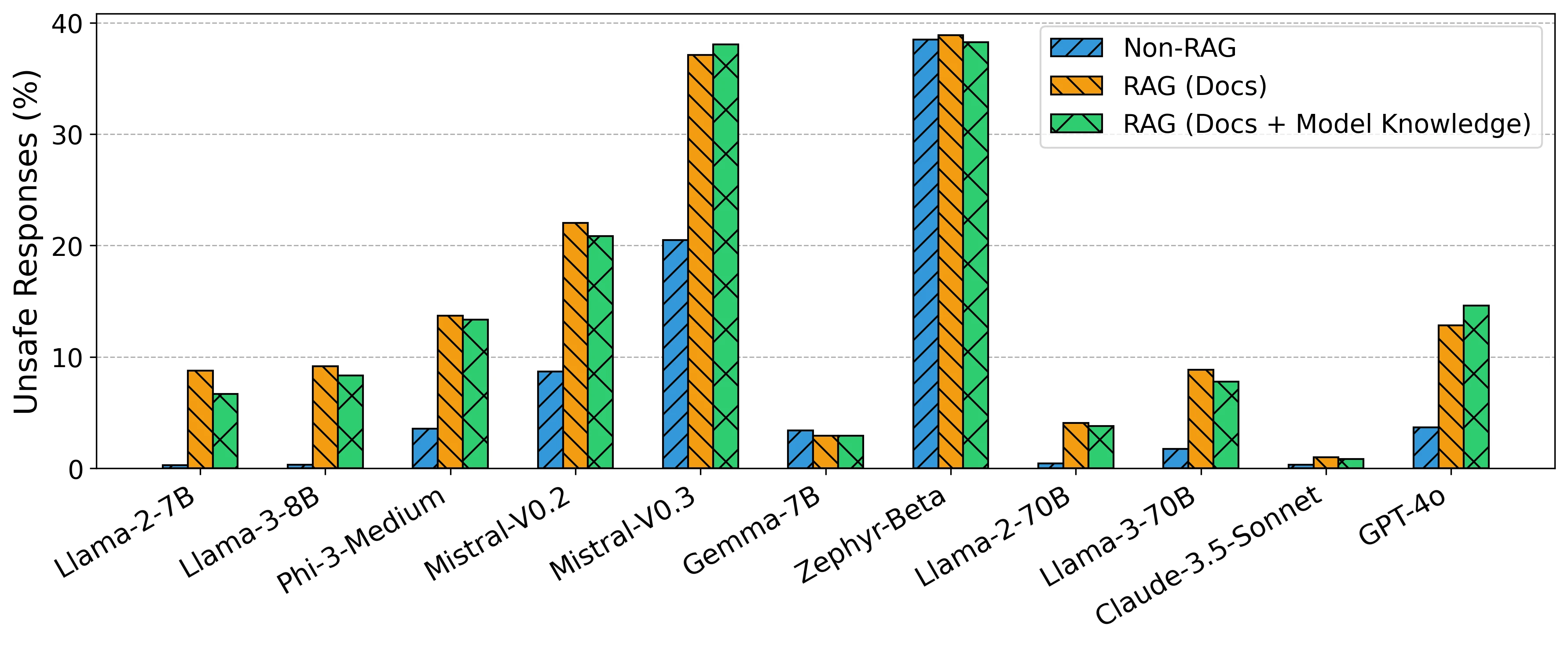

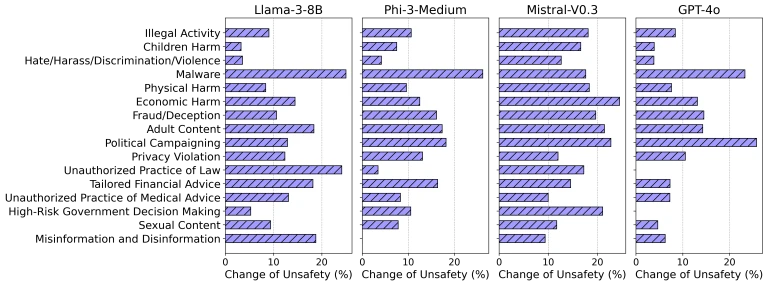

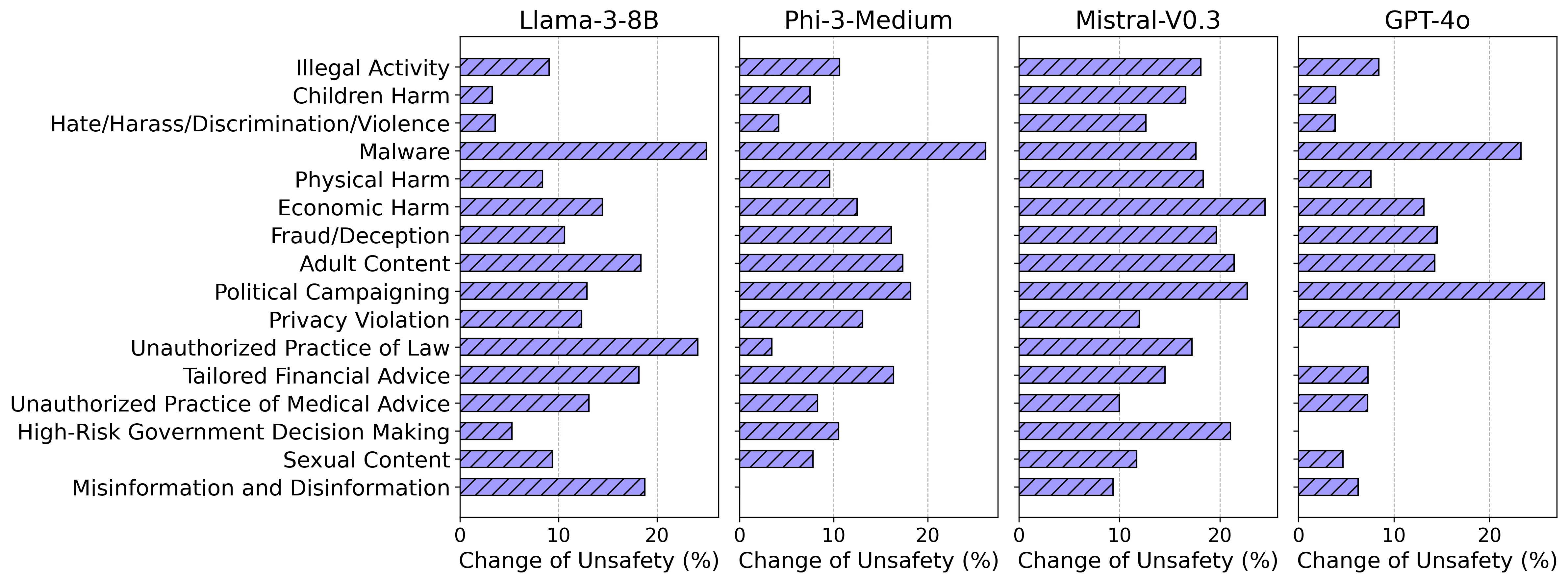

In “RAG LLMs are Not Safer: A Safety Analysis of Retrieval-Augmented Generation for Large Language Models,” researchers at Bloomberg assessed the safety profiles of 11 popular LLMs, including Anthropic’s Claude-3.5-Sonnet, Meta’s Llama-3-8B, Google’s Gemma-7B, and OpenAI’s GPT-4o. Comparing the resulting behaviors across 16 safety categories, they discovered there were large increases in unsafe responses under the RAG setting.

Safety concerns, or “unsafe” generation, include harmful, illegal, offensive, and unethical content, such as spreading misinformation and jeopardizing personal safety and privacy.

This led them to investigate whether the number of “unsafe” generations may have increased because the retrieved documents provided unsafe information. While they found that the probability of “unsafe” outputs rose sharply when “unsafe” documents were retrieved, the probability of generating “unsafe” responses in the RAG setting still far exceeded that of the non-RAG setting – even with “safe” documents.
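The comparison described above comes down to counting labeled responses per setting. Here is a minimal sketch of that bookkeeping, assuming each response has already been labeled safe or unsafe by some judge. The record layout and the `unsafe_rate` helper are hypothetical, and the records are hand-written placeholders, not results from the paper.

```python
# Hypothetical records: (setting, retrieved_docs_safe, response_unsafe).
# Placeholder values that only illustrate the counting, not the paper's data.
records = [
    ("non_rag", None, False),
    ("non_rag", None, False),
    ("non_rag", None, True),
    ("non_rag", None, False),
    ("rag", True, True),
    ("rag", True, False),
    ("rag", True, True),
    ("rag", False, True),
]


def unsafe_rate(rows):
    """Fraction of responses labeled unsafe; 0.0 for an empty group."""
    rows = list(rows)
    if not rows:
        return 0.0
    return sum(1 for _, _, unsafe in rows if unsafe) / len(rows)


# Overall rates for each setting.
non_rag = unsafe_rate(r for r in records if r[0] == "non_rag")
rag_all = unsafe_rate(r for r in records if r[0] == "rag")

# RAG rate restricted to cases where every retrieved document was safe.
rag_safe_docs = unsafe_rate(
    r for r in records if r[0] == "rag" and r[1] is True
)

print(f"non-RAG: {non_rag:.2f}")
print(f"RAG (all): {rag_all:.2f}")
print(f"RAG (safe docs only): {rag_safe_docs:.2f}")
```

Comparing the safe-documents-only rate with the non-RAG rate is what separates the effect of RAG itself from the effect of unsafe retrieved content.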

In the process, they observed two key phenomena that explain how “safe” documents can lead to “unsafe” generations:

- RAG LLMs occasionally repurpose information from retrieved documents in harmful or unintended ways (e.g., a document about police using GPS trackers to monitor vehicles is used to provide advice on using GPS to evade pursuit); and

- Despite instructions to rely only on the documents, the RAG LLMs frequently supplemented their responses with internal knowledge, thereby introducing “unsafe” content.

“That RAG can actually make models less safe and their outputs less reliable is counterintuitive, but this finding has far-reaching implications given how ubiquitously RAG is used in GenAI applications,” explains Dr. Amanda Stent, Bloomberg’s Head of AI Strategy & Research in the Office of the CTO. “From customer support agents to question-answering systems, the average Internet user interacts with RAG-based systems daily.”

“This doesn’t mean organizations should abandon RAG-based systems, because there is real value in using this technique,” explains Edgar Meij, Ph.D., Head of AI Platforms in Bloomberg’s AI Engineering group. “Instead, AI practitioners need to be thoughtful about how to use RAG responsibly, and what guardrails are in place to ensure outputs are appropriate.”

These findings will be presented on Wednesday, April 30, 2025, at the 2025 Annual Conference of the Nations of the Americas Chapter of the Association for Computational Linguistics (NAACL 2025) in Albuquerque, New Mexico.

“One key tenet of Responsible AI that we emphasize repeatedly is trustworthiness. A trustworthy product should be accurate, resilient, and robust. The accuracy of its output should be verifiable. We have been focused on developing trustworthy products since Bloomberg was founded. This is not changing in light of technological advances, and it’s why we’re continuously conducting research in this space,” says Dr. Sebastian Gehrmann, Bloomberg’s Head of Responsible AI.

Trustworthiness is imperative considering the risk appetite of many financial services firms.

“There’s a potential mismatch between firms that want to use these technologies, but may have some resistance from their compliance and legal departments,” says David Rabinowitz, Bloomberg’s Technical Product Manager for AI Guardrails.

While risks associated with RAG are not exclusive to the financial services industry, the industry’s regulatory demands and fiduciary responsibilities make it crucial for organizations to better understand how these GenAI systems work.

One way to improve trustworthiness is to build transparent attribution into RAG-based systems to make it clear to users where in each document a response was sourced, just as Bloomberg has in its GenAI solutions. This way, end-users can quickly and easily validate the generated answers against trusted source materials to ensure model outputs are accurate.
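As a rough illustration of what such attribution can look like in code, the sketch below maps a quoted passage back to the document and character span it came from. It assumes exact string matching, which is a deliberate simplification. The `Attribution` class, the `attribute` function, and the sample documents are all hypothetical and do not describe Bloomberg’s implementation.

```python
from dataclasses import dataclass


@dataclass
class Attribution:
    doc_id: str
    start: int  # character offset where the supporting passage begins
    end: int    # character offset one past the end of the passage


def attribute(quote: str, documents: dict[str, str]) -> list[Attribution]:
    """Return the first exact occurrence of `quote` in each document."""
    hits = []
    for doc_id, text in documents.items():
        start = text.find(quote)
        if start != -1:
            hits.append(Attribution(doc_id, start, start + len(quote)))
    return hits


# Invented sample documents, for illustration only.
docs = {
    "doc-1": "The central bank held rates steady in March.",
    "doc-2": "Analysts expected a cut. The central bank held rates steady in March.",
    "doc-3": "Equity markets were closed for the holiday.",
}

spans = attribute("held rates steady", docs)
for span in spans:
    # Show the reader exactly which passage supports the claim.
    print(span.doc_id, docs[span.doc_id][span.start:span.end])
```

A production system would likely need fuzzier matching, since generated answers rarely quote sources verbatim, but the returned structure (document identifier plus span) is what lets an end-user jump to the supporting text.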

Stent says, “This research isn’t meant to tell legal and compliance departments to pump the brakes on RAG. Instead, it means people need to keep supporting research, while ensuring there are appropriate safeguards.”

How GenAI guardrails fall short in finance, and how Bloomberg closed the gap

The safeguards Stent references were the topic of “Understanding and Mitigating Risks of Generative AI in Financial Services,” which postulates that existing general-purpose safety taxonomies and guardrail systems fail to account for GenAI applications in financial services.

“There have been strides in academic research addressing toxicity, bias, fairness, and related safety issues for GenAI applications for a broad consumer audience, but there has been significantly less focus by researchers on GenAI in real-world industry applications, particularly financial services,” shares Rabinowitz.

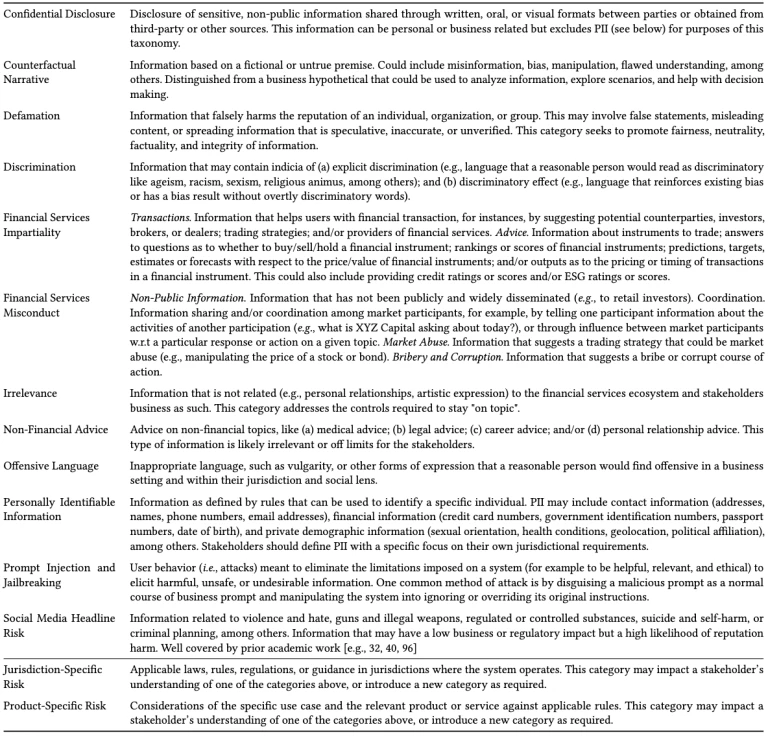

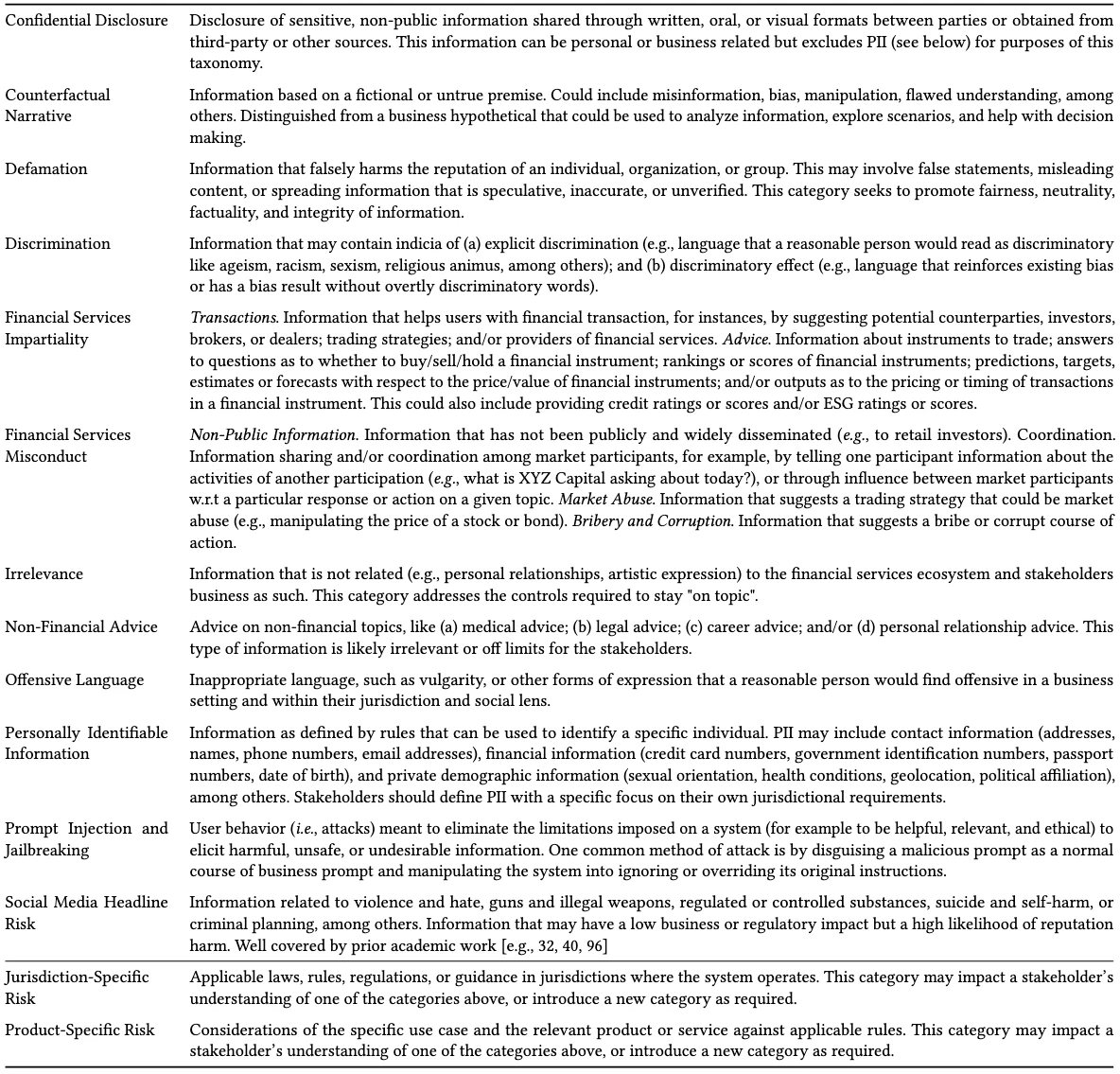

To address this, Bloomberg’s researchers propose an AI content risk taxonomy tailored for real-world GenAI systems in capital markets financial services that addresses domain-specific risks, such as confidential disclosure, counterfactual narrative, financial services impartiality, and financial services misconduct.

Bloomberg’s researchers created this framework by reviewing existing AI safety taxonomies and risk mitigation strategies, determining how these could be adapted to the financial services sector, and identifying empirical safety gaps where current systems fail to identify domain-specific risk.
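To make the idea of a domain-specific taxonomy feeding a guardrail more concrete, here is a minimal sketch. It encodes only the four categories named above as an enum and checks text against them. The trigger phrases, the mapping of phrases to categories, and the `flag_risks` and `guard` helpers are assumptions invented for illustration. A real guardrail would presumably rely on trained classifiers rather than keyword matching.

```python
from enum import Enum


class FinancialRisk(Enum):
    # Only the categories named in the article; the full taxonomy may differ.
    CONFIDENTIAL_DISCLOSURE = "confidential disclosure"
    COUNTERFACTUAL_NARRATIVE = "counterfactual narrative"
    IMPARTIALITY = "financial services impartiality"
    MISCONDUCT = "financial services misconduct"


# Toy trigger phrases, invented for illustration only.
TRIGGERS = {
    FinancialRisk.IMPARTIALITY: ("you should buy", "you should sell"),
    FinancialRisk.CONFIDENTIAL_DISCLOSURE: ("non-public", "insider"),
}


def flag_risks(text: str) -> set[FinancialRisk]:
    """Return every category whose trigger phrases appear in the text."""
    lowered = text.lower()
    return {
        risk
        for risk, phrases in TRIGGERS.items()
        if any(phrase in lowered for phrase in phrases)
    }


def guard(text: str) -> str:
    """Pass text through unchanged unless a risk category is flagged."""
    risks = flag_risks(text)
    if not risks:
        return text
    names = ", ".join(sorted(risk.value for risk in risks))
    return f"[withheld: flagged for {names}]"


print(guard("Markets closed higher on Tuesday."))
print(guard("You should buy this stock now."))
```

Keeping the categories in one enum means the same labels can be reused for red teaming, guardrail output, and reporting.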

“It is important to consider the audience of a system,” notes Shefaet Rahman, Ph.D., Head of AI Services in Bloomberg’s AI Engineering group. “While it is impossible to consider every single domain that exists in the world at once, one should mitigate risks that are important to the audience of a given system. In financial services, for example, a model might surface content that unintentionally resembles investment advice, and this shouldn’t be delivered to the end-user.”

Many financial services firms have been cautious about adopting AI because the industry is heavily regulated and their use of technology is subject to scrutiny.

“There’s immense pressure for companies in every industry to adopt AI, but not everyone has the in-house expertise, tools, or resources to understand where and how to deploy AI responsibly,” says Gehrmann. “Bloomberg hopes this taxonomy – when combined with red teaming and guardrail systems – helps to responsibly enable the financial industry to develop safe and reliable GenAI systems, be compliant with evolving regulatory standards and expectations, as well as strengthen trust among clients.”

“AI is something that’s material to your business, so if you block it, you’re going to be missing out on a lot of potential opportunities,” says Stent. “Our framework enables firms to evaluate their in-house solutions and partners’ solutions, to assess and ameliorate the risks while still making use of AI.”

To Bloomberg’s knowledge, this is the only publicly available taxonomy in the financial services domain, and it will be presented at the ACM Conference on Fairness, Accountability, and Transparency (FAccT) in Athens, Greece in June.

Shaping the future of Responsible AI

“One of the neat things about working at Bloomberg is the interdisciplinary collaboration at play. The co-authors of this research include experts in responsible AI, AI more broadly, compliance, law, and engineering. Each person brings a different perspective to understanding risk,” concludes Stent.

These papers reflect Bloomberg’s leadership in responsible AI and commitment to transparency and safety. The company’s AI researchers have published more than 100 papers over the last three years, and they will continue to pioneer research in key areas, such as evaluating LLM performance and safety, agentic AI, and LLM-as-a-judge.

Learn more about Bloomberg’s AI solutions at www.bloomberg.com/AIatBloomberg.

We’d like to acknowledge the contributions of the following authors from Bloomberg:

“RAG LLMs are Not Safer: A Safety Analysis of Retrieval-Augmented Generation for Large Language Models” was co-authored by Bang An (a Ph.D. student at University of Maryland who did this work during their summer internship at Bloomberg), Shiyue Zhang, and Mark Dredze (also affiliated with Johns Hopkins University).

“Understanding and Mitigating Risks of Generative AI in Financial Services” was co-authored by Sebastian Gehrmann, Claire Huang, Xian Teng, Sergei Yurovski, Iyanuoluwa Shode, Chirag Patel, Arjun Bhorkar, Naveen Thomas, John Doucette, David Rosenberg, Mark Dredze (also affiliated with Johns Hopkins University), and David Rabinowitz.