Bloomberg’s AI Researchers Publish 7 Papers at EMNLP 2025

November 03, 2025

At the 2025 Conference on Empirical Methods in Natural Language Processing (EMNLP 2025) in Suzhou, China this week (November 4-9, 2025), researchers from Bloomberg’s AI Engineering group and its BLAW AI Engineering group are showcasing their expertise in natural language processing (NLP) and large language models, retrieval-augmented language models, question answering, information extraction and retrieval, and more by publishing three (3) papers in the main conference and four (4) papers in Findings of the ACL: EMNLP 2025. Through these papers, the authors and their research collaborators highlight a variety of use cases and applications, novel approaches and improved models used in key tasks, and other advances to the state-of-the-art in the field of NLP.

In addition, BLAW AI engineer Leslie Barrett and Bloomberg AI Research Scientist Daniel Preoţiuc-Pietro are two of the organizers of the Natural Legal Language Processing Workshop taking place at EMNLP 2025 on November 8, 2025. The goal of this full-day workshop, which features more than 35 presentations this year, is to bring together researchers and practitioners from around the world who develop NLP techniques for legal data. Daniel also served on the conference’s Best Paper Committee.

We asked some of our authors to summarize their research and explain why their results were notable:

Improving Instruct Models for Free: A Study on Partial Adaptation

Ozan İrsoy (Bloomberg), Pengxiang Cheng (Bloomberg), Jennifer L. Chen (Independent Researcher), Daniel Preoţiuc-Pietro (Bloomberg), Shiyue Zhang (Bloomberg), Duccio Pappadopulo (Bloomberg)

Virtual Poster Session (Wednesday, November 5 @ 8:00–9:00 AM CST)

Please summarize your research. Why are your results notable?

Shiyue: One common use for LLMs is to perform so-called “few-shot in-context learning” tasks, where the LLM is provided with a prompt containing a task description and a few examples of how to perform the task. Instruct models are best in this setup (e.g., for classification-based tasks using a few examples in the prompt).

In this paper, we show that we can obtain even better results by doing a weighted merging of model weights between the base model, obtained after only pretraining, and the instruct model, which underwent rounds of post-training using supervised or feedback data.

We observe performance increases, averaging 1.3%, on 17 of the 18 open-weight models we tested (including Llama, Mistral, OLMo, and Gemma) on 21 datasets spanning six broad types of tasks, such as classification, named-entity recognition (NER), and extractive QA. As expected, instruction-following abilities degrade (as measured using AlpacaEval 2.0), but for common adaptation weights the degradation is small, while gains on in-context learning are still significant.
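The weighted merge described above can be illustrated with a small sketch. It assumes a plain linear interpolation controlled by a single coefficient, here called `lam` (a hypothetical name), and uses dictionaries of float lists as a toy stand-in for real model tensors. It is not the authors' implementation.

```python
from typing import Dict, List

# Toy stand-in for model checkpoints: parameter name -> flattened values.
Weights = Dict[str, List[float]]


def merge_weights(base: Weights, instruct: Weights, lam: float) -> Weights:
    """Interpolate every parameter as (1 - lam) * base + lam * instruct."""
    if base.keys() != instruct.keys():
        raise ValueError("base and instruct must share the same parameter names")
    merged: Weights = {}
    for name, base_vals in base.items():
        inst_vals = instruct[name]
        if len(base_vals) != len(inst_vals):
            raise ValueError(f"shape mismatch for parameter {name!r}")
        merged[name] = [
            (1.0 - lam) * b + lam * i for b, i in zip(base_vals, inst_vals)
        ]
    return merged


# Hypothetical two-parameter "models" for illustration only.
base = {"layer.weight": [0.0, 2.0], "layer.bias": [1.0]}
instruct = {"layer.weight": [1.0, 4.0], "layer.bias": [3.0]}

# lam = 1.0 recovers the instruct model, lam = 0.0 the base model.
partially_adapted = merge_weights(base, instruct, lam=0.5)
```

In this sketch, values of `lam` slightly below 1.0 would correspond to a model that stays close to the instruct version while moving part of the way back toward the base model.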

How does your research advance the state-of-the-art in the field of natural language processing?

Duccio: Most open-weight LLMs, such as the Llama, Qwen or OLMo series, are provided in both base and instruct versions. Our paper shows that if in-context learning is the application for the LLM, one can obtain a better model by performing a weighted average over the two versions of the model. In addition to being directly useful, this finding also highlights that the learning dynamics in post-training are still not fully understood and that more potential efficiencies remain to be unlocked.

Can LLMs Find a Needle in a Haystack? A Look at Anomaly Detection

Leslie Barrett (Bloomberg), Vikram Sunil Bajaj (Bloomberg), Robert Kingan (Bloomberg)

Virtual Poster Session (Wednesday, November 5 @ 8:00–9:00 AM CST)

Please summarize your research. Why are your results notable?

Leslie: Anomaly Detection (AD), also known as Outlier Detection, is a long-standing problem in machine learning that has recently been applied to text data. Our research introduces a novel approach utilizing Large Pretrained Language Models (LLMs) for textual anomaly detection across binary, multi-class, and unlabeled modalities. We use widely used public datasets for this task, including 20Newsgroups, Reuters-21578, and WikiPeople, each of which denotes outlier and inlier texts using topic labels and has a different concentration of inlier and outlier texts.

The binary modality frames the problem as a binary classification task (inlier/outlier), the multi-class modality is a multi-class topic classification, and the unlabeled modality asks, “Which of these texts do not belong?”

A key finding is that LLMs, specifically GPT-4o and Claude-3.5-Sonnet, surpass baselines when anomaly detection is framed as an imbalanced classification problem. However, a significant limitation was observed in unlabeled anomaly detection, where LLMs performed poorly, suggesting a struggle with identifying anomalies without explicit class information. These results also did not show any sensitivity to the concentrations of anomalous texts that previous studies suggested.

How does your research advance the state-of-the-art in the field of natural language processing?

Our research provides a deeper understanding of LLMs’ capabilities and, more importantly, their limitations in textual anomaly detection. It pushes the boundaries of their application in such textual analysis tasks by identifying specific contexts where LLMs excel, as well as where further research is needed to enhance their performance, particularly in generalized, unlabeled textual anomaly discovery.


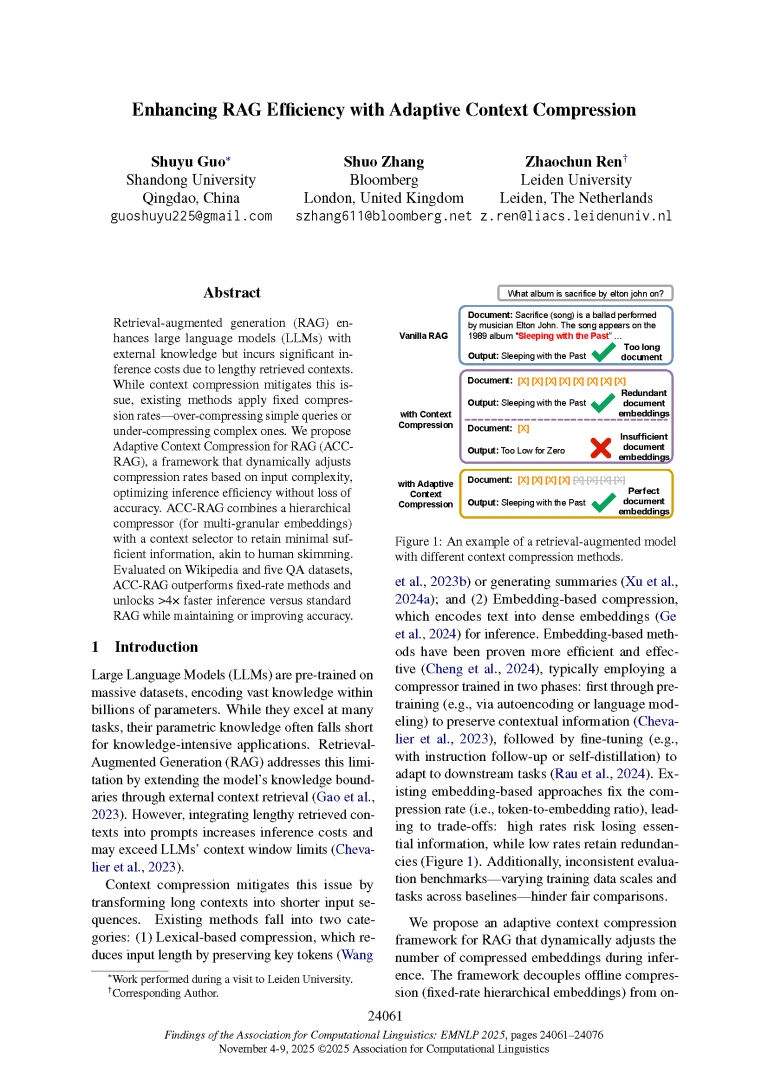

Enhancing RAG Efficiency with Adaptive Context Compression

Shuyu Guo (Shandong University), Shuo Zhang (Bloomberg), Zhaochun Ren (Leiden University)

Hall C Level 3 – Session 3: Findings 1 (Wednesday, November 5 @ 1:00–2:00 PM CST)

Please summarize your research. Why are your results notable?

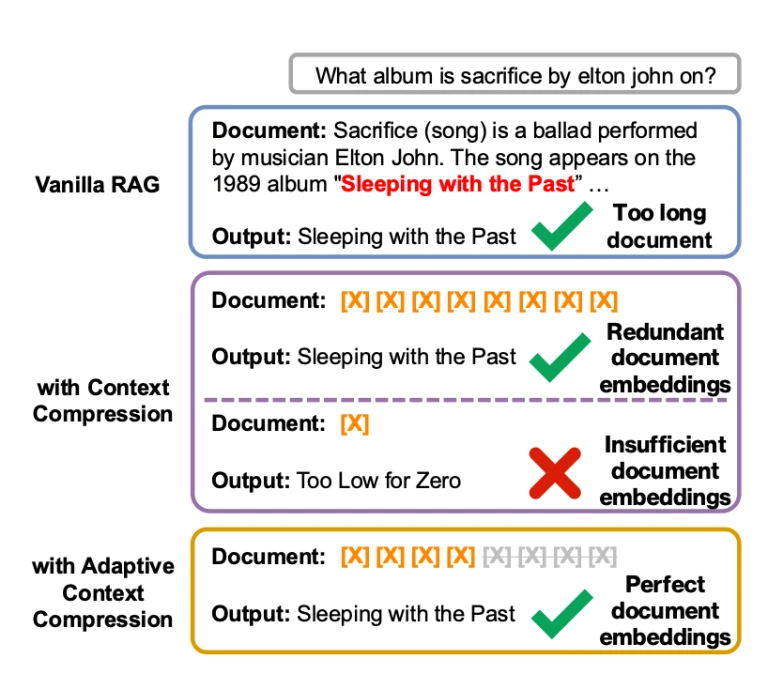

Shuo: Our research tackles a growing challenge in retrieval-augmented generation (RAG), or AI systems that combine search with large language models. While RAG is powerful because it gives the AI system access to outside knowledge, it often slows the system down dramatically since the model has to read and process huge chunks of retrieved text, much of which is repetitive or unnecessary.

To solve this, we introduced Adaptive Context Compression for RAG (ACC-RAG). Think of it as a way to teach the AI system to “skim smartly” — similar to how people don’t read every single word in an article but focus on the most relevant parts. Our system automatically decides how much information to keep depending on the difficulty of the question: simple questions get a lighter, faster summary, while tougher ones keep more details.

In our paper, Figure 1 (above) illustrates this with a simple example: determining which album the song “Sacrifice” by Elton John can be found on. Standard RAG either overloads the model with too much text or, when compressed in a fixed way, cuts out key details — both of which lead to mistakes. In contrast, our adaptive method keeps just the right amount of context, allowing the system to give the correct answer.

The results are exciting:

- Over 4x faster inference compared to standard RAG

- Accuracy that is equal to, or even better than, existing methods

- Strong performance not just in one setting, but across five widely used question-answering benchmarks

In short, we show that efficiency doesn’t need to come at the cost of accuracy — and this technique opens the door for faster, more scalable AI systems.

How does your research advance the state-of-the-art in the field of natural language processing?

Today’s state-of-the-art AI models are becoming more capable, but they also consume more energy and time when faced with longer inputs. Our work directly addresses this by introducing adaptivity into compression — instead of applying a one-size-fits-all reduction, our method dynamically adjusts in real time.

This is the first unified framework to combine:

- Hierarchical compression (breaking text into different levels of detail), and

- Adaptive selection (deciding on the fly how much detail is enough).

This approach makes RAG systems both smarter and more sustainable, enabling them to more efficiently serve real-world applications like search assistants, chatbots, or enterprise knowledge systems. We also set up a fair benchmark so that future research on context compression can be compared under consistent conditions.
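To make the idea of adaptive selection concrete, here is a toy sketch. It assumes the retrieved context is already available at several levels of detail, ordered from coarse to fine, and that some check can judge whether the context gathered so far is enough. The function names and the keyword-based check are hypothetical stand-ins, not the ACC-RAG implementation, which the summary above does not describe at this level of detail.

```python
from typing import Callable, List, Sequence


def select_context(
    levels: Sequence[str],
    is_sufficient: Callable[[List[str]], bool],
) -> List[str]:
    """Add context from coarse to fine and stop once it is judged sufficient."""
    chosen: List[str] = []
    for chunk in levels:
        chosen.append(chunk)
        if is_sufficient(chosen):
            break
    return chosen


def contains_all(terms: Sequence[str]) -> Callable[[List[str]], bool]:
    """Hypothetical sufficiency check: every term must appear in the context."""
    def check(context: List[str]) -> bool:
        text = " ".join(context).lower()
        return all(term in text for term in terms)
    return check


# Illustrative context levels, ordered from coarse to fine.
levels = [
    "Elton John is an English singer and songwriter.",
    'The song "Sacrifice" appears on the album "Sleeping with the Past".',
    "Additional passage-level detail retrieved for the query.",
]

# A simple question is satisfied by the coarse level alone.
easy = select_context(levels, contains_all(["elton john"]))
# A harder question pulls in one more level of detail.
hard = select_context(levels, contains_all(["sacrifice", "album"]))
```

In a real system the sufficiency check would be a learned component rather than a keyword match; the sketch only shows how the amount of context passed to the model can vary with the question.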

By showing that faster can still mean better, our work takes a key step toward practical, responsible AI deployment.

Can LLMs Be Efficient Predictors of Conversational Derailment?

Kaustubh Olpadkar (Bloomberg), Vikram Sunil Bajaj (Bloomberg), Leslie Barrett (Bloomberg)

Virtual Poster Session (Thursday, November 6 @ 8:00–9:00 AM CST)

Please summarize your research. Why are your results notable?

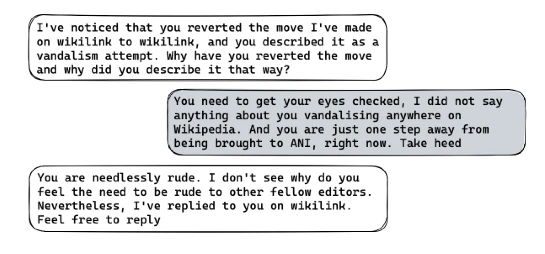

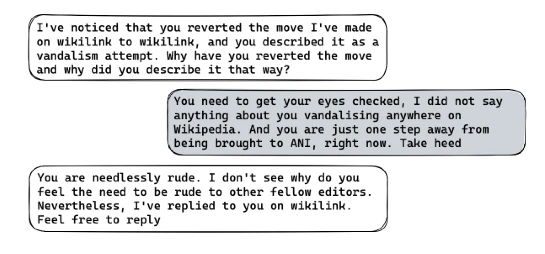

Kaustubh: Online discussions often start civilly but can quickly derail into personal attacks and toxic behavior. The challenge for online platforms is to catch this shift before it happens, not just clean up the mess afterward. Building specialized AI models for this is often slow and expensive.

Our research explored a simpler, more direct approach: can we use general-purpose Large Language Models (LLMs) like GPT-4o and Claude 3.5 Sonnet to predict conversational derailment “out of the box”? We did this without any costly, task-specific fine-tuning. Instead, we rely on carefully designed instructions, or prompts.

The results are notable because this simple method works remarkably well. Our experiments on public conversational datasets from Wikipedia and Reddit show that prompted LLMs can match and even outperform highly specialized models. Crucially, they can also forecast derailment earlier in the conversation, giving human moderators more time to intervene. This makes powerful, proactive moderation tools much more accessible, enabling platforms to deploy effective solutions quickly without a massive engineering effort.
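As a rough illustration of prompt-based forecasting, the sketch below formats a conversation prefix into an instruction and reports the first turn at which a classifier raises a warning. The prompt wording, the function names, and the keyword stub that replaces a real LLM call are all assumptions made for this example and are not taken from the paper.

```python
from typing import Callable, List, Optional


def build_prompt(turns: List[str]) -> str:
    """Format a conversation prefix as a zero-shot instruction (hypothetical wording)."""
    history = "\n".join(f"Turn {i}: {text}" for i, text in enumerate(turns, start=1))
    return (
        "Below is the start of an online conversation. Will it later derail "
        "into a personal attack? Answer 'yes' or 'no'.\n\n" + history
    )


def first_warning_turn(
    turns: List[str], classify: Callable[[str], bool]
) -> Optional[int]:
    """Return the 1-based turn after which derailment is first predicted, if any."""
    for n in range(1, len(turns) + 1):
        if classify(build_prompt(turns[:n])):
            return n
    return None


def keyword_stub(prompt: str) -> bool:
    """Placeholder for an LLM call; flags prompts that contain a hostile cue."""
    return "ridiculous" in prompt.lower()


conversation = [
    "I think the article needs more sources.",
    "That claim is ridiculous, did you even read it?",
    "You clearly have no idea what you are talking about.",
]
warning_turn = first_warning_turn(conversation, keyword_stub)
```

Swapping `keyword_stub` for a function that sends the prompt to an LLM and parses its yes/no answer would turn this into a prompted forecaster; an earlier `warning_turn` leaves moderators more time to intervene.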

How does your research advance the state-of-the-art in the field of natural language processing?

This research advances the state-of-the-art by demonstrating a paradigm shift for a complex forecasting task. Previously, the cutting edge relied on purpose-built, complex neural architectures (like graph-based networks or hierarchical transformers) for predicting derailment. We show that massive, pre-trained LLMs already possess this capability and can be guided to achieve state-of-the-art results through prompting alone.

Our work also introduces a pragmatic focus on the cost-performance trade-off, an element that is crucial for real-world applications but often overlooked in research. We provide a clear analysis that shows how organizations can choose the right AI model for their budget. For example, smaller models like GPT-4o-mini offer nearly competitive accuracy at a fraction of the cost of their larger counterparts.

By establishing that off-the-shelf models can achieve superior results, especially in providing earlier warnings about derailment, our work sets a new, more practical benchmark for the field and opens a path for more scalable and efficiently deployed moderation systems.

STARQA: A Question Answering Dataset for Complex Analytical Reasoning over Structured Databases

Mounica Maddela (Bloomberg), Lingjue Xie (Bloomberg), Daniel Preoţiuc-Pietro (Bloomberg), Mausam** (Yardi School of Artificial Intelligence, Indian Institute of Technology, Delhi)

Hall C Level 3 – Session 11: Orals/Posters E | Poster & Demo Presentations (Thursday, November 6 @ 4:30–6:00 PM CST)

Please summarize your research. Why are your results notable?

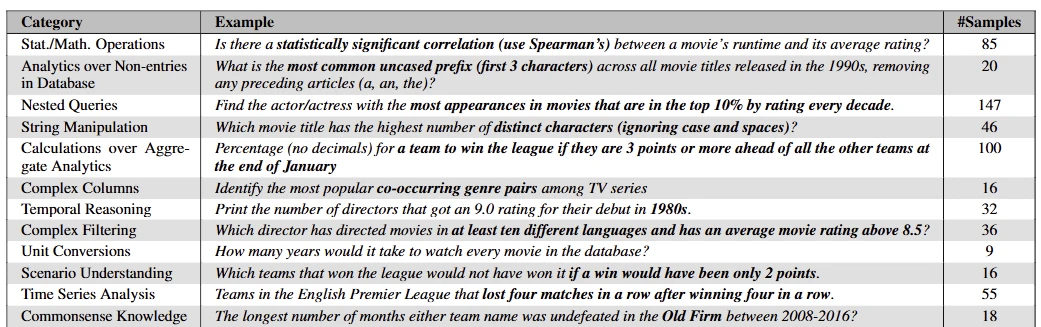

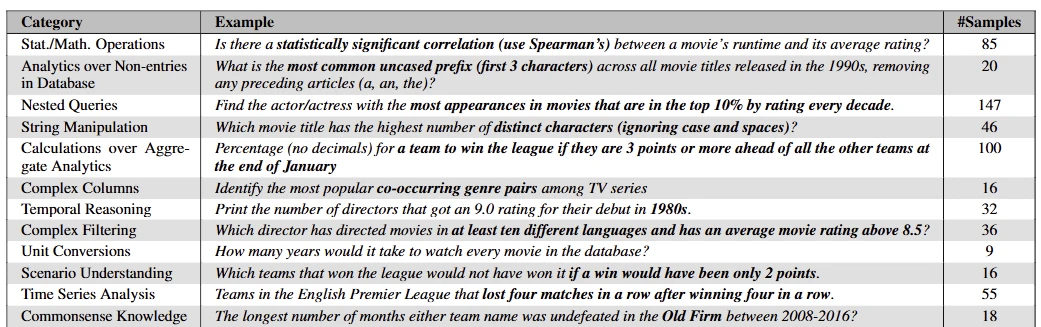

Mounica: We introduce a new human-created question-answer dataset of ~400 complex analytical reasoning questions over SQL databases. Each question requires one or more types of reasoning, such as math operations, time-series analysis, nested queries, calculations over aggregate analytics, temporal reasoning, and scenario understanding. The dataset includes questions on three specialized domain databases on movies, football, and e-commerce. Examples of questions can be seen in the figure above.

We benchmark existing state-of-the-art commercial and open-weight LLMs on our dataset and find that the dataset is quite challenging, with the best performance reaching only 48.3%. The types of reasoning required for answering STARQA questions raise a question about whether SQL syntax alone is the best choice for obtaining the correct answer.

Cases that require operations such as nested loops, complex conditional logic or data manipulation can lead to very complex SQL syntax and long queries, which can be cumbersome for a model to generate. To obtain the best performance, we introduce the approach of decomposing the problem of generating an answer into multiple steps to be executed in different languages: SQL is responsible for data fetching, while Python more naturally performs reasoning and analytics.
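The division of labor between SQL and Python can be sketched on a toy example. The table, its columns, and the question ("which month had the highest month-over-month revenue growth?") are hypothetical and not drawn from STARQA; the sketch only shows SQL handling data fetching and Python handling the analytical step.

```python
import sqlite3
from typing import List, Tuple

# Hypothetical toy schema and data, created in memory for illustration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_month TEXT, revenue REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("2024-01", 100.0), ("2024-01", 50.0), ("2024-02", 180.0), ("2024-03", 90.0)],
)

# Step 1 (SQL): fetch and aggregate the data needed for the question.
rows = conn.execute(
    "SELECT order_month, SUM(revenue) FROM orders "
    "GROUP BY order_month ORDER BY order_month"
).fetchall()
conn.close()


# Step 2 (Python): time-series reasoning that is awkward to express in SQL.
def month_over_month_growth(
    monthly: List[Tuple[str, float]]
) -> List[Tuple[str, float]]:
    """Return (month, percent change versus the previous month) pairs."""
    return [
        (cur_month, (cur_total - prev_total) / prev_total * 100.0)
        for (_, prev_total), (cur_month, cur_total) in zip(monthly, monthly[1:])
    ]


growth = month_over_month_growth(rows)
best_month = max(growth, key=lambda pair: pair[1])[0]
```

Keeping the SQL short and moving the comparison logic into Python is the design choice this sketch is meant to show: each language does the part it expresses most naturally.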

How does your research advance the state-of-the-art in the field of natural language processing?

Obtaining answers to complex analytical questions over specialized databases – or potentially proprietary ones – is a cornerstone of financial analysts’ work. LLMs that convert natural language queries to SQL statements can facilitate this; yet, they still lack the ability to reliably answer realistic questions of greater complexity.

We believe our dataset can facilitate future developments in this domain by allowing everyone to benchmark LLMs on the types of reasoning categories we introduce. Furthermore, our proposed framework for answering questions by decomposing the solution across multiple programming languages can be further extended to agentic workflows that plan, examine, and correct their mistakes or invoke other tools, as well as to other combinations of programming languages.

**most of the work was done when the author was on a sabbatical at Bloomberg

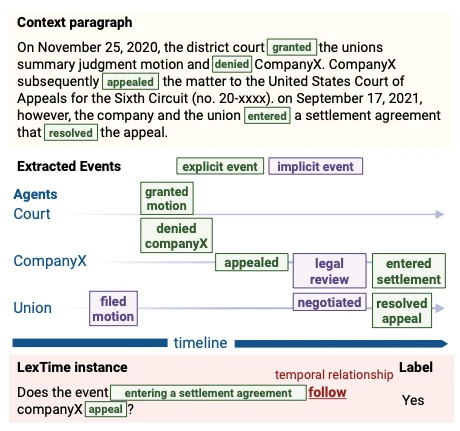

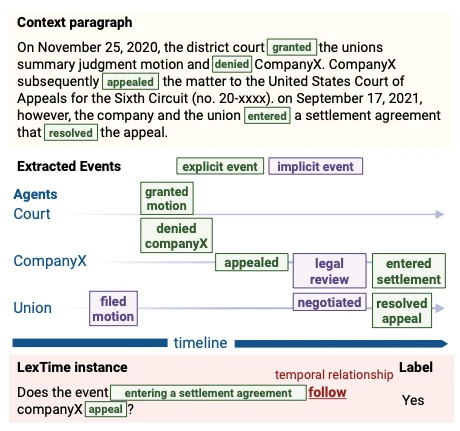

LEXTIME: A Benchmark for Temporal Ordering of Legal Events

Claire Barale** (University of Edinburgh), Leslie Barrett (Bloomberg), Vikram Sunil Bajaj (Bloomberg), Michael Rovatsos (University of Edinburgh)

Session 14: Findings 3 (Friday, November 7 @ 12:30–1:30 PM CST)

Please summarize your research. Why are your results notable?

Vikram: Our research contributions are twofold: First, we provide a dataset of paired events from U.S. court complaints that are annotated for event ordering. Second, we show results from LLMs on the task of predicting event ordering in this legal dataset compared to predictions on a benchmark event-ordering dataset. LLMs perform well despite certain complexities of legal language. This suggests that the longer contexts found in legal language may benefit large models.

How does your research advance the state-of-the-art in the field of natural language processing?

Our findings show that while LLMs are more accurate on this legal dataset than on narrative benchmarks, accuracy is still constrained by the complexities of legal discourse, such as paraphrasing. We show that longer input contexts and implicit/explicit event pairs improve model accuracy, offering practical insights for optimizing LLM-based legal NLP applications. However, Chain of Thought (CoT) prompting does not improve accuracy. These results highlight the need for domain adaptation, with future work having the potential to exploit identified errors and linguistic structure to align models with domain-specific language.

**work done during the author’s Bloomberg Data Science Ph.D. Fellowship

Calibrating LLMs for Text-to-SQL Parsing by Leveraging Sub-clause Frequencies

Terrance Liu* (Carnegie Mellon University), Shuyi Wang (Bloomberg), Daniel Preoţiuc-Pietro (Bloomberg), Yash Chandarana (Bloomberg), Chirag Gupta (Bloomberg)

Hall C Level 3 – Session 15: Orals/Posters G | Poster & Demo Presentations (Friday, November 7 @ 2:00–3:30 PM CST)

Please summarize your research. Why are your results notable?

Chirag: LLMs are notorious for being confident when providing answers, even when these are incorrect. Calibration represents the ability of a model to produce an accurate uncertainty score associated with its produced output.

In this paper, we introduce a new method for calibrating the text-to-SQL outputs of LLMs that can be applied post-hoc (that is, without retraining the LLM). The method, named multivariate Platt scaling, uses SQL sub-clause frequency over multiple outputs sampled from an LLM as a finer-grained signal of uncertainty. A model for post-hoc calibration is trained over these frequencies to obtain better calibration performance on the popular Spider and BIRD benchmarks as compared to only using output consistency.
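A simplified sketch of this idea follows. It assumes a crude keyword-based split into sub-clauses (a real system would use a SQL parser), exact-match agreement per sub-clause, and a small logistic regression fitted by gradient descent as the calibrator. All function names and the toy training data are hypothetical; this is not the paper's implementation.

```python
import math
import re
from typing import Dict, List, Sequence

# Assumption: a keyword split stands in for a proper SQL parser.
CLAUSES = ("SELECT", "FROM", "WHERE", "GROUP BY", "ORDER BY")
_SPLIT = re.compile(r"\b(SELECT|FROM|WHERE|GROUP BY|ORDER BY)\b", re.IGNORECASE)


def split_clauses(sql: str) -> Dict[str, str]:
    """Map each clause keyword to its lowercased, whitespace-normalized body."""
    parts = _SPLIT.split(sql.strip())  # [prefix, keyword, body, keyword, body, ...]
    return {
        kw.upper(): " ".join(body.lower().split())
        for kw, body in zip(parts[1::2], parts[2::2])
    }


def clause_frequencies(candidate: str, samples: Sequence[str]) -> List[float]:
    """Per clause type, the fraction of samples that agree with the candidate."""
    cand = split_clauses(candidate)
    parsed = [split_clauses(s) for s in samples]
    return [sum(p.get(c) == cand.get(c) for p in parsed) / len(parsed) for c in CLAUSES]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def confidence(w: List[float], x: List[float]) -> float:
    """Calibrated probability of correctness; the last weight is the bias."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + w[-1])


def fit_platt(features, labels, lr: float = 0.5, epochs: int = 2000) -> List[float]:
    """Fit logistic regression over the frequency vectors by gradient descent."""
    dim = len(features[0])
    w = [0.0] * (dim + 1)
    for _ in range(epochs):
        grad = [0.0] * (dim + 1)
        for x, y in zip(features, labels):
            err = confidence(w, x) - y
            for j, xj in enumerate(x):
                grad[j] += err * xj
            grad[-1] += err
        w = [wi - lr * g / len(features) for wi, g in zip(w, grad)]
    return w


samples = [
    "SELECT name FROM users WHERE age > 30",
    "SELECT name FROM users WHERE age > 30",
    "SELECT name FROM users WHERE age >= 30",
    "SELECT id FROM users WHERE age > 30",
]
feats = clause_frequencies(samples[0], samples)

# Hypothetical held-out frequency vectors with correctness labels (1 = correct).
train_x = [[1.0, 1.0, 1.0, 1.0, 1.0], [0.9, 1.0, 0.8, 1.0, 1.0],
           [0.3, 1.0, 0.2, 1.0, 1.0], [0.5, 0.6, 0.1, 1.0, 1.0]]
train_y = [1, 1, 0, 0]
weights = fit_platt(train_x, train_y)
score = confidence(weights, feats)
```

The point of the sketch is that each sub-clause contributes its own agreement signal, so the calibrator can learn that disagreement in some clauses matters more than in others, instead of collapsing all samples into a single consistency score.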

How does your research advance the state-of-the-art in the field of natural language processing?

Code generation and semantic parsing enable the creation of natural language interfaces that can interact with the trove of data available in structured repositories, such as relational databases. Prior to our work, calibration has mostly been studied for tasks such as classification and regression, but we benchmark calibration and propose a new calibration method for the complex task of semantic parsing.

The ability to provide well-calibrated answers from a semantic parser has multiple applications, such as: a) detecting errors in generations in order to attempt corrections before showing the output to users; b) enabling straight-through processing when the LLM is confident; and c) generating suggestions or asking the user for clarification when the model is not confident.